|

Visual Servoing Platform

version 3.6.1 under development (2025-02-18)

|

|

Visual Servoing Platform

version 3.6.1 under development (2025-02-18)

|

The Unscented Kalman Filter (UKF) is a version of the Kalman filter that handles non-linearities.

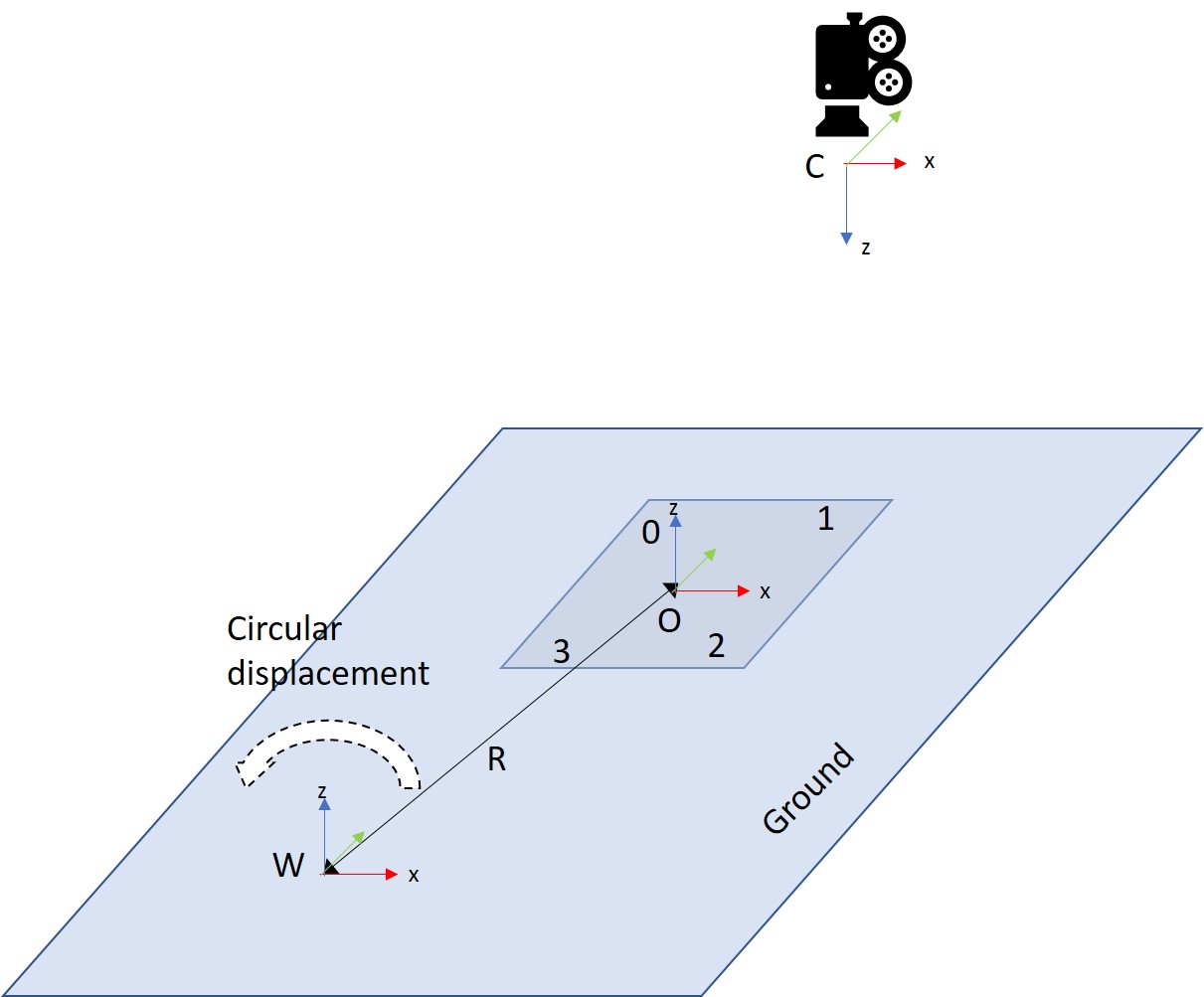

In this tutorial, we will use a UKF to filter the 3D position of a simulated object, which revolves in a plane parallel to the ground around a static point, which is the origin of the world frame ![]() . The coordinate frame attached to the object is denoted

. The coordinate frame attached to the object is denoted ![]() . The object is observed by a static camera whose coordinate frame is denoted

. The object is observed by a static camera whose coordinate frame is denoted ![]() . The object is supposed plane and having four markers sticked on its surface.

. The object is supposed plane and having four markers sticked on its surface.

The equations that describe the motion of the object in the world frame are the following:

![]()

where  and

and ![]() are respectively the pulsation and the phase of the motion, while

are respectively the pulsation and the phase of the motion, while ![]() is the radius of the revolution around the origin of the world frame.

is the radius of the revolution around the origin of the world frame.

The maths beyond the Unscented Kalman Filter are explained in the documentation of the vpUnscentedKalman class. We will recall briefly the important steps of the UKF.

Be ![]() the internal state of the UKF and

the internal state of the UKF and ![]() the associated covariance matrix.

the associated covariance matrix.

The first step of the UKF is the prediction step. During this step, some particular points called sigma points, denoted ![]() , are drawn along with associated weights

, are drawn along with associated weights ![]() for the computation of the mean and

for the computation of the mean and ![]() for the computation of the covariance:

for the computation of the covariance:

![]()

There are different ways of drawing the sigma points and associated weights in the litterature, such as the one proposed by Julier or the one proposed by E. A. Wan and R. van der Merwe in [51].

Be ![]() the vector containing the known commands sent to the system, if any. Then, we pass each sigma point through the process function

the vector containing the known commands sent to the system, if any. Then, we pass each sigma point through the process function ![]() , the command function

, the command function ![]() and the command function depending on the state

and the command function depending on the state ![]() to project them forward in time, forming the new prior:

to project them forward in time, forming the new prior:

![]()

Finally, we apply the Unscented Transform to compute the mean ![]() and covariance

and covariance ![]() of the prior:

of the prior:

![]()

where ![]() is the covariance of the error introduced by the process function.

is the covariance of the error introduced by the process function.

The second step of the UKF is to update the internal state based on new measurements. It is performed in the measurement space, so we must convert the sigma points of the prior into measurements using the measurement function ![]() :

:

![]()

Then, we use once again the Unscented Transform to compute the mean ![]() and the covariance

and the covariance ![]() of these points:

of these points:

![]()

where ![]() is the measurement covariance matrix.

is the measurement covariance matrix.

Then, we compute the residual ![]() of the measurement

of the measurement ![]() :

:

![]()

To compute the Kalman's gain, we first need to compute the cross covariance of the state and the measurements:

![]()

The Kalman's gain is then defined as:

![]()

Finally, we can compute the new state estimate ![]() and the new covariance

and the new covariance ![]() :

:

![]()

To run the tutorial, please run the following commands:

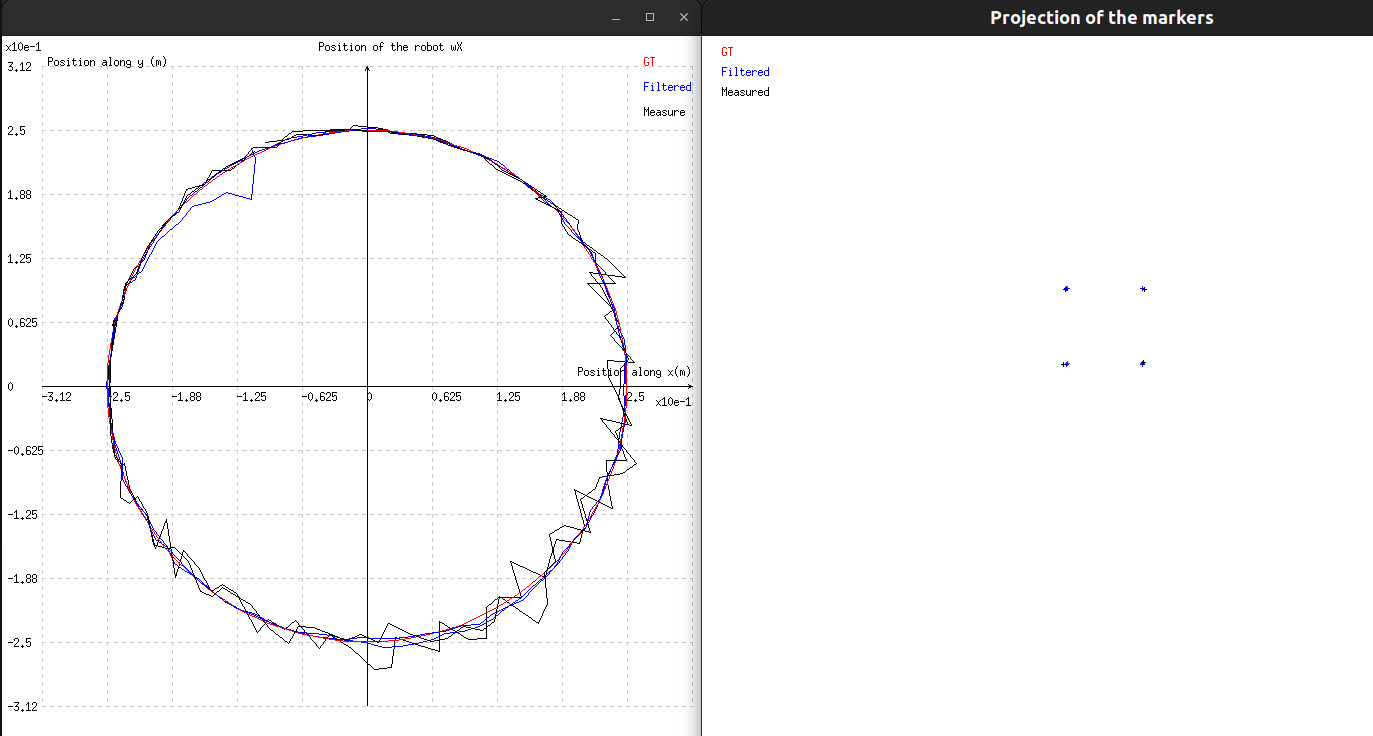

The program does not take any argument. You should see something similar to the following image:

Press Return to leave the program.

For this tutorial, we use the main program tutorial-ukf.cpp . The internal state of the UKF is the 3D position of the object expressed in the world frame, along with the pulsation  of the motion:

of the motion:

![\[ \begin{array}{lcl} \textbf{x}[0] &=& {}^WX_x \\ \textbf{x}[1] &=& {}^WX_y \\ \textbf{x}[2] &=& {}^WX_z \\ \textbf{x}[3] &=& \omega \Delta t \end{array} \]](form_1719.png)

The measurement ![]() corresponds to the perspective projection of the different markers in the image. Be

corresponds to the perspective projection of the different markers in the image. Be ![]() and

and ![]() the horizontal and vertical pixel coordinates of the

the horizontal and vertical pixel coordinates of the ![]() marker. The measurement vector can be written as:

marker. The measurement vector can be written as:

![]()

Be ![]() the camera instrinsic parameters matrix defined by:

the camera instrinsic parameters matrix defined by:

![]()

where ![]() are the coordinates of the principal point and

are the coordinates of the principal point and ![]() (resp.

(resp. ![]() ) is the ratio between the focal lens of the camera and the width (resp. height) of a pixel.

) is the ratio between the focal lens of the camera and the width (resp. height) of a pixel.

Be ![]() the projection matrix that is, in the case of a perspective projection model, given by:

the projection matrix that is, in the case of a perspective projection model, given by:

![]()

The perspective projection ![]() of a point

of a point ![]() is given by:

is given by:

![]()

where ![]() is the homogeneous matrix that expresses the pose of the world coordinate frame

is the homogeneous matrix that expresses the pose of the world coordinate frame ![]() with regard to the camera frame

with regard to the camera frame ![]() .

.

To have a Graphical User Interface (GUI), we include the following files.

To be able to use the UKF, we use the following includes:

To make simpler the main loop of the program, we decided to implement a class that will update the 3D position of the object, expressed in the world frame, in a dedicated class.

As mentionned in The maths beyond the Unscented Kalman Filter, the UKF relies on a process function which project in time the internal state of the UKF.

We want to express the internal state projected in the future ![]() as a function of its previous state

as a function of its previous state ![]() .

.

As stated in the Introduction, the equations of motion of the object are the following:

![]()

Thus, we have:

![]()

Which can be rewritten:

![]()

And can be finally written as:

![]()

This motivates us to choose the following non-linear process function:

![\[ \begin{array}{lclcl} \textbf{x}[0]_{t + \Delta t} &=& {}^WX_x (t + \Delta t) &=& \textbf{x}[0]_{t} cos(\textbf{x}[3]_{t}) - \textbf{x}[1]_{t} sin(\textbf{x}[3]_{t}) \\ \textbf{x}[1]_{t + \Delta t} &=& {}^WX_y (t + \Delta t) &=& \textbf{x}[0]_{t} sin(\textbf{x}[3]_{t}) + \textbf{x}[1]_{t} cos(\textbf{x}[3]_{t}) \\ \textbf{x}[2]_{t + \Delta t} &=& {}^WX_z (t + \Delta t) &=& \textbf{x}[2]_{t} \\ \textbf{x}[3]_{t + \Delta t} &=& \omega \Delta t &=& \textbf{x}[3]_{t} \end{array} \]](form_1754.png)

As the process function is pretty simple, a simple function called here fx() is enough:

The measurements of the projection of the markers in the image are handled by the following class:

It takes as input the camera parameters cam, the homogeneous matrix expressing the pose of the world frame ![]() with regard to the camera frame

with regard to the camera frame ![]()

cMw, the rotation matrix that expresses the rotation between the object frame and world frame wRo and the homogeneous coordinates of the markers expressed in the object frame markers to be able to convert the 3D position of the object in the world frame ![]() into 3D positions of the markers in the camera frame

into 3D positions of the markers in the camera frame ![]() , where

, where  denotes the i

denotes the i ![]() marker sticked on the object. The standard deviation of the noise

marker sticked on the object. The standard deviation of the noise noise_stdev and the seed value seed are here to initialized the Gaussian noise generator used to simulate noisy measurements.

The method state_to_measurement is used to convert the internal state of the UKF into the measurement space (i.e. the projection in the image of the markers sticked on the object if the object is at this 3D position):

The method measureGT is used to convert the ground truth 3D position of the object into ground truth projections of the markers in the image:

The method measureWithNoise adds noise to the ground truth measurements in order to simulate a noisy measurement process:

The method computePose compute the 3D pose of an object from the 3D coordinates along with their projection in the image. Here, we use it to convert the noisy measurements in a noisy 3D pose, in order to compare the 3D position estimated by the UKF with regard to the 3D position we would have if we computed the pose directly from the noisy measurements.

In the main loop of the program, we first declare some constants that will be used later on:

Here is their meanings:

dt is the sampling period (the time spent between two acquisitions), sigmaMeasurements is the standard deviation of the Gaussian noise added to the measurements, radius is the radius of the revolution of the object around the world frame origin, w is the pulsation of the motion of revolution, phi is the phase of the motion of revolution, markers is a vector containing the homogeneous coordinates expressed in the object frame of the markers, markersAsVpPoint is a vector containing the 3D coordinates of the markers expressed in the object (to compute the noisy pose as explained previously), seed is the seed for the Gaussian noise generator that adds noise to the projections of the markers in the image, cMw is the homogeneous matrix expressing the pose of the world frame with regard to the camera frame, wMo is the homogeneous matrix expressing the pose of the object frame with regard to the world frame, wRo is the rotation matrix contained in wMo wZ is the z-axis coordinate of the origin of the object frame expressed in the world frame.To convert the 3D position of the object into the projection of its markers in the image, we need camera parameters. We generate camera parameters for a simulated camera as follow:

To initialize the UKF, we need an object that will be able to compute the sigma points and their associated weights. To do so, we must create an instance of a class inheriting from the class vpUKSigmaDrawerAbstract. In our case, we decided to use the method proposed by E. A. Wan and R. van der Merwe in [51] and implemented in the vpUKSigmaDrawerMerwe class:

The first parameter is the dimension of the state of the UKF. The second parameter is  : the greater it is the further of the mean the sigma points are; it is a real and its value must be between 0 and 1. The third parameter is

: the greater it is the further of the mean the sigma points are; it is a real and its value must be between 0 and 1. The third parameter is ![]() : it is a real whose value is set to two for Gaussian problems. Finally, the last parameter is

: it is a real whose value is set to two for Gaussian problems. Finally, the last parameter is ![]() : it is a real whose value must be set to

: it is a real whose value must be set to ![]() for most problems.

for most problems.

The UKF needs the covariance matrix of the measurements ![]() that represents the uncertainty of the measurements:

that represents the uncertainty of the measurements:

The UKF needs the covariance matrix of the process ![]() that represents the uncertainty induced during the prediction step:

that represents the uncertainty induced during the prediction step:

The UKF needs an estimate of the intial state ![]() and of its covariance

and of its covariance ![]() :

:

Next, we initialize the process function and the measurement function:

Finally, we create the UKF and initialize its state:

If the internal state cannot use the standard addition and subtraction, it would be necessary to write other methods:

If some commands are known to have an effect on the internal state, it would be necessary to write other methods:

If the measurement space cannot use the standard addition and subtraction, it would be necessary to write other methods:

If ViSP has been compiled with any of the third-party graphical libraries, we first begin by initializing the plot that will display the object x and y coordinates expressed in the world frame:

Then, we initialize the simple renderer that displays what the camera sees:

For the initialization of the loop, we initialize an instance of the vpObjectSimulator class that simulates the moving object. Then, we initialize the current ground-truth 3D position of the object expressed in the world frame, which is the frame in which the internal state of the UKF is expressed, as a null homogeneous coordinates vector.

The main loop of the program is the following:

First, we update the ground-truth 3D position of the object based on the simulated time using the following line:

Then, we update the measurement by projecting the 3D position of the markers attached to the object in the image and add some noise to the projections using the following line:

Then, we use the Unscented Kalman Filter to filter the noisy measurements:

Finally, we update the plot and renderer:

First, we compute the noisy pose using the noisy measurements of the markers, to be able to plot the noisy 3D position of the object:

Then, we update the plot by plotting the new ground truth, filtered and noisy 3D positions:

Finally, we update the renderer that displays the projection in the image of the markers:

The program stops once the Return key is pressed.

You are now ready to see the next Tutorial: Using Particle Filter to filter your data.