With ViSP you can track a blob using either the vpDot or the vpDot2 class. By blob we mean a region of the image whose pixels share the same gray level. The blob can be white on a black background, or black on a white background.
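To make this definition concrete, here is a minimal sketch of how a blob can be extracted from a gray-level image by growing a 4-connected region from a seed pixel. It is only an illustration written with the standard library; it is not the vpDot or vpDot2 implementation. The names `BlobStats` and `growBlob`, the image layout `image[v][u]`, and the use of an explicit gray-level interval are assumptions made for this example.

```cpp
#include <cassert>
#include <cstddef>
#include <queue>
#include <utility>
#include <vector>

// Hypothetical result type for this sketch (not a ViSP class).
struct BlobStats {
  std::size_t area = 0; // number of pixels in the region
  double cog_u = 0.0;   // center of gravity, column coordinate
  double cog_v = 0.0;   // center of gravity, row coordinate
};

// Grow a 4-connected region from the seed pixel (seed_v, seed_u), keeping the
// pixels whose gray level lies in [gray_min, gray_max].
// The image is assumed rectangular and stored row by row: image[v][u].
BlobStats growBlob(const std::vector<std::vector<unsigned char> > &image,
                   std::size_t seed_v, std::size_t seed_u,
                   unsigned char gray_min, unsigned char gray_max)
{
  assert(!image.empty() && seed_v < image.size() && seed_u < image[0].size());
  const std::size_t height = image.size();
  const std::size_t width = image[0].size();

  BlobStats stats;
  auto inRange = [&](std::size_t v, std::size_t u) {
    return image[v][u] >= gray_min && image[v][u] <= gray_max;
  };
  if (!inRange(seed_v, seed_u)) {
    return stats; // the seed does not belong to a blob: empty result
  }

  std::vector<std::vector<bool> > visited(height, std::vector<bool>(width, false));
  std::queue<std::pair<std::size_t, std::size_t> > todo;
  todo.push(std::make_pair(seed_v, seed_u));
  visited[seed_v][seed_u] = true;

  double sum_u = 0.0, sum_v = 0.0;
  while (!todo.empty()) {
    const std::size_t v = todo.front().first;
    const std::size_t u = todo.front().second;
    todo.pop();
    ++stats.area;
    sum_u += static_cast<double>(u);
    sum_v += static_cast<double>(v);

    // Visit the four direct neighbours that lie inside the image.
    const long dv[4] = {-1, 1, 0, 0};
    const long du[4] = {0, 0, -1, 1};
    for (int k = 0; k < 4; ++k) {
      const long nv = static_cast<long>(v) + dv[k];
      const long nu = static_cast<long>(u) + du[k];
      if (nv < 0 || nu < 0 || nv >= static_cast<long>(height) || nu >= static_cast<long>(width)) {
        continue;
      }
      const std::size_t sv = static_cast<std::size_t>(nv);
      const std::size_t su = static_cast<std::size_t>(nu);
      if (!visited[sv][su] && inRange(sv, su)) {
        visited[sv][su] = true;
        todo.push(std::make_pair(sv, su));
      }
    }
  }
  stats.cog_u = sum_u / static_cast<double>(stats.area);
  stats.cog_v = sum_v / static_cast<double>(stats.area);
  return stats;
}
```

Calling `growBlob()` with a high gray-level interval extracts a white blob on a black background, and calling it with a low interval extracts a black blob on a white background, which mirrors the two cases mentioned above.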

In this tutorial we focus on the vpDot2 class, which provides more functionality than the vpDot class. As presented in the section Blob auto detection and tracking, it notably makes it possible to automate the detection of blobs that have the same characteristics as a reference blob.

The next videos show the result of ViSP blob tracker on two different objects:

VIDEO

VIDEO

Note that all the material (source code and images) described in this tutorial is part of the ViSP source code (in the tutorial/tracking/blob folder) and can be found at https://github.com/lagadic/visp/tree/master/tutorial/tracking/blob .

In the next subsections we explain how to achieve this kind of tracking, first using a FireWire live camera, then using a v4l2 live camera, which can be a USB camera or a Raspberry Pi camera module.

The following code, also available in the tutorial-blob-tracker-live.cpp file provided in the ViSP source tree, grabs images from a FireWire camera and tracks a blob. The initialization is done with a user mouse click on a pixel that belongs to the blob.

To acquire images from a FireWire camera we use the vp1394TwoGrabber class on Unix-like systems or the vp1394CMUGrabber class under Windows. These classes are described in the Tutorial: Image frame grabbing .

#include <iostream>
#include <visp3/core/vpConfig.h>

#if defined(VISP_HAVE_DISPLAY) && \
  (defined(VISP_HAVE_V4L2) || defined(VISP_HAVE_DC1394) || defined(VISP_HAVE_CMU1394) || \
   defined(VISP_HAVE_FLYCAPTURE) || defined(VISP_HAVE_REALSENSE2) || \
   ((VISP_HAVE_OPENCV_VERSION < 0x030000) && defined(HAVE_OPENCV_HIGHGUI)) || ((VISP_HAVE_OPENCV_VERSION >= 0x030000) && defined(HAVE_OPENCV_VIDEOIO)))

#ifdef VISP_HAVE_MODULE_SENSOR
#include <visp3/sensor/vp1394CMUGrabber.h>
#include <visp3/sensor/vp1394TwoGrabber.h>
#include <visp3/sensor/vpFlyCaptureGrabber.h>
#include <visp3/sensor/vpRealSense2.h>
#include <visp3/sensor/vpV4l2Grabber.h>
#endif
#include <visp3/blob/vpDot2.h>
#include <visp3/gui/vpDisplayFactory.h>

#if (VISP_HAVE_OPENCV_VERSION < 0x030000) && defined(HAVE_OPENCV_HIGHGUI)
#include <opencv2/highgui/highgui.hpp>
#elif (VISP_HAVE_OPENCV_VERSION >= 0x030000) && defined(HAVE_OPENCV_VIDEOIO)
#include <opencv2/videoio/videoio.hpp>
#endif

int main()
{
#ifdef ENABLE_VISP_NAMESPACE
#endif
  int opt_device = 0;
#if defined(VISP_HAVE_V4L2)
  std::ostringstream device;
  device << "/dev/video" << opt_device;
  std::cout << "Use Video 4 Linux grabber on device " << device.str() << std::endl;
#elif defined(VISP_HAVE_DC1394)
  (void)opt_device;
  std::cout << "Use DC1394 grabber" << std::endl;
#elif defined(VISP_HAVE_CMU1394)
  (void)opt_device;
  std::cout << "Use CMU1394 grabber" << std::endl;
#elif defined(VISP_HAVE_FLYCAPTURE)
  (void)opt_device;
  std::cout << "Use FlyCapture grabber" << std::endl;
#elif defined(VISP_HAVE_REALSENSE2)
  (void)opt_device;
  std::cout << "Use Realsense 2 grabber" << std::endl;
  rs2::config config;
  config.disable_stream(RS2_STREAM_DEPTH);
  config.disable_stream(RS2_STREAM_INFRARED);
  config.enable_stream(RS2_STREAM_COLOR, 640, 480, RS2_FORMAT_RGBA8, 30);
#elif ((VISP_HAVE_OPENCV_VERSION < 0x030000) && defined(HAVE_OPENCV_HIGHGUI)) || ((VISP_HAVE_OPENCV_VERSION >= 0x030000) && defined(HAVE_OPENCV_VIDEOIO))
  cv::VideoCapture g(opt_device);
  if (!g.isOpened()) {
    std::cout << "Failed to open the camera" << std::endl;
    return EXIT_FAILURE;
  }
  cv::Mat frame;
  g >> frame;
#endif
#if (VISP_CXX_STANDARD >= VISP_CXX_STANDARD_11)
#else
#endif
  bool init_done = false;
  bool quit = false;
  bool germ_selected = false;

  while (!quit) {
    try {
#if defined(VISP_HAVE_V4L2) || defined(VISP_HAVE_DC1394) || defined(VISP_HAVE_CMU1394) || defined(VISP_HAVE_FLYCAPTURE) || defined(VISP_HAVE_REALSENSE2)
#elif ((VISP_HAVE_OPENCV_VERSION < 0x030000) && defined(HAVE_OPENCV_HIGHGUI)) || ((VISP_HAVE_OPENCV_VERSION >= 0x030000) && defined(HAVE_OPENCV_VIDEOIO))
      g >> frame;
#endif
          quit = true;
        }
        else {
          germ_selected = true;
        }
      }
      if (germ_selected && !init_done) {
        std::cout << "Tracking initialized" << std::endl;
        init_done = true;
        germ_selected = false;
      }
      else if (init_done) {
      }
    }
      std::cout << "Tracking failed: " << e.getMessage() << std::endl;
      init_done = false;
    }
  }
#if (VISP_CXX_STANDARD < VISP_CXX_STANDARD_11)
  if (display != nullptr) {
    delete display;
  }
#endif
}
#else
int main()
{
  std::cout << "There are missing 3rd parties to run this tutorial" << std::endl;
}
#endif


From now on, we assume that you have successfully followed the Tutorial: How to create and build a project that uses ViSP and CMake on Unix or Windows and the Tutorial: Image frame grabbing . Hereafter we explain the new lines that are introduced.

Then we modify some default settings so that the contour pixels and the position of the center of gravity are drawn in overlay with a thickness of 2 pixels. This is done with the tracker's setGraphics() and setGraphicsThickness() methods.
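To illustrate what "contour pixels" means here, the following sketch lists the pixels of a blob that touch the outside of the blob. It is a standalone illustration, not the drawing code used by vpDot2: the `Mask` type, the `contourPixels` function and the choice of 4-connectivity are assumptions made for this example.

```cpp
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

// Hypothetical binary mask for this sketch: mask[v][u] is true inside the blob.
typedef std::vector<std::vector<bool> > Mask;

// Return the (v, u) coordinates of the blob pixels that have at least one
// 4-neighbour outside the blob or outside the image.
// The mask is assumed rectangular.
std::vector<std::pair<std::size_t, std::size_t> > contourPixels(const Mask &mask)
{
  std::vector<std::pair<std::size_t, std::size_t> > contour;
  if (mask.empty()) {
    return contour;
  }
  const std::size_t height = mask.size();
  const std::size_t width = mask[0].size();
  for (std::size_t v = 0; v < height; ++v) {
    assert(mask[v].size() == width); // every row must have the same length
    for (std::size_t u = 0; u < width; ++u) {
      if (!mask[v][u]) {
        continue; // background pixel
      }
      const bool up = (v > 0) && mask[v - 1][u];
      const bool down = (v + 1 < height) && mask[v + 1][u];
      const bool left = (u > 0) && mask[v][u - 1];
      const bool right = (u + 1 < width) && mask[v][u + 1];
      if (!(up && down && left && right)) {
        contour.push_back(std::make_pair(v, u)); // touches the outside
      }
    }
  }
  return contour;
}
```

For a filled square, every pixel except the interior ones is reported, which is the set an overlay would highlight.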

Then we wait for a user initialization through a mouse click event in the blob to track. Once a pixel has been selected, the tracker is initialized with initTracking().

std::cout << "Tracking initialized" << std::endl;

The tracker is now initialized. The tracking can then be performed on each new image by calling blob.track(I).

The same tutorial-blob-tracker-live.cpp file provided in the ViSP source tree can also grab images from a camera compatible with the Video for Linux Two (v4l2) driver and track a blob. Webcams and, more generally, USB cameras can be used, as can the Raspberry Pi Camera Module.

To acquire images from a v4l2 camera we use the vpV4l2Grabber class on Unix-like systems. This class is described in the Tutorial: Image frame grabbing .


The code is the same as the one presented in the previous subsection, except that here we use the vpV4l2Grabber class to grab images from USB cameras. Here we have also modified the while loop in order to catch an exception when the tracker fails:

try { blob.track(I); }

catch (...) { }

When possible, this allows the tracker to recover from a previous tracking failure (due to blur, the blob leaving the image, ...) on the next available images.
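The effect of swallowing the exception can be seen in the small simulation below. It is a sketch under stated assumptions rather than ViSP code: `ToyTracker` is a hypothetical stand-in for a blob tracker working on a one-dimensional position, and the convention that `-1` means "blob not visible" is invented for the example.

```cpp
#include <cassert>
#include <cstddef>
#include <stdexcept>
#include <vector>

// Hypothetical stand-in for a blob tracker (not the vpDot2 API): it looks for
// the blob within search_radius pixels of its last known position.
class ToyTracker
{
public:
  ToyTracker(int start_u, int search_radius) : m_u(start_u), m_radius(search_radius)
  {
    assert(search_radius >= 0);
  }

  // blob_u is the true blob position in the new frame, or -1 when the blob is
  // not visible (blur, blob outside the image...).
  void track(int blob_u)
  {
    if (blob_u < 0 || blob_u > m_u + m_radius || blob_u < m_u - m_radius) {
      throw std::runtime_error("blob lost");
    }
    m_u = blob_u; // success: remember the new position
  }

  int position() const { return m_u; }

private:
  int m_u;
  int m_radius;
};

// Run the tracker over a sequence of frames, catching failures so that the
// loop keeps going. Returns the number of frames tracked successfully.
std::size_t trackSequence(ToyTracker &tracker, const std::vector<int> &frames)
{
  std::size_t tracked = 0;
  for (std::size_t i = 0; i < frames.size(); ++i) {
    try {
      tracker.track(frames[i]);
      ++tracked;
    }
    catch (const std::runtime_error &) {
      // Tracking failed on this frame: keep the last known position and
      // try again on the next frame.
    }
  }
  return tracked;
}
```

Because the last known position is kept after a failure, the tracker picks the blob up again as soon as it reappears close enough to where it was last seen. If it reappears too far away, tracking keeps failing, which is why recovery is only possible in some cases.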

The following example, also available in the tutorial-blob-auto-tracker.cpp file provided in the ViSP source tree, shows how to detect blobs in the first image and then track all the detected blobs. This functionality is only available with the vpDot2 class. Here we consider an image that is provided in the ViSP source tree.

#include <visp3/core/vpConfig.h>
#include <visp3/blob/vpDot2.h>
#include <visp3/gui/vpDisplayFactory.h>
#include <visp3/io/vpImageIo.h>

int main()
{
#ifdef ENABLE_VISP_NAMESPACE
#endif
#if (VISP_CXX_STANDARD >= VISP_CXX_STANDARD_11)
  std::shared_ptr<vpDisplay> display;
#else
#endif
  try {
    bool learn = false;
#if defined(VISP_HAVE_DISPLAY)
#if (VISP_CXX_STANDARD >= VISP_CXX_STANDARD_11)
#else
#endif
#else
    std::cout << "No image viewer is available..." << std::endl;
#endif
    if (learn) {
      std::cout << "Blob characteristics: " << std::endl;
      std::cout << " width : " << blob.getWidth() << std::endl;
      std::cout << " height: " << blob.getHeight() << std::endl;
#if VISP_VERSION_INT > VP_VERSION_INT(2, 7, 0)
      std::cout << " area: " << blob.getArea() << std::endl;
#endif
    }
    else {
#if VISP_VERSION_INT > VP_VERSION_INT(2, 7, 0)
#endif
    }
    std::list<vpDot2> blob_list;
    if (learn) {
      blob_list.push_back(blob);
    }
    std::cout << "Number of auto detected blob: " << blob_list.size() << std::endl;
    std::cout << "A click to exit..." << std::endl;
    while (1) {
      for (std::list<vpDot2>::iterator it = blob_list.begin(); it != blob_list.end(); ++it) {
        (*it).setGraphics(true);
        (*it).setGraphicsThickness(3);
        (*it).track(I);
      }
        break;
    }
  }
    std::cout << "Catch an exception: " << e << std::endl;
  }
#if (VISP_CXX_STANDARD < VISP_CXX_STANDARD_11)
  if (display != nullptr) {
    delete display;
  }
#endif
}


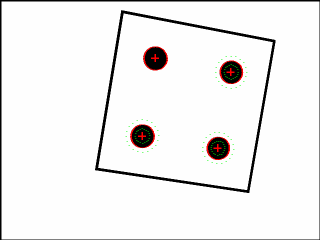

Here is a screen shot of the resulting program :

And here is the detailed explanation of the source :

First we create an instance of the tracker.

Then, two cases are handled. The first case, when learn is set to true, consists in learning the blob characteristics. The user has to click in a blob that serves as the reference blob. The size, area, minimum and maximum gray levels, and some precision parameters will then be used to search for similar blobs in the whole image.

if (learn) {
  std::cout << "Blob characteristics: " << std::endl;
  std::cout << " width : " << blob.getWidth() << std::endl;
  std::cout << " height: " << blob.getHeight() << std::endl;
#if VISP_VERSION_INT > VP_VERSION_INT(2, 7, 0)
  std::cout << " area: " << blob.getArea() << std::endl;
#endif
}

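If you have a precise idea of the dimensions of the blob to search for, the second case consists in setting the reference characteristics directly, using vpDot2 setters such as setWidth(), setHeight(), setArea(), setGrayLevelMin(), setGrayLevelMax(), setGrayLevelPrecision(), setSizePrecision() and setEllipsoidShapePrecision().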

else {
#if VISP_VERSION_INT > VP_VERSION_INT(2, 7, 0)
#endif
}
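Whichever way the reference characteristics are obtained, they act as a template against which candidate regions are compared. The sketch below shows one plausible way to apply a size precision. It is an assumption made for illustration and not the criterion implemented in vpDot2: the `Candidate` type, the `closeEnough` and `keepSimilar` functions, and the relative-tolerance formula are all hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <list>

// Hypothetical description of a candidate region (not a ViSP type).
struct Candidate {
  double width;
  double height;
  double area;
};

// Return true if value is within a relative tolerance of reference.
// precision lies in (0, 1]: 1 demands an exact match, lower values are
// more permissive. This formula is an assumption made for the sketch.
bool closeEnough(double value, double reference, double precision)
{
  assert(precision > 0.0 && precision <= 1.0);
  const double tolerance = (1.0 - precision) * reference;
  return std::fabs(value - reference) <= tolerance;
}

// Keep only the candidates whose width, height and area are all close
// enough to those of the reference blob.
std::list<Candidate> keepSimilar(const std::list<Candidate> &candidates,
                                 const Candidate &reference, double size_precision)
{
  std::list<Candidate> kept;
  for (std::list<Candidate>::const_iterator it = candidates.begin(); it != candidates.end(); ++it) {
    if (closeEnough(it->width, reference.width, size_precision) &&
        closeEnough(it->height, reference.height, size_precision) &&
        closeEnough(it->area, reference.area, size_precision)) {
      kept.push_back(*it);
    }
  }
  return kept;
}
```

With such a scheme, lowering the precision widens the set of accepted blobs, while a precision of 1 only accepts regions identical in size to the reference.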

Once the blob characteristics are known, searching for similar blobs in the image is done with a call to searchDotsInArea(), which fills the following list:

std::list<vpDot2> blob_list;

Here blob_list contains the list of the blobs that are detected in the image I. When learning is enabled, the blob that is tracked is not in the list of auto detected blobs. We add it to the end of the list:

if (learn) {
  blob_list.push_back(blob);
}

Finally, when a new image is available we do the tracking of all the blobs:

for (std::list<vpDot2>::iterator it = blob_list.begin(); it != blob_list.end(); ++it) {
  (*it).setGraphics(true);
  (*it).setGraphicsThickness(3);
  (*it).track(I);
}
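One question this loop leaves open is what to do when a single blob of the list can no longer be tracked. A possible policy, shown here purely as a sketch and not as what the tutorial code does, is to drop the lost blob and keep tracking the others. `ToyBlob` and `trackAll` are hypothetical names, and the failure model (a counter of remaining frames) is invented for the example.

```cpp
#include <cassert>
#include <cstddef>
#include <list>
#include <stdexcept>

// Hypothetical stand-in for a tracked blob (not vpDot2): tracking succeeds
// for a fixed number of frames, then fails.
struct ToyBlob {
  int id;
  int frames_left; // number of frames during which tracking still succeeds

  void track()
  {
    if (frames_left <= 0) {
      throw std::runtime_error("blob lost");
    }
    --frames_left;
  }
};

// Track every blob of the list once and erase the ones whose tracking fails.
// Returns the number of blobs that were removed.
std::size_t trackAll(std::list<ToyBlob> &blobs)
{
  const std::size_t initial = blobs.size();
  std::size_t removed = 0;
  std::list<ToyBlob>::iterator it = blobs.begin();
  while (it != blobs.end()) {
    try {
      it->track();
      ++it;
    }
    catch (const std::runtime_error &) {
      it = blobs.erase(it); // erase() returns the iterator to the next element
      ++removed;
    }
  }
  assert(blobs.size() + removed == initial);
  return removed;
}
```

Using the iterator returned by `erase()` keeps the traversal valid while elements are removed, which is the main point to get right when a `std::list` is modified during iteration.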

You are now ready to see the next Tutorial: Keypoint tracking .