Visual Servoing Platform

version 3.6.1 under development (2024-12-17)

Before following this tutorial, we suggest that you follow Tutorial: Markerless generic model-based tracking using a color camera to learn the basics of the tracker.

This tutorial describes how to extend the generic model-based tracker implemented in the vpMbGenericTracker class to use multiple camera views acquired simultaneously by a stereo camera. Our implementation does not limit the number of cameras observing the object, or some parts of the object, to track. It tracks the object in the images viewed by a set of cameras while providing its 3D localization. Calibrated cameras are required: the intrinsic parameters of each camera and the extrinsic transformation between the reference camera and each of the other cameras.

The mbt ViSP module tracks a markerless object using the knowledge of its CAD model. Considered objects have to be modeled by segment, circle or cylinder primitives. The model of the object can be defined in vrml format (except for circles) or in cao (our own format).

The visual features that can be considered by multiple cameras are the moving-edges, the keypoints (KLT features) or a combination of them in a hybrid scheme when the object is textured and has visible edges (see Features overview). They are the same as those used for a single camera.

The vpMbGenericTracker class allows the tracking of the same object with two or more cameras. The main advantages of this configuration with respect to the monocular camera case (see Tutorial: Markerless generic model-based tracking using a color camera) concern:

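To achieve this, the following information is required: the intrinsic parameters of each camera, and the transformation between each camera and the reference camera.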

In the following sections, we consider the tracking of a tea box modeled in cao format. A stereo camera sees this object. The following video shows the tracking performed with vpMbGenericTracker. In this example, the fixed cameras located on the Romeo Humanoid robot head captured the images.

This other video shows the behavior of the hybrid tracking using moving-edges and keypoints as visual features.

The next sections highlight how to adapt your code to use multiple cameras with the generic model-based tracker. As only the new methods dedicated to multiple-view tracking are presented, we highly recommend following Tutorial: Markerless generic model-based tracking using a color camera first, in order to be familiar with the generic model-based tracking concepts and with the configuration part.

Note that all the material (source code, input video, CAD model or XML settings files) described in this tutorial is part of the ViSP source code (in the tracking/model-based/generic-stereo folder) and can be found at https://github.com/lagadic/visp/tree/master/tracking/model-based/generic-stereo.

To start with the generic markerless model-based tracker using a stereo camera, we recommend studying the tutorial-mb-generic-tracker-stereo.cpp source code that is given and explained below.

The generic model-based tracker available for multiple-view tracking relies on the same tracker as in the monocular case. Our implementation in the vpMbGenericTracker class makes it easy to extend the usage of the model-based tracker to multiple cameras while preserving the same behavior as the tracking in the monocular configuration.

Each tracker is stored in a map whose key is the name of the camera that the tracker processes. By default, the camera names are set to:

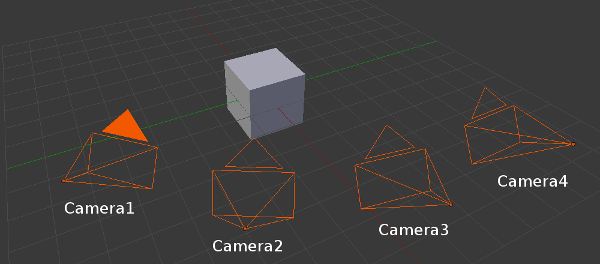

To deal with multiple cameras, in the virtual visual servoing control law we concatenate all the interaction matrices and residual vectors and transform them in a single reference camera frame to compute the reference camera velocity. Thus, we have to know the transformation matrix between each camera and the reference camera.

For example, if the reference camera is "Camera1" ($c_1$), we need the following information: ${}^{c_2}\mathbf{M}_{c_1}, {}^{c_3}\mathbf{M}_{c_1}, \dots, {}^{c_n}\mathbf{M}_{c_1}$.
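The concatenation described above can be sketched in standard visual servoing notation. This is only an illustrative derivation, not a transcription of the tracker's internals: the symbols $\mathbf{e}_i$ (residual vector of camera $i$), $\mathbf{L}_i$ (its interaction matrix), ${}^{c_i}\mathbf{V}_{c_1}$ (velocity twist matrix built from ${}^{c_i}\mathbf{M}_{c_1}$), $\mathbf{v}_{c_1}$ (velocity of the reference camera) and the gain $\lambda$ are assumptions introduced here, and any robust weighting of the residuals is left out.

```latex
% Velocity of camera i expressed from the reference camera velocity
\dot{\mathbf{e}}_i = \mathbf{L}_i \, \mathbf{v}_{c_i},
\qquad
\mathbf{v}_{c_i} = {}^{c_i}\mathbf{V}_{c_1} \, \mathbf{v}_{c_1},
\qquad
{}^{c_i}\mathbf{V}_{c_1} =
\begin{bmatrix}
{}^{c_i}\mathbf{R}_{c_1} & [{}^{c_i}\mathbf{t}_{c_1}]_\times \, {}^{c_i}\mathbf{R}_{c_1} \\
\mathbf{0}_{3\times 3} & {}^{c_i}\mathbf{R}_{c_1}
\end{bmatrix}

% Stacked residual vector and interaction matrix, and the resulting control law
\mathbf{e} =
\begin{bmatrix} \mathbf{e}_1 \\ \vdots \\ \mathbf{e}_n \end{bmatrix},
\qquad
\mathbf{L} =
\begin{bmatrix}
\mathbf{L}_1 \, {}^{c_1}\mathbf{V}_{c_1} \\ \vdots \\ \mathbf{L}_n \, {}^{c_n}\mathbf{V}_{c_1}
\end{bmatrix},
\qquad
\mathbf{v}_{c_1} = -\lambda \, \mathbf{L}^{+} \, \mathbf{e}
```

Here ${}^{c_i}\mathbf{R}_{c_1}$ and ${}^{c_i}\mathbf{t}_{c_1}$ are the rotation and translation parts of ${}^{c_i}\mathbf{M}_{c_1}$, and ${}^{c_1}\mathbf{V}_{c_1}$ is the identity. This is why every camera other than the reference needs a known transformation to the reference camera.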

The tutorial-mb-generic-tracker-stereo.cpp example uses the following data as input:

- teabox_left.mpg and teabox_right.mpg are the default videos; they can be changed using the --name command line option. They need to be synchronized and correspond to the images acquired by a left and a right camera.
- teabox_left.xml and teabox_right.xml contain the tracker settings and camera parameters. See Settings from an XML file to know more about the content of these files. When using different cameras, the intrinsic camera parameters differ, so the content of these *.xml files may differ. It is also possible to set these parameters in the code without using an xml file; see Moving-edges settings and Keypoints settings.
- teabox_left.cao and teabox_right.cao contain the CAD models. As is the case here, the CAD models are generally the same in a stereo configuration. See the Tracker CAD model section to learn how the teabox is modeled and the CAD model in cao format section to learn how to model another object.
- *.init files contain the 3D coordinates of some points used to compute an initial pose which serves to initialize the tracker. The user then has to click in the left and right images on the corresponding 2D points. The default files are named teabox_left.init and teabox_right.init. In our case they have the same content, but depending on the point of view it can sometimes be useful that they differ, to ensure that the 3D points are visible during initialization. The content of these files is detailed in the Source code explained section.
- *.ppm files may help the user to remember the location of the corresponding 3D points specified in the *.init file. By default we use teabox_left.ppm and teabox_right.ppm.
- cRightMcLeft.txt expresses the transformation between the right camera frame and the left camera frame. Using this transformation we can express in the right camera frame the 3D coordinates of a point given in the left camera frame.

As an output the tracker provides two poses, ${}^{c_1}\mathbf{M}_o$ for the left camera and ${}^{c_2}\mathbf{M}_o$ for the right camera. Each is a 4 by 4 matrix that corresponds to the geometric transformation between the frame attached to the object (in our case the tea box) and the frame attached to the corresponding camera. The poses are returned as vpHomogeneousMatrix containers.
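To make the role of cRightMcLeft.txt and of the two output poses more concrete, here is a minimal standard-library sketch of how a homogeneous transformation is composed and applied. It does not use ViSP: `Mat4`, `Point3`, `makeTranslation`, `compose` and `transformPoint` are hypothetical names introduced for illustration, with `Mat4` standing in for vpHomogeneousMatrix. It assumes consistent extrinsics, in which case the right camera pose is expected to equal the stereo transformation multiplied by the left camera pose.

```cpp
#include <array>
#include <cassert>
#include <cstddef>

// Row-major 4x4 homogeneous matrix; a plain stand-in for vpHomogeneousMatrix.
using Mat4 = std::array<std::array<double, 4>, 4>;
using Point3 = std::array<double, 3>;

// Identity rotation combined with the translation (tx, ty, tz).
Mat4 makeTranslation(double tx, double ty, double tz)
{
  Mat4 m{}; // value-initialized: all zeros
  for (std::size_t i = 0; i < 4; ++i) {
    m[i][i] = 1.0;
  }
  m[0][3] = tx;
  m[1][3] = ty;
  m[2][3] = tz;
  return m;
}

// Matrix product a * b; as a transformation it applies b first, then a.
Mat4 compose(const Mat4 &a, const Mat4 &b)
{
  Mat4 r{};
  for (std::size_t i = 0; i < 4; ++i) {
    for (std::size_t j = 0; j < 4; ++j) {
      for (std::size_t k = 0; k < 4; ++k) {
        r[i][j] += a[i][k] * b[k][j];
      }
    }
  }
  return r;
}

// Express in the destination frame of m a point p given in its source frame.
Point3 transformPoint(const Mat4 &m, const Point3 &p)
{
  assert(m[3][3] == 1.0); // expects a homogeneous matrix with unit scale
  Point3 q{};
  for (std::size_t i = 0; i < 3; ++i) {
    q[i] = m[i][0] * p[0] + m[i][1] * p[1] + m[i][2] * p[2] + m[i][3];
  }
  return q;
}
```

For instance, with an object 0.5 m in front of the left camera, `makeTranslation(0.0, 0.0, 0.5)`, and a stereo transformation `makeTranslation(-0.25, 0.0, 0.0)`, calling `compose(cRightMcLeft, cLeftMo)` and transforming the object origin gives the point (-0.25, 0, 0.5) in the right camera frame. The numeric values are made up for the example.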

The following example comes from tutorial-mb-generic-tracker-stereo.cpp and tracks a tea box modeled in cao format. In this example we consider a stereo configuration with images from a left camera and images from a right camera.

Once built, to choose which tracker to use on each camera, run the binary with the following argument:

For example, to use moving-edges features on images acquired by the left camera and a hybrid scheme on images acquired by the right camera, run:

The source code is the following:

The previous source code shows how to use model-based tracking on stereo images using the standard procedure to configure the tracker:

- load the configuration files (*.xml and *.init) for each camera view
- load the 3D model (*.cao) for each camera view

Below we give an explanation of the source code.

First the vpMbGenericTracker header is included:

We declare two images for the left and right camera views.

To construct a stereo tracker, we have to specify, for each camera, which features the tracker should consider; this is given as an argument to the tracker constructor. That is why we create a vector of size 2 that contains the required vpMbGenericTracker::vpTrackerType:
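As a rough illustration of the vector described above, the following standard-library sketch builds one feature-type entry per camera. It does not include ViSP: the `TrackerType` enum is a stand-in that mirrors the EDGE_TRACKER and KLT_TRACKER names of vpMbGenericTracker::vpTrackerType with assumed bit values, and the 1/2/3 option convention and the helper names `toTrackerType` and `makeTrackerTypes` are hypothetical.

```cpp
#include <cassert>
#include <vector>

// Stand-in for the vpMbGenericTracker::vpTrackerType flags (assumed bit values).
enum TrackerType {
  EDGE_TRACKER = 1 << 0, // moving-edges features
  KLT_TRACKER = 1 << 1   // KLT keypoint features
};

// Map a hypothetical user option (1 = edges, 2 = keypoints, 3 = hybrid) to flags.
int toTrackerType(int option)
{
  switch (option) {
  case 1:
    return EDGE_TRACKER;
  case 2:
    return KLT_TRACKER;
  case 3:
    return EDGE_TRACKER | KLT_TRACKER; // hybrid: both feature types combined
  default:
    return EDGE_TRACKER; // fall back to moving-edges for unknown values
  }
}

// One entry per camera: index 0 for the left view, index 1 for the right view.
std::vector<int> makeTrackerTypes(int leftOption, int rightOption)
{
  std::vector<int> trackerTypes(2);
  trackerTypes[0] = toTrackerType(leftOption);
  trackerTypes[1] = toTrackerType(rightOption);
  assert(trackerTypes.size() == 2);
  return trackerTypes;
}
```

With this sketch, `makeTrackerTypes(1, 3)` selects moving-edges for the left camera and the hybrid scheme for the right camera. In the tutorial, a vector of this shape is what gets passed to the tracker constructor.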

All the configuration parameters for the tracker are stored in xml configuration files. To load the different files, which also contain the intrinsic camera parameters, we use:

To load the 3D object model, we use:

We can also use the following setting that enables the display of the features used during the tracking:

We have to set the transformation matrices between the cameras and the reference camera to be able to compute the control law in a reference camera frame. In the code we consider the left camera with the name "Camera1" as the reference camera. For the right camera with the name "Camera2" we have to set the transformation (${}^{c_2}\mathbf{M}_{c_1}$). This transformation is read from the cRightMcLeft.txt file. Since our left and right cameras are not moving, this transformation is constant and does not have to be updated in the tracking loop:
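The bookkeeping behind this step can be sketched with the standard library only. The sketch assumes that the transformation file holds 16 whitespace-separated values in row-major order, and that the reference camera is associated with the identity transformation; both are assumptions, as are the names `Mat4`, `identity`, `readMat4`, `parseMat4` and `makeCameraTransformations`, which are not part of ViSP. Only the camera names "Camera1" and "Camera2" come from the text above.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <istream>
#include <map>
#include <sstream>
#include <string>

// Row-major 4x4 homogeneous matrix; a plain stand-in for vpHomogeneousMatrix.
using Mat4 = std::array<std::array<double, 4>, 4>;

Mat4 identity()
{
  Mat4 m{}; // all zeros
  for (std::size_t i = 0; i < 4; ++i) {
    m[i][i] = 1.0;
  }
  return m;
}

// Read 16 whitespace-separated values in row-major order (assumed layout).
// Returns false if the stream does not provide 16 numbers.
bool readMat4(std::istream &in, Mat4 &m)
{
  for (std::size_t i = 0; i < 4; ++i) {
    for (std::size_t j = 0; j < 4; ++j) {
      if (!(in >> m[i][j])) {
        return false;
      }
    }
  }
  return true;
}

// Convenience wrapper to parse the same layout from a string.
bool parseMat4(const std::string &text, Mat4 &m)
{
  std::istringstream in(text);
  return readMat4(in, m);
}

// Camera name -> transformation to the reference camera.
// The reference camera ("Camera1", left) maps to the identity.
std::map<std::string, Mat4> makeCameraTransformations(const Mat4 &cRightMcLeft)
{
  assert(cRightMcLeft[3][3] == 1.0); // expects a homogeneous matrix
  std::map<std::string, Mat4> transformations;
  transformations["Camera1"] = identity();
  transformations["Camera2"] = cRightMcLeft;
  return transformations;
}
```

To read from a file, `readMat4` can be given a `std::ifstream` opened on the transformation file. Since the cameras are fixed in this example, the map would be built once, before the tracking loop.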

The initial pose is set by clicking on specific points in the image:

The tracking is done by:

The poses for each camera are retrieved with:

To display the model with the estimated pose, we use:

When the cameras move, the principle remains the same as with static cameras. You have to supply the camera transformation matrices to the tracker each time the cameras move and before calling the track method:

This information can be obtained from the robot kinematics or from different kinds of sensors.
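As an illustration of the kinematics case, the sketch below recomputes the transformation between two cameras from their poses in a common frame. It is a standard-library sketch under stated assumptions, not ViSP code: the common frame `h` (for example a robot head frame), the inputs `hMc1` and `hMc2`, and the helpers `identity`, `inverseRigid`, `multiply` and `relativeCameraPose` are all hypothetical. It relies on the relation c2Mc1 = (hMc2)^-1 * hMc1, which holds for rigid transformations.

```cpp
#include <array>
#include <cassert>
#include <cstddef>

// Row-major 4x4 homogeneous matrix; a plain stand-in for vpHomogeneousMatrix.
using Mat4 = std::array<std::array<double, 4>, 4>;

Mat4 identity()
{
  Mat4 m{}; // all zeros
  for (std::size_t i = 0; i < 4; ++i) {
    m[i][i] = 1.0;
  }
  return m;
}

// Inverse of a rigid transformation [R t; 0 1], which is [R^T  -R^T t; 0 1].
Mat4 inverseRigid(const Mat4 &m)
{
  assert(m[3][3] == 1.0); // expects a homogeneous matrix
  Mat4 inv{};
  for (std::size_t i = 0; i < 3; ++i) {
    for (std::size_t j = 0; j < 3; ++j) {
      inv[i][j] = m[j][i]; // transpose of the rotation block
    }
    inv[i][3] = -(m[0][i] * m[0][3] + m[1][i] * m[1][3] + m[2][i] * m[2][3]);
  }
  inv[3][3] = 1.0;
  return inv;
}

// Matrix product a * b.
Mat4 multiply(const Mat4 &a, const Mat4 &b)
{
  Mat4 r{};
  for (std::size_t i = 0; i < 4; ++i) {
    for (std::size_t j = 0; j < 4; ++j) {
      for (std::size_t k = 0; k < 4; ++k) {
        r[i][j] += a[i][k] * b[k][j];
      }
    }
  }
  return r;
}

// Transformation from camera 1 frame to camera 2 frame, given both camera
// poses expressed in the same frame h: c2Mc1 = (hMc2)^-1 * hMc1.
Mat4 relativeCameraPose(const Mat4 &hMc1, const Mat4 &hMc2)
{
  return multiply(inverseRigid(hMc2), hMc1);
}
```

In a tracking loop with moving cameras, a value computed this way would be handed to the tracker at each iteration, before the call to the track method.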

The following video shows the stereo hybrid model-based tracking based on object edges and KLT features located on visible faces. The result of the tracking is then used to servo the Romeo humanoid robot eyes to gaze toward the object. The images were captured by cameras located in the Romeo eyes.

You are now ready to see the next Tutorial: Markerless generic model-based tracking using an RGB-D camera.