
Visual Servoing Platform

version 3.6.1 under development (2025-02-01)


This tutorial explains how to do image-based visual servoing with a Parrot Bebop 2 drone on Ubuntu or macOS.

The following material is necessary:

ViSP must be built with OpenCV support if you want to get the video streamed by the drone, which needs to be decoded.

To use the Parrot Bebop 2 drone with ViSP, you first need to build Parrot's SDK, ARDroneSDK3 (as explained here):

The following steps build ARSDK3 on Ubuntu (tested on 18.04).

1. Get the SDK source code

Create a workspace.

$ cd ${VISP_WS}

$ mkdir -p 3rdparty/ARDroneSDK3 && cd 3rdparty/ARDroneSDK3

Initialize the repo.

$ sudo apt install repo

$ repo init -u https://github.com/Parrot-Developers/arsdk_manifests.git -m release.xml

You can then download all the repositories automatically by executing the following command:

$ repo sync

2. Build the SDK

Install the required third-party dependencies:

$ sudo apt-get install git build-essential autoconf libtool libavahi-client-dev \
    libavcodec-dev libavformat-dev libswscale-dev libncurses5-dev mplayer

Build the SDK:

$ ./build.sh -p arsdk-native -t build-sdk -j

The output will be located in ${VISP_WS}/3rdparty/ARDroneSDK3/out/arsdk-native/staging/usr

If the build fails with the following error:

/bin/bash: line 1: python: command not found
make: *** [$VISP_WS/3rdparty/ARDroneSDK3/build/alchemy/main.mk:306: $VISP_WS/3rdparty/ARDroneSDK3/out/arsdk-native/build/libARMavlink/parrot.xml.done] Error 127
MAKE ERROR DETECTED
[E] Task 'build-sdk' failed (Command failed (returncode=254))

you need to install the following package, which makes the python command point to Python 3:

$ sudo apt-get install python-is-python3

If the build then fails with the following error:

File "$VISP_WS/3rdparty/ARDroneSDK3/out/arsdk-native/staging-host/usr/lib/mavgen/pymavlink/generator/mavcrc.py", line 28, in accumulate_str
    bytes.fromstring(buf)
AttributeError: 'array.array' object has no attribute 'fromstring'

you may edit $VISP_WS/3rdparty/ARDroneSDK3/out/arsdk-native/staging-host/usr/lib/mavgen/pymavlink/generator/mavcrc.py and modify accumulate_str() so that it no longer calls bytes.fromstring(buf): array.array.fromstring() was removed in Python 3.9, its replacement being array.array.frombytes().

3. Set ARSDK_DIR environment variable

In order for ViSP to find ARDroneSDK3, set the ARSDK_DIR environment variable:

$ export ARSDK_DIR=${VISP_WS}/3rdparty/ARDroneSDK3

4. Modify LD_LIBRARY_PATH environment variable to detect ARDroneSDK3 libraries

So that ViSP binaries can find the ARDroneSDK3 libraries, set LD_LIBRARY_PATH:

$ export LD_LIBRARY_PATH=${LD_LIBRARY_PATH}:${ARSDK_DIR}/out/arsdk-native/staging/usr/lib
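To check this setting, note that LD_LIBRARY_PATH is a colon-separated list of directories, and the ARSDK3 libraries are found through it only if ${ARSDK_DIR}/out/arsdk-native/staging/usr/lib is one of its entries. The following C++ sketch performs that check; searchPathContains() and arsdkLibrariesVisible() are hypothetical helpers, not part of ViSP or ARSDK3, and the same logic applies to DYLD_LIBRARY_PATH on macOS.

```cpp
#include <cassert>
#include <cstdlib>
#include <sstream>
#include <string>

// Hypothetical helper: returns true if `dir` is one of the entries of a
// colon-separated search path such as LD_LIBRARY_PATH or DYLD_LIBRARY_PATH.
bool searchPathContains(const std::string &pathList, const std::string &dir)
{
  assert(!dir.empty() && "directory to look for must not be empty");
  std::istringstream stream(pathList);
  std::string entry;
  while (std::getline(stream, entry, ':')) {
    // Ignore one trailing '/' so that ".../usr/lib/" matches ".../usr/lib".
    if (entry.size() > 1 && entry.back() == '/') {
      entry.pop_back();
    }
    if (entry == dir) {
      return true;
    }
  }
  return false;
}

// Hypothetical helper: builds the ARSDK3 library directory from ARSDK_DIR and
// looks for it in the given search-path variable. Returns false if either
// environment variable is unset.
bool arsdkLibrariesVisible(const char *searchPathVar = "LD_LIBRARY_PATH")
{
  const char *arsdkDir = std::getenv("ARSDK_DIR");
  const char *searchPath = std::getenv(searchPathVar);
  if (arsdkDir == nullptr || searchPath == nullptr) {
    return false;
  }
  const std::string libDir = std::string(arsdkDir) + "/out/arsdk-native/staging/usr/lib";
  return searchPathContains(searchPath, libDir);
}
```

For example, arsdkLibrariesVisible("DYLD_LIBRARY_PATH") runs the same check on macOS.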

The following steps build ARSDK3 on macOS Mojave 10.14.5.

1. Get the SDK source code

Create a workspace.

$ cd ${VISP_WS}

$ mkdir -p 3rdparty/ARDroneSDK3 && cd 3rdparty/ARDroneSDK3

Initialize the repo.

$ brew install repo

$ repo init -u https://github.com/Parrot-Developers/arsdk_manifests.git -m release.xml

You can then download all the repositories automatically by executing the following command:

$ repo sync

2. Build the SDK

Install the required third-party dependencies:

$ brew install ffmpeg

Build the SDK:

$ ./build.sh -p arsdk-native -t build-sdk -j

The output will be located in ${VISP_WS}/3rdparty/ARDroneSDK3/out/arsdk-native/staging/usr

3. Set ARSDK_DIR environment variable

In order for ViSP to find ARDroneSDK3, set the ARSDK_DIR environment variable:

$ export ARSDK_DIR=${VISP_WS}/3rdparty/ARDroneSDK3

4. Modify DYLD_LIBRARY_PATH environment variable to detect ARDroneSDK3 libraries

So that ViSP binaries can find the ARDroneSDK3 libraries, set DYLD_LIBRARY_PATH:

$ export DYLD_LIBRARY_PATH=${DYLD_LIBRARY_PATH}:${ARSDK_DIR}/out/arsdk-native/staging/usr/lib

For ViSP to take the fresh ARSDK3 installation into account, you need to configure and build ViSP again.

$ cd $VISP_WS/visp-build

$ cmake ../visp

At this point, you should see in the Real robots section of the CMake output that ARSDK and ffmpeg are enabled:

Real robots:
...
Use Parrot ARSDK: yes
Use ffmpeg: yes
...

Then build ViSP:

$ make -j4

An example of image-based visual servoing is implemented in servoBebop2.cpp.

The corresponding source code and CMakeLists.txt file can be found in https://github.com/lagadic/visp/tree/master/example/servo-bebop2.

First, to get the basics of image-based visual servoing, you can read Tutorial: Image-based visual servo.

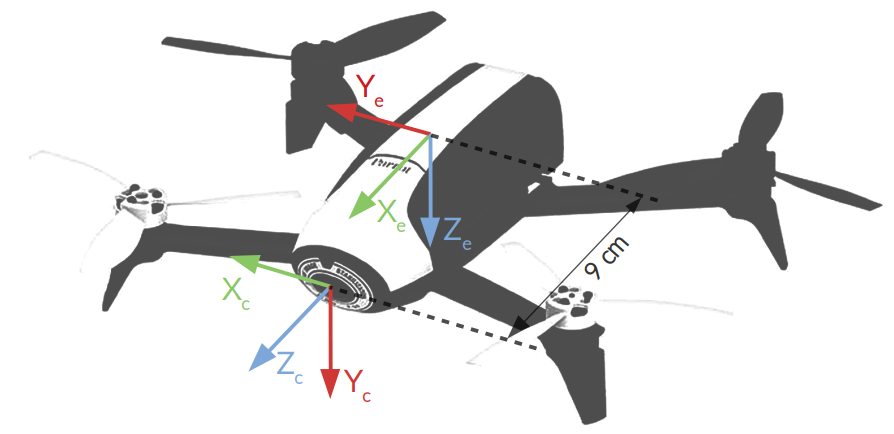

The following image shows the frames attached to the drone:

In the servoBebop2.cpp example, we use four visual features for the servoing in order to control the four drone dof. These visual features are:

The corresponding controller is given by:

![]()

where:

To make the relation between this controller description and the code, check the comments in servoBebop2.cpp.
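The reasoning behind such a controller can be illustrated with the classical IBVS law from Tutorial: Image-based visual servo, v = -λ L⁺ (s - s*), where s holds the current features, s* the desired ones, L the interaction matrix and λ a positive gain. The following C++ sketch assumes a square, invertible 4x4 matrix L already expressed in the four controlled dof, so that L⁺ reduces to L⁻¹ and v is obtained by solving L x = s - s*. It is not the servoBebop2.cpp implementation (see that file for the actual controller); Vec4, Mat4, solve4() and ibvsVelocity() are hypothetical names.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <stdexcept>
#include <utility>

using Vec4 = std::array<double, 4>;
using Mat4 = std::array<Vec4, 4>;

// Solves L x = b by Gaussian elimination with partial pivoting.
// Throws std::runtime_error if L is (numerically) singular.
Vec4 solve4(Mat4 L, Vec4 b)
{
  const std::size_t n = 4;
  for (std::size_t col = 0; col < n; ++col) {
    // Use the row with the largest pivot to limit rounding errors.
    std::size_t pivot = col;
    for (std::size_t row = col + 1; row < n; ++row) {
      if (std::fabs(L[row][col]) > std::fabs(L[pivot][col])) {
        pivot = row;
      }
    }
    if (std::fabs(L[pivot][col]) < 1e-12) {
      throw std::runtime_error("interaction matrix is singular");
    }
    std::swap(L[col], L[pivot]);
    std::swap(b[col], b[pivot]);
    // Eliminate the entries below the pivot.
    for (std::size_t row = col + 1; row < n; ++row) {
      const double factor = L[row][col] / L[col][col];
      for (std::size_t k = col; k < n; ++k) {
        L[row][k] -= factor * L[col][k];
      }
      b[row] -= factor * b[col];
    }
  }
  // Back substitution on the upper-triangular system.
  Vec4 x{};
  for (std::size_t i = n; i-- > 0;) {
    double sum = b[i];
    for (std::size_t k = i + 1; k < n; ++k) {
      sum -= L[i][k] * x[k];
    }
    x[i] = sum / L[i][i];
  }
  return x;
}

// Classical IBVS law v = -lambda * L^+ * (s - s*), with L square and
// invertible so that L^+ = L^-1.
Vec4 ibvsVelocity(const Mat4 &L, const Vec4 &s, const Vec4 &sStar, double lambda)
{
  assert(lambda > 0.0);
  Vec4 error{};
  for (std::size_t i = 0; i < 4; ++i) {
    error[i] = s[i] - sStar[i];
  }
  Vec4 v = solve4(L, error);  // v = L^-1 (s - s*)
  for (double &vi : v) {
    vi *= -lambda;
  }
  return v;
}
```

With L equal to the identity, λ = 0.5 and s - s* = (1, 2, 3, 4), the sketch returns v = (-0.5, -1, -1.5, -2): each dof moves against its own error, which yields the exponential decrease of the error that IBVS aims at.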

The next step is now to run the image-based visual servoing example implemented in servoBebop2.cpp.

If you built ViSP with ffmpeg and Parrot ARSDK3 support, the corresponding binary is available in ${VISP_WS}/visp-build/example/servo-bebop2 folder.

$ cd ${VISP_WS}/visp-build/example/servo-bebop2

$ ./servoBebop2 --tag_size 0.14

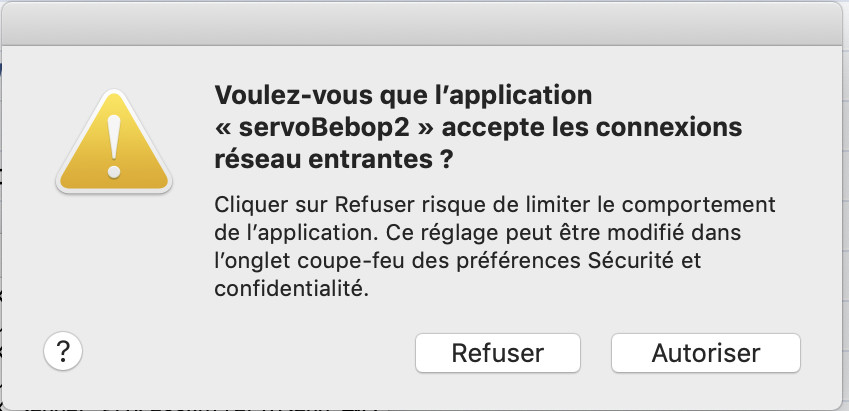

On macOS, you may need to allow servoBebop2 to accept incoming network connections:

Running the previous command should give results similar to the ones presented in the video:

Run ./servoBebop2 --help to see the available command-line options:

--ip allows you to specify the IP address of the drone on the network (default is 192.168.42.1). This is useful if you changed your drone IP address (see Changing Bebop 2 IP address), for instance to fly multiple drones at once.

--distance_to_tag 1.5 allows you to specify the desired distance (in meters) to the tag for the drone servoing. Values between 0.5 and 2 are recommended (default is 1 meter).

--intrinsic ~/path-to-calibration-file/camera.xml allows you to specify the intrinsic camera calibration parameters. This file can be obtained by completing Tutorial: Camera intrinsic calibration. Without this option, default parameters that are good enough for a trial are used.

--hd_stream enables the HD 720p stream resolution instead of the default 480p. This increases the range and accuracy of the tag detection, but also increases latency and computation time.

--verbose or -v enables the display of information messages from the drone, and of the velocity commands sent to the drone.

The program will first connect to the drone, start the video streaming and decoding, and then the drone will take off and hover until it detects one (and only one) 36h11 AprilTag in the image.
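The options above can be summarized by the following C++ sketch, which parses them into a settings structure holding the documented defaults and flags a desired distance outside the recommended 0.5 to 2 m range. It is only an illustration under these assumptions: ServoOptions, parseServoOptions() and distanceIsRecommended() are hypothetical names, servoBebop2.cpp has its own parser, and the --tag_size option used in the command above is included with a placeholder value since its default is not stated here.

```cpp
#include <cassert>
#include <cstddef>
#include <stdexcept>
#include <string>
#include <vector>

// Hypothetical settings mirroring the options described above, with the
// documented defaults.
struct ServoOptions {
  std::string ip = "192.168.42.1";  // --ip
  double distanceToTag = 1.0;       // --distance_to_tag, in meters
  double tagSize = -1.0;            // --tag_size, in meters (-1: placeholder for "not given")
  std::string intrinsicFile;        // --intrinsic (empty: default parameters)
  bool hdStream = false;            // --hd_stream (720p instead of 480p)
  bool verbose = false;             // --verbose or -v
};

// Parses arguments such as {"--ip", "192.168.43.1", "--hd_stream"}.
// Throws std::invalid_argument on an unknown option or a missing value;
// std::stod throws std::invalid_argument or std::out_of_range on a bad number.
ServoOptions parseServoOptions(const std::vector<std::string> &args)
{
  ServoOptions opt;
  for (std::size_t i = 0; i < args.size(); ++i) {
    const std::string &arg = args[i];
    // Consumes and returns the value that follows an option.
    auto nextValue = [&]() -> const std::string & {
      if (i + 1 >= args.size()) {
        throw std::invalid_argument("missing value after " + arg);
      }
      return args[++i];
    };
    if (arg == "--ip") {
      opt.ip = nextValue();
    }
    else if (arg == "--distance_to_tag") {
      opt.distanceToTag = std::stod(nextValue());
    }
    else if (arg == "--tag_size") {
      opt.tagSize = std::stod(nextValue());
    }
    else if (arg == "--intrinsic") {
      opt.intrinsicFile = nextValue();
    }
    else if (arg == "--hd_stream") {
      opt.hdStream = true;
    }
    else if (arg == "--verbose" || arg == "-v") {
      opt.verbose = true;
    }
    else {
      throw std::invalid_argument("unknown option " + arg);
    }
  }
  return opt;
}

// The tutorial recommends a desired distance between 0.5 and 2 meters.
bool distanceIsRecommended(double meters) { return meters >= 0.5 && meters <= 2.0; }
```

Keeping the defaults in the structure itself means that an empty command line, as in ./servoBebop2 alone, yields the documented behavior.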

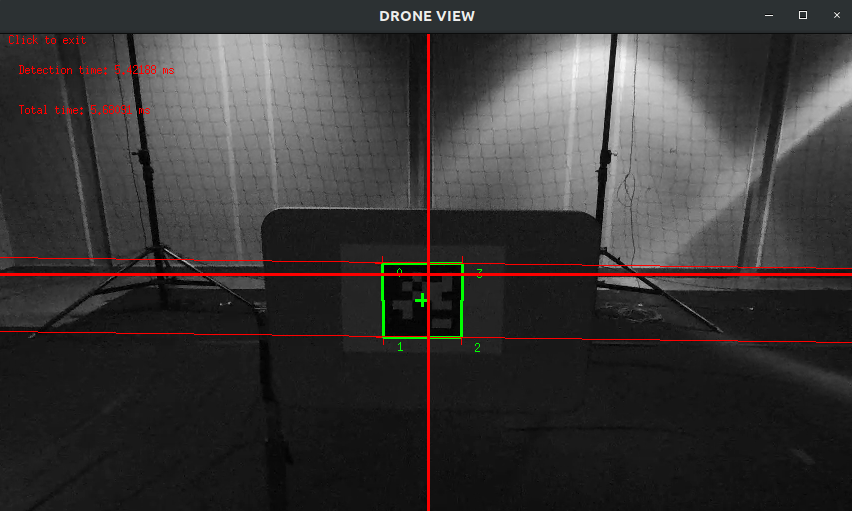

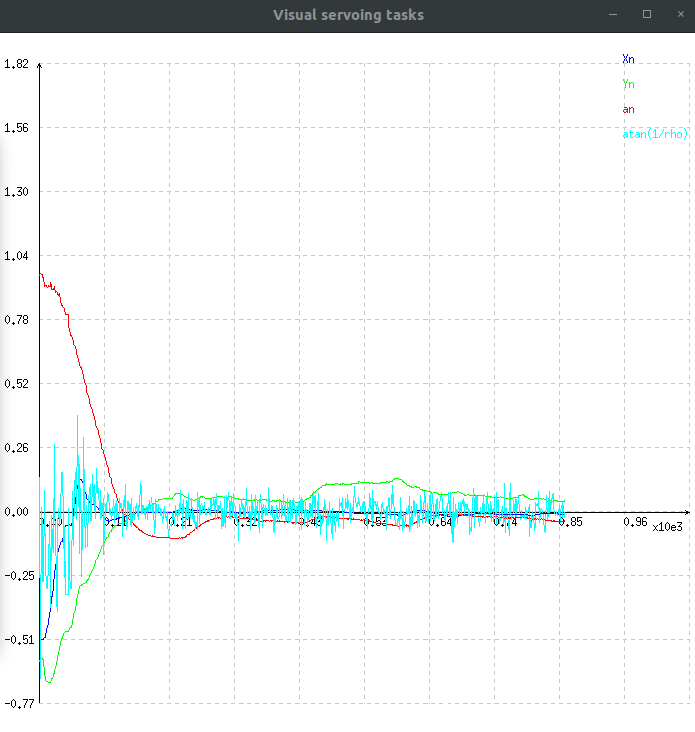

We then display the drone video stream with the visible features, as well as the error for each feature:

In this graph:

Clicking on the drone view display will make the drone land, safely disconnect everything and quit the program.
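The run-time behavior described above, hovering until exactly one AprilTag is detected, servoing on it, and landing when the display is clicked, can be sketched as a per-frame decision function. This is a hypothetical illustration, not the servoBebop2.cpp code: the DroneAction enum, the velocity ordering (vx, vy, vz, wz), sending a zero velocity while hovering, and falling back to hovering whenever the tag count differs from one are all assumptions.

```cpp
#include <array>
#include <cassert>

using Velocity4 = std::array<double, 4>;  // assumed order: vx, vy, vz, wz

enum class DroneAction { Hover, Servo, Land };

// Hypothetical decision step, run once per processed image.
DroneAction decideAction(int nbDetectedTags, bool userClicked)
{
  assert(nbDetectedTags >= 0);
  if (userClicked) {
    return DroneAction::Land;  // a click on the display lands the drone
  }
  // Servo only when exactly one 36h11 tag is detected; otherwise keep hovering.
  return nbDetectedTags == 1 ? DroneAction::Servo : DroneAction::Hover;
}

// Velocity sent to the drone: the servo command in Servo mode, zero otherwise.
Velocity4 commandFor(DroneAction action, const Velocity4 &servoCommand)
{
  return action == DroneAction::Servo ? servoCommand : Velocity4{0.0, 0.0, 0.0, 0.0};
}
```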

If you need to change the drone IP address, for instance to fly multiple drones, you can follow these steps:

$ telnet 192.168.42.1

$ mount -o remount,rw /

Edit /sbin/broadcom_setup.sh:

$ cd sbin

$ vi broadcom_setup.sh

In the vi text editor, press i to start editing and Escape to stop; type : followed by wq and Enter to save and quit, or q! to quit without saving.

Change IFACE_IP_AP="192.168.42.1" to IFACE_IP_AP="192.168.x.1", where x is any number that you have not assigned to any other drone yet.

Finally, close the telnet session with exit.

If you want to control multiple drones from a single computer, you need to change the IP address of the drones by following Changing Bebop 2 IP address.

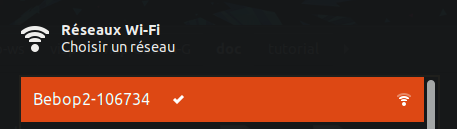

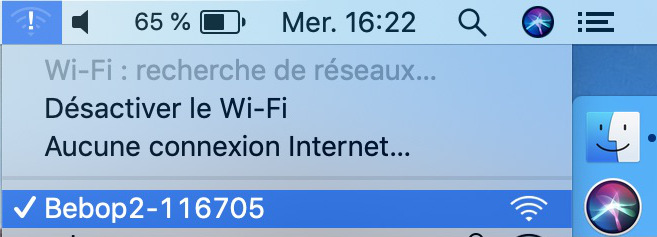

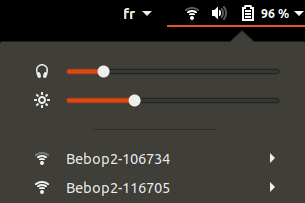

Once every drone you want to use has a unique IP address, you need to connect your PC to each drone's WiFi network. You can use multiple WiFi dongles and your PC's WiFi card, if it has one.
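Before connecting, you may want to check that the addresses you assigned follow the 192.168.x.1 pattern used in Changing Bebop 2 IP address and that no two drones share the same x. The following C++ sketch does only that; bebopSubnet() and dronesHaveUniqueAddresses() are hypothetical helpers, not part of ViSP.

```cpp
#include <cassert>
#include <set>
#include <sstream>
#include <string>
#include <vector>

// Returns x if `ip` has the form 192.168.x.1 with x in [0, 255], -1 otherwise.
int bebopSubnet(const std::string &ip)
{
  std::istringstream in(ip);
  int a = -1, b = -1, x = -1, d = -1;
  char dot1 = 0, dot2 = 0, dot3 = 0;
  if (!(in >> a >> dot1 >> b >> dot2 >> x >> dot3 >> d)) {
    return -1;  // not four separated integers
  }
  char extra = 0;
  if (in >> extra) {
    return -1;  // trailing characters after the last number
  }
  const bool dots = dot1 == '.' && dot2 == '.' && dot3 == '.';
  if (!dots || a != 192 || b != 168 || d != 1 || x < 0 || x > 255) {
    return -1;
  }
  return x;
}

// Returns true if every address is a 192.168.x.1 address and no two drones
// use the same x.
bool dronesHaveUniqueAddresses(const std::vector<std::string> &ips)
{
  std::set<int> usedSubnets;
  for (const std::string &ip : ips) {
    const int x = bebopSubnet(ip);
    if (x < 0 || !usedSubnets.insert(x).second) {
      return false;
    }
  }
  return true;
}
```

Comparing the parsed x values rather than the raw strings means that two spellings of the same address are still detected as a conflict.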

For two drones, it should look like this (on Ubuntu):

In ViSP programs that use the drone, you can then use the --ip option to specify the IP address of the drone you want to connect to:

$ cd ${VISP_WS}/visp-build/example/servo-bebop2

$ ./keyboardControlBebop2 --ip 192.168.42.1

and in another terminal:

$ cd ${VISP_WS}/visp-build/example/servo-bebop2

$ ./keyboardControlBebop2 --ip 192.168.43.1

In your own programs, you can specify the IP address in the constructor of the vpRobotBebop2 class:

#include <visp3/robot/vpRobotBebop2.h>
vpRobotBebop2 drone(false, true, "192.168.43.1"); // Low verbose level, settings reset to default, and drone IP address

If needed, see the section dedicated to Parrot Bebop 2 in Tutorial: Image frame grabbing to get images of the calibration grid.

You can also calibrate your drone camera and generate an XML file usable in the servoing program (see Tutorial: Camera intrinsic calibration).

If you need more details about this program, check the comments in servoBebop2.cpp.

You can check example program keyboardControlBebop2.cpp if you want to see how to control a Bebop 2 drone with the keyboard.

You can also check vpRobotBebop2 to see the full documentation of the Bebop 2 ViSP class.

A similar experiment can be done by following Tutorial: Image-based visual-servoing on a drone equipped with a Pixhawk.

Finally, if you are more interested in doing the same experiment with the ROS framework, you can follow the How to do visual servoing with Parrot Bebop 2 drone using visp_ros tutorial.