Visual Servoing Platform

version 3.0.0

This tutorial focuses on pose estimation from planar or non-planar points. From their 2D coordinates in the image plane and their corresponding 3D coordinates specified in an object frame, ViSP is able to estimate the relative pose between the camera and the object frame. This pose is returned as a homogeneous matrix cMo. Note that at least four points are needed to estimate a pose.

In this tutorial we assume that you are familiar with ViSP blob tracking. If not, see Tutorial: Blob tracking.

In this section we consider the case of four planar points. Image processing done by our blob tracker extracts the pixel coordinates of each blob's center of gravity. Then, using the camera intrinsic parameters, we convert these pixel coordinates into meters in the image plane. Knowing the 3D coordinates of the points, we then estimate the pose of the object.

The corresponding source code is provided in tutorial-pose-from-points-image.cpp.

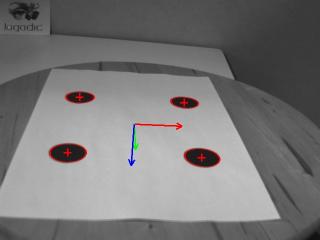

Here is a screen shot of the resulting program:

And here is the detailed explanation of the new lines introduced in the source code.

First we include the header of the vpPixelMeterConversion class, which contains static functions that convert the coordinates of a point expressed in pixels in the image into meters in the image plane using the camera intrinsic parameters. We also include the header of the vpPose class, which estimates a pose from points.

Here we introduce the computePose() function that does the pose estimation. This function takes two vectors as input. The first is a vector of vpPoint that contains the 3D coordinates in meters of each point, while the second is a vector of vpDot2 that contains the 2D coordinates in pixels of each blob's center of gravity. The 3D and 2D coordinates of the points need to be matched, meaning that point[i] and dot[i] should refer to the same physical point. The other inputs are cam, which holds the camera intrinsic parameters, and init, which indicates whether the pose needs to be initialized the first time. The cMo parameter will contain the resulting pose estimated from the input points.

In the computePose() function, we first create an instance of the vpPose class. Then, for each point, we get its 2D coordinates in pixels as the center of gravity of a blob and convert them into 2D coordinates (x,y) in meters in the image plane using vpPixelMeterConversion::convertPoint(). We then update the point instance with the corresponding 2D coordinates and add this point to the vpPose class. Once all the points are added, we can estimate the pose. If init is true, we estimate a first pose using the vpPose::DEMENTHON linear approach. It produces a pose used as initialization for the vpPose::VIRTUAL_VS (virtual visual servoing) nonlinear approach, which converges to a solution with a lower residual than the linear approach. If init is false, we consider that the previous pose cMo is near the solution, so that it can be used as initialization for the nonlinear visual servoing minimization.
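To illustrate what a lower residual means here, the following is a minimal standalone sketch that scores a candidate pose by its reprojection error. It does not use ViSP and is not how vpPose is implemented: `Pose`, `Point3`, `Point2`, `toCameraFrame`, `project` and `reprojectionResidual` are hypothetical names, and the sum of squared differences in the image plane is only one common definition of the residual, which may differ from the one ViSP reports.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct Point3 { double X, Y, Z; };  // 3D point in meters
struct Point2 { double x, y; };     // image-plane point in meters

// Hypothetical stand-in for a pose such as cMo: row-major [R t; 0 0 0 1].
struct Pose { double m[4][4]; };

// Express an object-frame point in the camera frame: cP = cMo * oP.
Point3 toCameraFrame(const Pose &cMo, const Point3 &oP) {
  const double (&m)[4][4] = cMo.m;
  return {
    m[0][0] * oP.X + m[0][1] * oP.Y + m[0][2] * oP.Z + m[0][3],
    m[1][0] * oP.X + m[1][1] * oP.Y + m[1][2] * oP.Z + m[1][3],
    m[2][0] * oP.X + m[2][1] * oP.Y + m[2][2] * oP.Z + m[2][3],
  };
}

// Perspective projection onto the normalized image plane.
Point2 project(const Point3 &cP) {
  assert(cP.Z > 0.0);  // the point must lie in front of the camera
  return {cP.X / cP.Z, cP.Y / cP.Z};
}

// Sum of squared differences between projected model points and observations.
// model[i] and observed[i] must refer to the same physical point.
double reprojectionResidual(const Pose &cMo,
                            const std::vector<Point3> &model,
                            const std::vector<Point2> &observed) {
  assert(model.size() == observed.size());
  double sum = 0.0;
  for (std::size_t i = 0; i < model.size(); ++i) {
    const Point2 p = project(toCameraFrame(cMo, model[i]));
    const double dx = p.x - observed[i].x;
    const double dy = p.y - observed[i].y;
    sum += dx * dx + dy * dy;
  }
  return sum;
}
```

Under this definition, a pose that explains the observations perfectly yields a residual of zero, and an iterative refinement is expected to drive the value down from the one obtained with the linear initialization.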

In the main() function, instead of using an image sequence of the object, we always consider the same image "square.pgm". This image shows a 12 cm by 12 cm square. Each corner is represented by a blob whose center of gravity corresponds to the corner position. This gray-level image is read from disk.

After the instantiation of a window able to display the image and, at the end, the result of the estimated pose, we set up the camera parameters. We consider here the camera model $\mathbf{a} = (p_x, p_y, u_0, v_0)$, where $p_x$ and $p_y$ are the ratios between the focal length and the size of a pixel, and where the principal point $(u_0, v_0)$ is located at the image center position.
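To make the role of these intrinsic parameters concrete, here is a minimal standalone sketch of the pixel-to-meter conversion, assuming a perspective model without lens distortion. It is not the ViSP implementation: `CameraParams` and `pixelToMeter` are hypothetical names introduced for illustration, with `px` and `py` the focal-length-to-pixel-size ratios and `(u0, v0)` the principal point.

```cpp
#include <cassert>

// Hypothetical stand-in for the camera intrinsic parameters (not the ViSP class).
struct CameraParams {
  double px, py;  // ratio between focal length and pixel size, along u and v
  double u0, v0;  // principal point, in pixels
};

// Convert pixel coordinates (u, v) into image-plane coordinates (x, y) in meters,
// assuming a perspective camera without distortion.
void pixelToMeter(const CameraParams &cam, double u, double v,
                  double &x, double &y) {
  assert(cam.px != 0.0 && cam.py != 0.0);
  x = (u - cam.u0) / cam.px;
  y = (v - cam.v0) / cam.py;
}
```

As an example with made-up values, taking px = py = 800 and a principal point at (320, 240), the pixel (720, 40) maps to x = 0.5 and y = -0.25, while the principal point itself maps to (0, 0).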

Each blob is then tracked using a vector of four vpDot2 trackers. To avoid human interaction, we initialize the tracker with a pixel position inside each blob.

We also define the model of our 12 cm by 12 cm square by setting the 3D coordinates of the corners in an object frame located, in this example, in the middle of the square. We consider here that the points are located in the plane $Z = 0$.
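The square model can be sketched as follows, without ViSP types. `Point3` and `makeSquareModel` are hypothetical names, and the order in which the corners are listed is an assumption: whatever order is chosen must match the order of the tracked blobs.

```cpp
#include <cassert>
#include <vector>

struct Point3 { double X, Y, Z; };  // object-frame coordinates in meters

// Corners of a square with the given side length (in meters), centered on the
// origin of the object frame and lying in the plane Z = 0.
std::vector<Point3> makeSquareModel(double side) {
  assert(side > 0.0);
  const double h = side / 2.0;  // half side: distance from center to each edge
  return {
    {-h, -h, 0.0},
    { h, -h, 0.0},
    { h,  h, 0.0},
    {-h,  h, 0.0},
  };
}
```

Calling `makeSquareModel(0.12)` gives the four corners of the 12 cm square, each at 0.06 m from the frame origin along both X and Y.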

Next, we create a homogeneous matrix cMo that will contain the estimated pose.

In the infinite loop, at each iteration we read a new image (in this example it remains the same), display its content in the window, and track each blob.

Then we call the pose estimation function computePose() presented previously. It uses the 3D coordinates of the points defined as our model in the object frame, and their corresponding 2D positions in the image obtained by the blob tracker to estimate the pose cMo. This homogeneous transformation gives the position of the object frame in the camera frame.

The resulting pose is displayed as an RGB frame in the window overlay. Red, green and blue are used for the x, y and z axes respectively. Each axis is 0.05 meter long. All the drawings are then flushed to update the window content.
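Drawing such a frame amounts to projecting the frame origin and the tip of each axis into the image. The sketch below shows that computation under the assumption of a perspective camera without distortion. It is independent of ViSP's display code, and `Pose`, `CameraParams`, `Pixel`, `objectPointToPixel` and `frameAxesInImage` are hypothetical names.

```cpp
#include <array>
#include <cassert>

struct Pose { double m[4][4]; };                 // row-major [R t; 0 0 0 1]
struct CameraParams { double px, py, u0, v0; };  // intrinsics, no distortion
struct Pixel { double u, v; };

// Project a point given in the object frame to pixel coordinates.
Pixel objectPointToPixel(const Pose &cMo, const CameraParams &cam,
                         double X, double Y, double Z) {
  const double (&m)[4][4] = cMo.m;
  const double cX = m[0][0] * X + m[0][1] * Y + m[0][2] * Z + m[0][3];
  const double cY = m[1][0] * X + m[1][1] * Y + m[1][2] * Z + m[1][3];
  const double cZ = m[2][0] * X + m[2][1] * Y + m[2][2] * Z + m[2][3];
  assert(cZ > 0.0);  // the point must lie in front of the camera
  return {cam.u0 + cam.px * cX / cZ, cam.v0 + cam.py * cY / cZ};
}

// Pixel positions of the frame origin and of the tips of its x, y and z axes,
// in that order. Segments from the origin to each tip would be drawn in red,
// green and blue respectively.
std::array<Pixel, 4> frameAxesInImage(const Pose &cMo, const CameraParams &cam,
                                      double length) {
  return {{
    objectPointToPixel(cMo, cam, 0.0, 0.0, 0.0),
    objectPointToPixel(cMo, cam, length, 0.0, 0.0),
    objectPointToPixel(cMo, cam, 0.0, length, 0.0),
    objectPointToPixel(cMo, cam, 0.0, 0.0, length),
  }};
}
```

With an axis length of 0.05, the on-screen size of the frame shrinks as the object moves away from the camera, since each tip is divided by its depth before being scaled to pixels.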

At the end of the first iteration, we turn off the init flag so that subsequent pose estimations use the nonlinear vpPose::VIRTUAL_VS method with the previous pose as the initial value.

Finally, we exit the infinite loop when the user clicks in the window.

We also introduce a 40 milliseconds sleep to slow down the loop and relax the CPU.

We also provide another example in tutorial-pose-from-points-tracking.cpp that shows how to estimate the pose from tracking performed on images acquired by a FireWire camera.