|

Visual Servoing Platform

version 3.6.1 under development (2025-01-21)

|

|

Visual Servoing Platform

version 3.6.1 under development (2025-01-21)

|

#include <visp3/dnn_tracker/vpMegaPose.h>

Public Types | |

| enum | ServerMessage { UNKNOWN = 0 , ERR = 1 , OK = 2 , GET_POSE = 3 , RET_POSE = 4 , GET_VIZ = 5 , RET_VIZ = 6 , SET_INTR = 7 , GET_SCORE = 8 , RET_SCORE = 9 , SET_SO3_GRID_SIZE = 10 , GET_LIST_OBJECTS = 11 , RET_LIST_OBJECTS = 12 , EXIT = 13 } |

Public Member Functions | |

| vpMegaPose (const std::string &host, int port, const vpCameraParameters &cam, unsigned height, unsigned width) | |

| std::vector< vpMegaPoseEstimate > | estimatePoses (const vpImage< vpRGBa > &image, const std::vector< std::string > &objectNames, const vpImage< uint16_t > *const depth=nullptr, const double depthToM=0.f, const std::vector< vpRect > *const detections=nullptr, const std::vector< vpHomogeneousMatrix > *const initial_cTos=nullptr, int refinerIterations=-1) |

| std::vector< double > | scorePoses (const vpImage< vpRGBa > &image, const std::vector< std::string > &objectNames, const std::vector< vpHomogeneousMatrix > &cTos) |

| void | setIntrinsics (const vpCameraParameters &cam, unsigned height, unsigned width) |

| vpImage< vpRGBa > | viewObjects (const std::vector< std::string > &objectNames, const std::vector< vpHomogeneousMatrix > &poses, const std::string &viewType) |

| void | setCoarseNumSamples (const unsigned num) |

| std::vector< std::string > | getObjectNames () |

| ~vpMegaPose () | |

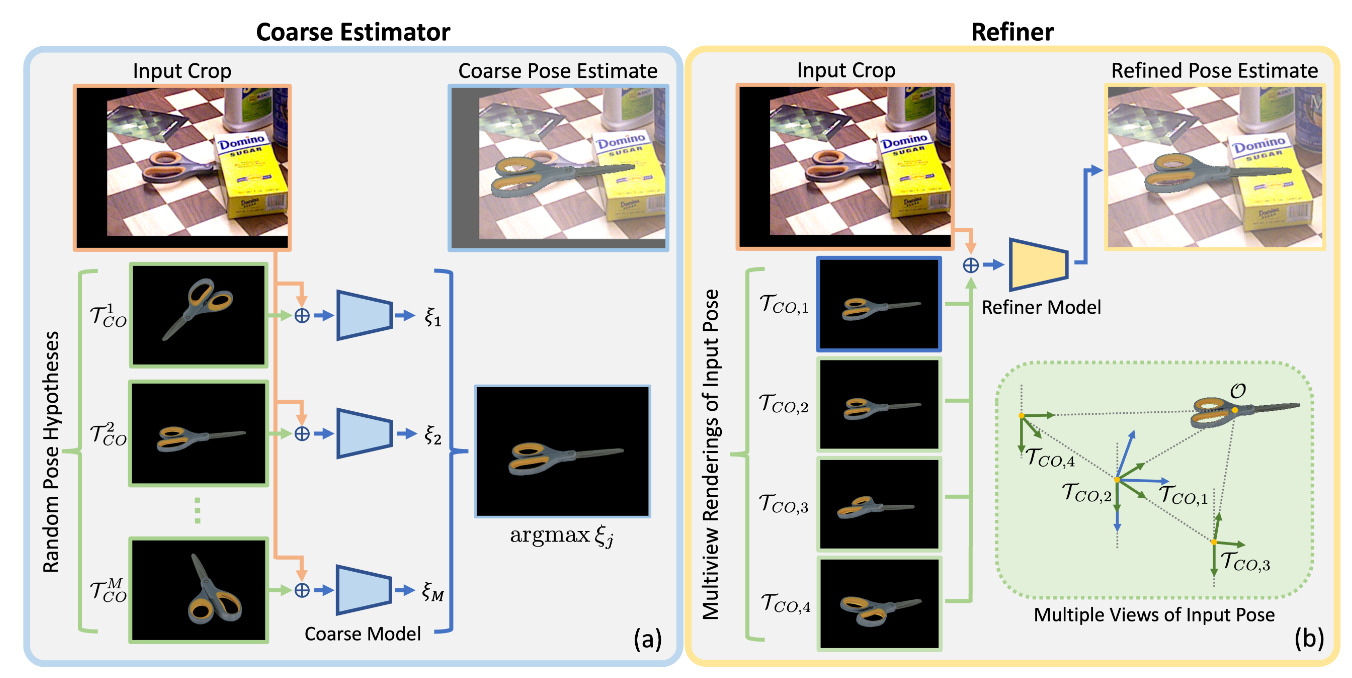

Class to communicate with a MegaPose server. MegaPose is a deep learning-based method to estimate the 6D pose of novel objects, meaning that it does not require training for a specific object. MegaPose is a multistage method and can be used either as a full pose estimation algorithm or as a tracker. MegaPose works by render and compare: a synthetized view of an object is compared with a real-world image to see if the poses match. Behind the scene, there are two main models:

This can be best visualized in the figure below (taken from [25]):

For more information on how the model works, see The MegaPose Github page or the paper [25].

For instructions on how to install the Python server and an example usage, see Tutorial: Tracking with MegaPose.

Definition at line 137 of file vpMegaPose.h.

Enum to communicate with python server. Used to map to a string, which the client and server check to see what message they are getting/expecting.

Definition at line 144 of file vpMegaPose.h.

| vpMegaPose::vpMegaPose | ( | const std::string & | host, |

| int | port, | ||

| const vpCameraParameters & | cam, | ||

| unsigned | height, | ||

| unsigned | width | ||

| ) |

Instantiates a connection to a MegaPose server. The server should already be started and listening at host:port.

| [in] | host | : The host to connect to (IP address). |

| [in] | port | : The port on which the server is listening for incoming connections. |

| [in] | cam | : Intrinsics of the camera with which the images sent to the MegaPose server are acquired. |

| [in] | height | : Height of the images sent to the server. |

| [in] | width | : Width of the images sent to the server. |

Definition at line 370 of file vpMegaPose.cpp.

References vpException::badValue, vpException::ioError, and setIntrinsics().

| vpMegaPose::~vpMegaPose | ( | ) |

Definition at line 399 of file vpMegaPose.cpp.

| std::vector< vpMegaPoseEstimate > vpMegaPose::estimatePoses | ( | const vpImage< vpRGBa > & | image, |

| const std::vector< std::string > & | objectNames, | ||

| const vpImage< uint16_t > *const | depth = nullptr, |

||

| const double | depthToM = 0.f, |

||

| const std::vector< vpRect > *const | detections = nullptr, |

||

| const std::vector< vpHomogeneousMatrix > *const | initial_cTos = nullptr, |

||

| int | refinerIterations = -1 |

||

| ) |

Estimate the poses of objects (in the frame of the camera c) with MegaPose. The object origins used to estimate the poses are those used by the MegaPose server.

| [in] | image | : The image, acquired by camera c, used to estimate the pose of the objects. |

| [in] | objectNames | : Names of the objects for which to estimate the pose. The name of the object should be known by the MegaPose server. An object name can appear multiple times if multiple instances of the object are in the image and their pose should estimated. |

| [in] | depth | : An optional depth image, that must be aligned with the RGB image. If provided, the MegaPose server should be configure to use the depth. Note that the using depth may lead to a noisy estimation and should not always be preferred. |

| [in] | depthToM | : A scale factor that is used to convert the depth map into meters. If depth is null, the value is ignored. |

| [in] | detections | : The bounding boxes of the objects objectNames. Used only for the coarse model, which uses the size of the bounding box to generate initial guesses. If specified, should be the same size as objectNames. The bounding box at index i will be for the object i. |

| [in] | initial_cTos | : An optional initial pose estimate for each object present in the image. If not null, then the MegaPose server only runs the refiner model, which is faster but only allows for smaller corrections (depending on the number of refiner iterations). If specified, should be the same size as objectNames. The initial pose estimate at index i will be for the object at index i. |

| [in] | refinerIterations | : Number of MegaPose refiner iterations to be performed. |

Definition at line 413 of file vpMegaPose.cpp.

References vpException::badValue.

| std::vector< std::string > vpMegaPose::getObjectNames | ( | ) |

Query the server to find the name of all of the objects it knows.

Definition at line 601 of file vpMegaPose.cpp.

| std::vector< double > vpMegaPose::scorePoses | ( | const vpImage< vpRGBa > & | image, |

| const std::vector< std::string > & | objectNames, | ||

| const std::vector< vpHomogeneousMatrix > & | cTos | ||

| ) |

Score the input poses with MegaPose. The score, between 0 and 1, indicates whether the refiner model can converge to the correct pose. As such, it should mainly be used to detect divergence, and not the quality of the pose estimate.

| [in] | image | : The input RGB image in which the objects are located. |

| [in] | objectNames | : The detected objects. Names should be known by the MegaPose server. |

| [in] | cTos | : The object poses to be scored. |

Definition at line 489 of file vpMegaPose.cpp.

| void vpMegaPose::setCoarseNumSamples | ( | const unsigned | num | ) |

Set the number of renders used for coarse pose estimation by MegaPose.

| [in] | num | : The number of renders for full pose estimation by MegaPose. This number should be equal to 72, 512, 576 or 4608. |

Definition at line 584 of file vpMegaPose.cpp.

| void vpMegaPose::setIntrinsics | ( | const vpCameraParameters & | cam, |

| unsigned | height, | ||

| unsigned | width | ||

| ) |

Set the camera parameters for the MegaPose server.

| [in] | cam | : The camera intrinsics. |

| [in] | height | : The incoming images' height. |

| [in] | width | : The incoming images' width. Note that the height and width should be equal to the width of the images used in estimatePoses or scorePoses. Otherwise an exception will be thrown. |

Definition at line 528 of file vpMegaPose.cpp.

References vpCameraParameters::get_px(), vpCameraParameters::get_py(), vpCameraParameters::get_u0(), and vpCameraParameters::get_v0().

Referenced by vpMegaPose().

| vpImage< vpRGBa > vpMegaPose::viewObjects | ( | const std::vector< std::string > & | objectNames, |

| const std::vector< vpHomogeneousMatrix > & | poses, | ||

| const std::string & | viewType | ||

| ) |

Definition at line 553 of file vpMegaPose.cpp.