Visual Servoing Platform

version 3.6.1 under development (2024-11-15)

This tutorial explains how to perform image-based visual servoing (IBVS) with the Panda 7-dof robot from Franka Emika equipped with an Intel Realsense SR300 camera. It follows Tutorial: PBVS with Panda 7-dof robot from Franka Emika, which also explains how to set up the robot.

At this point we assume that you have completed all the Prerequisites given in Tutorial: PBVS with Panda 7-dof robot from Franka Emika.

An example of image-based visual servoing using a Panda robot equipped with a Realsense camera is available in servoFrankaIBVS.cpp.

The commands below assume that the extrinsic camera parameters are available in apps/calibration/eMc.yaml. Now enter the example/servo-franka folder and run the servoFrankaIBVS binary, using --eMc to locate the file containing the eMc transformation (the homogeneous transformation between the end-effector and the camera frame). Other options are available; use --help to list them:

$ cd example/servo-franka
$ ./servoFrankaIBVS --help
./servoFrankaIBVS [--ip <default 192.168.1.1>] [--tag_size <marker size in meter; default 0.12>] [--eMc <eMc extrinsic file>] [--quad_decimate <decimation; default 2>] [--adaptive_gain] [--plot] [--task_sequencing] [--no-convergence-threshold] [--verbose] [--help] [-h]

Run the binary activating the plot and using a constant gain:

$ ./servoFrankaIBVS --eMc ../../apps/calibration/eMc.yaml --plot

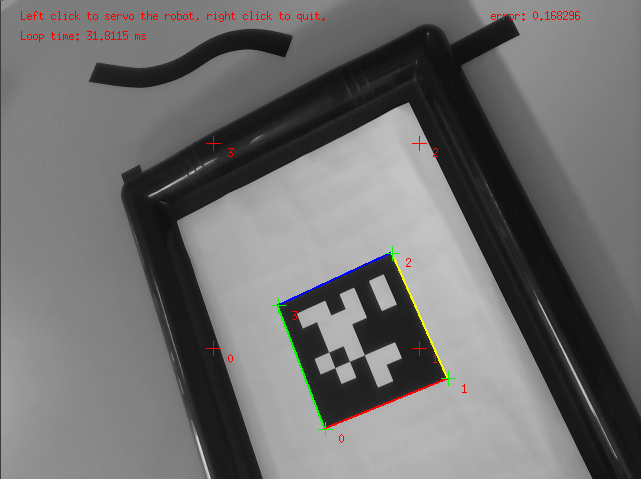

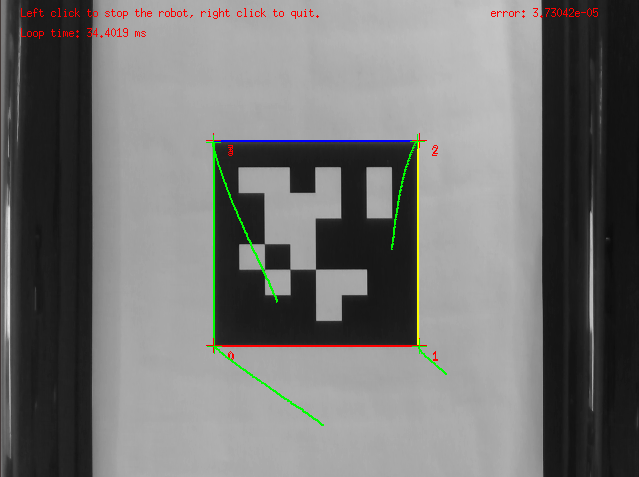

Use the left mouse click to enable the robot controller, and the right click to quit the binary.

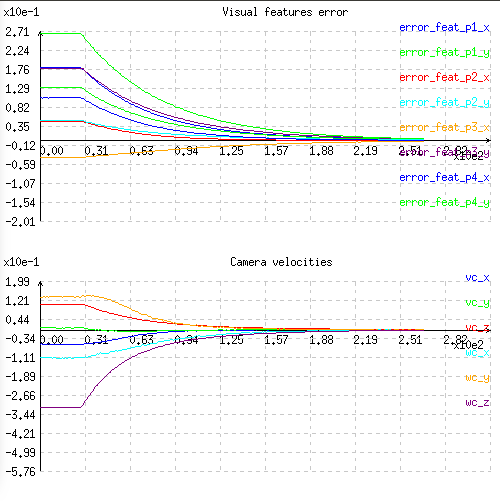

At this point the behaviour that you should observe is the following:

You can also activate an adaptive gain that will make the convergence faster:

$ ./servoFrankaIBVS --eMc ../../apps/calibration/eMc.yaml --plot --adaptive_gain
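To give an intuition of why an adaptive gain speeds up convergence, here is a minimal sketch of an exponential gain profile of the kind used for adaptive gains in visual servoing: the gain is low when the error is large, which limits the initial velocity, and increases as the error shrinks, which shortens the final approach. The struct `AdaptiveGainSketch`, its member names and the numeric values in the usage note are assumptions made for illustration; they are not taken from servoFrankaIBVS.cpp and this is not the ViSP API.

```cpp
#include <cmath>

// Hypothetical helper for illustration only (not a ViSP class).
// Evaluates lambda(x) = (l0 - linf) * exp(-slope0 / (l0 - linf) * x) + linf
// where x is a norm of the visual error, l0 the gain at x = 0,
// linf the gain when x tends to infinity, and slope0 the slope magnitude at x = 0.
struct AdaptiveGainSketch {
  double gain_at_zero;      // l0: gain applied close to convergence
  double gain_at_infinity;  // linf: gain applied far from the goal
  double slope_at_zero;     // slope0: how fast the gain drops as the error grows

  double value(double error_norm) const
  {
    const double range = gain_at_zero - gain_at_infinity;
    // With equal bounds the profile degenerates to a constant gain.
    if (std::fabs(range) < 1e-12) {
      return gain_at_zero;
    }
    return range * std::exp(-slope_at_zero / range * error_norm) + gain_at_infinity;
  }
};
```

With assumed values such as `AdaptiveGainSketch gain{4.0, 0.4, 30.0};`, `gain.value(0.0)` yields the gain at zero error, and the returned gain decreases monotonically toward `gain_at_infinity` as the error norm grows.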

You can also make the robot start with zero velocity by enabling the task sequencing option:

$ ./servoFrankaIBVS --eMc ../../apps/calibration/eMc.yaml --plot --task_sequencing
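The idea behind task sequencing can be sketched on a scalar error. A classical control law v = -lambda * e produces a velocity jump at t = 0, since the initial error is generally not zero. Adding a term that cancels the command at t = 0 and then decays exponentially gives a smooth start. The function name `sequencedVelocity` and the scalar simplification are assumptions made for illustration; the real example works on feature vectors and an interaction matrix.

```cpp
#include <cmath>

// Hypothetical scalar illustration of task sequencing (not the ViSP API).
// v(t) = -lambda * e(t) + lambda * e(0) * exp(-mu * t)
//   lambda        : control gain
//   mu            : decay rate of the compensation term
//   error         : current error e(t)
//   initial_error : error e(0) measured when the servo starts
//   t             : time in seconds since the servo started
double sequencedVelocity(double lambda, double mu, double error, double initial_error, double t)
{
  const double classical_term = -lambda * error;
  const double compensation = lambda * initial_error * std::exp(-mu * t);
  return classical_term + compensation;
}
```

At t = 0 the current error equals the initial error, so the two terms cancel and the commanded velocity is zero. As t grows the compensation vanishes and the law reduces to the classical v = -lambda * e.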

Finally, you can activate both the adaptive gain and task sequencing:

$ ./servoFrankaIBVS --eMc ../../apps/calibration/eMc.yaml --plot --adaptive_gain --task_sequencing

To learn more about adaptive gain and task sequencing see Tutorial: How to boost your visual servo control law.

If you want a physical simulation of a Franka robot, with a model that has been accurately identified from a real Franka robot, we recommend Tutorial: FrankaSim a Panda 7-dof robot from Franka Emika simulator, which is available in visp_ros. There you will find a ROS package that implements position, velocity and impedance control of a simulated Franka robot using ROS and CoppeliaSim.

You can also follow Tutorial: Image-based visual servo, which gives some hints on image-based visual servoing in simulation with a free-flying camera.