Visual Servoing Platform

version 3.3.0 under development (2020-02-17)

This tutorial explains how to perform position-based visual servoing (PBVS) with the Panda 7-dof robot from Franka Emika equipped with an Intel Realsense SR300 camera.

The following video shows the resulting robot trajectory while the robot performs position-based visual servoing on an Apriltag target.

We suppose here that you have:

In order to control your robot using libfranka, the controller program on the workstation PC must run with real-time priority under a PREEMPT_RT kernel. This tutorial shows how to proceed. We recall here the steps:

First, install the necessary dependencies:

$ sudo apt-get install build-essential bc curl ca-certificates fakeroot gnupg2 libssl-dev lsb-release

Then, you have to decide which kernel version to use. We recommend choosing the same version as in the tutorial. At the time it was written, it was 4.14.12 with the 4.14.12-rt10 patch. If you choose a different version, simply substitute the numbers. Having decided on a version, use curl to download the source files:

$ cd ~/visp_ws
$ mkdir rt-linux; cd rt-linux
$ curl -SLO https://www.kernel.org/pub/linux/kernel/v4.x/linux-4.14.12.tar.xz
$ curl -SLO https://www.kernel.org/pub/linux/kernel/projects/rt/4.14/older/patch-4.14.12-rt10.patch.xz

And decompress them with:

$ xz -d linux-4.14.12.tar.xz
$ xz -d patch-4.14.12-rt10.patch.xz
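Before moving on, it can help to confirm that both decompressed files are present and non-empty. The helper below is only a sketch: the function name `check_sources` is hypothetical, and this check does not replace a cryptographic signature verification of the downloads.

```shell
# check_sources FILE...: succeed only if every listed file exists and is non-empty.
# (Hypothetical helper; it does not verify signatures.)
check_sources() {
  for f in "$@"; do
    if [ ! -s "$f" ]; then
      echo "missing or empty: $f" >&2
      return 1
    fi
  done
  return 0
}

# Example, run from ~/visp_ws/rt-linux:
#   check_sources linux-4.14.12.tar patch-4.14.12-rt10.patch && echo "sources look complete"
```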

**Compiling the kernel**

Once you are sure the files were downloaded properly, you can extract the source code and apply the patch:

$ tar xf linux-4.14.12.tar
$ cd linux-4.14.12
$ patch -p1 < ../patch-4.14.12-rt10.patch

The next step is to configure your kernel:

$ make oldconfig

This opens a text-based configuration menu. When asked for the Preemption Model, choose the Fully Preemptible Kernel:

We recommend keeping all options at their default values. Afterwards, you are ready to compile the kernel. As this is a lengthy process, set the multithreading option -j to the number of CPU cores on your machine:

$ fakeroot make -j4 deb-pkg
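Rather than hard-coding `-j4`, the job count can be read from the machine. This is a small sketch that assumes the `nproc` utility from GNU coreutils is installed; the variable names are illustrative, and the build command is only printed so that it can be reviewed before being run.

```shell
# Number of processing units available to this process (GNU coreutils).
njobs=$(nproc)

# Print the build command instead of running it, so it can be checked first.
build_cmd="fakeroot make -j${njobs} deb-pkg"
echo "$build_cmd"
```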

Finally, you are ready to install the newly created package. The exact names depend on your environment, but you are looking for headers and images packages without the dbg suffix. To install:

$ sudo dpkg -i ../linux-headers-4.14.12-rt10_*.deb ../linux-image-4.14.12-rt10_*.deb

Reboot the computer

$ sudo reboot

The version of the kernel is now 4.14.12:

$ uname -msr
Linux 4.14.12 x86_64
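The version number alone does not show whether the real-time patch is active. One way to check, sketched here, is to look for the PREEMPT RT marker in the kernel build string printed by `uname -v`. The function name `is_rt_kernel` is hypothetical, and the exact wording of the marker depends on the kernel version, so both common spellings are accepted.

```shell
# is_rt_kernel STRING: succeed when a `uname -v` style string advertises PREEMPT_RT.
# Older RT patches print "PREEMPT RT"; newer kernels print "PREEMPT_RT".
is_rt_kernel() {
  case "$1" in
    *"PREEMPT RT"*|*PREEMPT_RT*) return 0 ;;
    *) return 1 ;;
  esac
}

if is_rt_kernel "$(uname -v)"; then
  echo "PREEMPT_RT kernel is running"
else
  echo "running kernel does not report PREEMPT_RT"
fi
```

On an RT-patched kernel the file /sys/kernel/realtime should also exist and contain 1.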

**Allow a user to set real-time permissions for its processes**

After the PREEMPT_RT kernel is installed and running, add a group named realtime and add the user controlling your robot to this group:

$ sudo addgroup realtime
$ sudo usermod -a -G realtime $(whoami)

Afterwards, add the following limits to the realtime group in /etc/security/limits.conf:

@realtime soft rtprio 99
@realtime soft priority 99
@realtime soft memlock 102400
@realtime hard rtprio 99
@realtime hard priority 99
@realtime hard memlock 102400

The limits will be applied after you log out and in again.
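After logging back in, you can check that the session really belongs to the realtime group. The sketch below parses the output of `id -nG`; the helper name `in_group` is hypothetical.

```shell
# in_group GROUP "SPACE SEPARATED GROUPS": succeed when GROUP appears in the list.
in_group() {
  for g in $2; do
    if [ "$g" = "$1" ]; then
      return 0
    fi
  done
  return 1
}

if in_group realtime "$(id -nG)"; then
  echo "current session is in the realtime group"
else
  echo "not in the realtime group yet: log out and back in, then check again"
fi
```

In a bash session, `ulimit -r` prints the maximum real-time priority available to the user, which should match the rtprio value set in /etc/security/limits.conf.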

As described here, to install the Franka library, follow the steps:

$ sudo apt install build-essential cmake git libpoco-dev libeigen3-dev
$ cd ~/visp_ws
$ git clone --recursive https://github.com/frankaemika/libfranka
$ cd libfranka
$ mkdir build
$ cd build
$ cmake .. -DCMAKE_BUILD_TYPE=Release
$ make -j4
$ sudo make install

Following the tutorial, we recall the main steps here:

Get librealsense from github:

$ cd ~/visp_ws
$ git clone https://github.com/IntelRealSense/librealsense.git
$ cd librealsense

Video4Linux backend preparation:

1/ Ensure no Intel RealSense cameras are plugged in.

2/ Install openssl package required for kernel module build:

$ sudo apt-get install libssl-dev

Install udev rules located in librealsense source directory:

$ sudo cp config/99-realsense-libusb.rules /etc/udev/rules.d/
$ sudo udevadm control --reload-rules && udevadm trigger

Install the packages required for librealsense build:

$ sudo apt-get install libusb-1.0-0-dev pkg-config libgtk-3-dev
$ sudo apt-get install libglfw3-dev

Build and install librealsense

$ mkdir build
$ cd build
$ cmake .. -DBUILD_EXAMPLES=ON -DCMAKE_BUILD_TYPE=Release
$ make -j4
$ sudo make install

Connect your Realsense camera (we are using an SR300) and check that you are able to acquire images by running:

$ ./examples/capture/rs-capture

If you are able to visualize the images, the librealsense installation succeeded.

We provide a ready-to-print 36h11 tag, 12 cm by 12 cm square [download].

If you want other tags, follow the steps described in Print an AprilTag marker.

Follow the steps described in Tutorial: Camera extrinsic calibration in order to estimate the end-effector to camera transformation. This step is mandatory to control the robot in Cartesian space in the camera frame.

Since you installed the new third-party libraries libfranka and librealsense, you need to configure ViSP again with cmake so that it can use them. To this end, follow Configure ViSP from source. At this step you should see the new USE_FRANKA and USE_LIBREALSENSE2 cmake variables appear in the CMake GUI.

Now follow the instructions for Build ViSP libraries.

Our robot controller has by default IP 192.168.1.1. Here we show how to configure a laptop that is connected with an Ethernet cable to the robot controller.

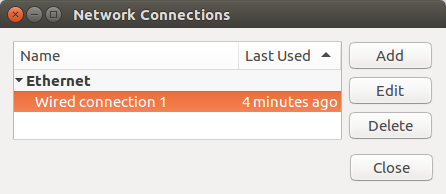

Edit Ethernet connections:

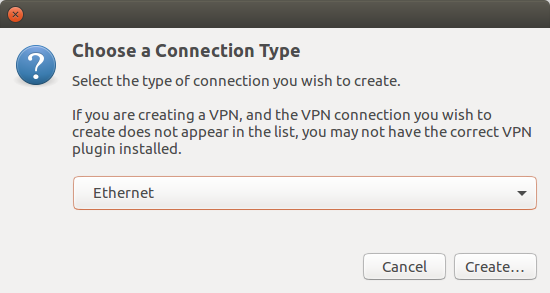

Add a new connection using the "Add" button. Choose the default Ethernet connection type:

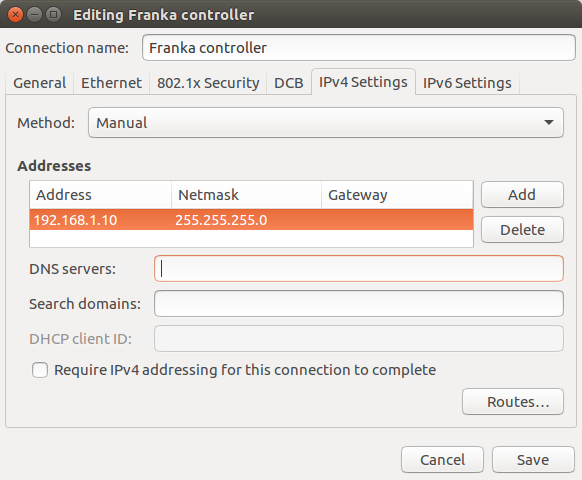

Click "Create" button in order to create a new Franka controller connection that has a static IPv4 like 192.168.1.10 and netmask 255.255.255.0:

Click "Save" button.

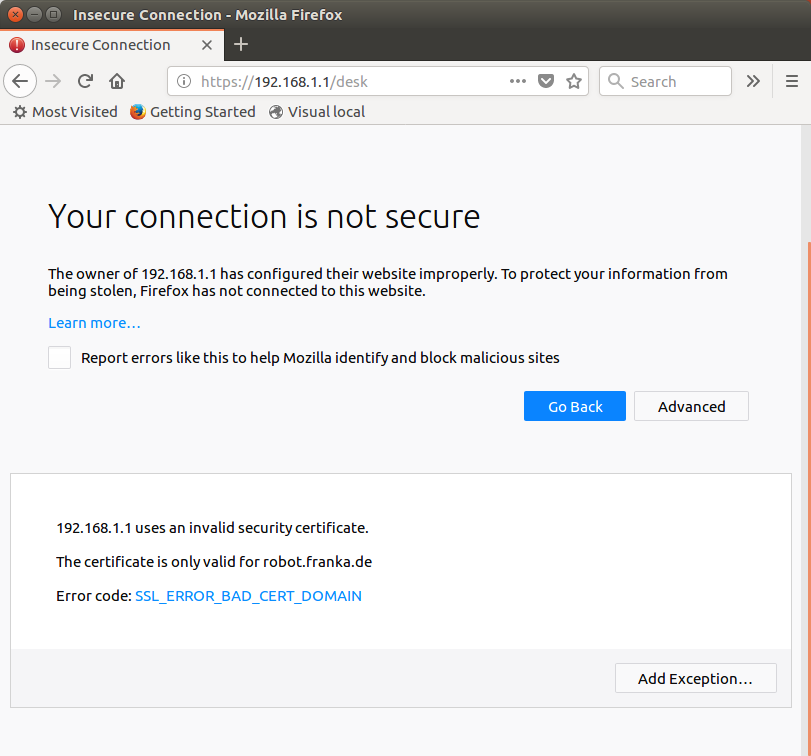

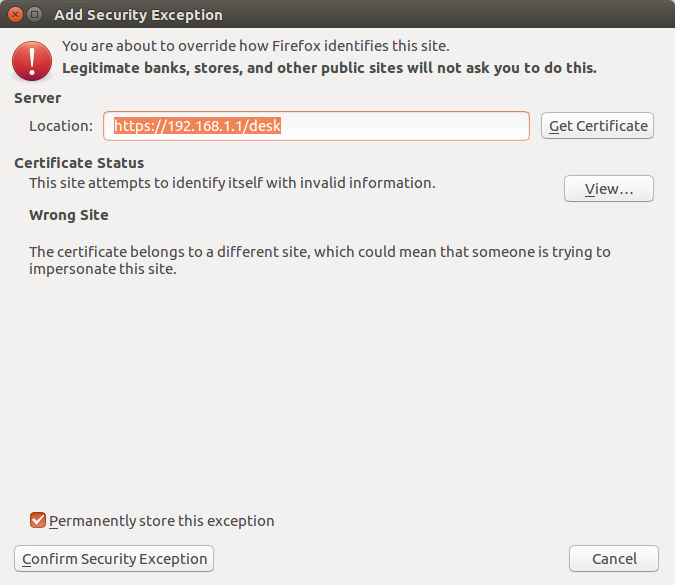
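The static address works because, with netmask 255.255.255.0, the laptop and the controller must share their first three octets. The sketch below checks exactly that; the helper name `same_subnet24` is hypothetical and only handles this /24 case.

```shell
# same_subnet24 A B: succeed when two dotted IPv4 addresses share their first
# three octets, i.e. they are on the same network under netmask 255.255.255.0.
same_subnet24() {
  [ "${1%.*}" = "${2%.*}" ]
}

laptop_ip=192.168.1.10   # static address chosen for the laptop
robot_ip=192.168.1.1     # default controller address used in this tutorial

if same_subnet24 "$laptop_ip" "$robot_ip"; then
  echo "$laptop_ip and $robot_ip are on the same /24 network"
else
  echo "$laptop_ip and $robot_ip are on different /24 networks"
fi

# Once the cable is plugged in and the connection is active, a quick
# reachability test is:
#   ping -c 3 192.168.1.1
```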

Select the new Ethernet Networks connection named "Franka controller". When the connection is established open a web browser like Firefox or Chromium and enter the address https://192.168.1.1/desk. The first time you will be warned that the connection is not secure. Click "Advanced" and "Add Exception":

Then confirm the security exception:

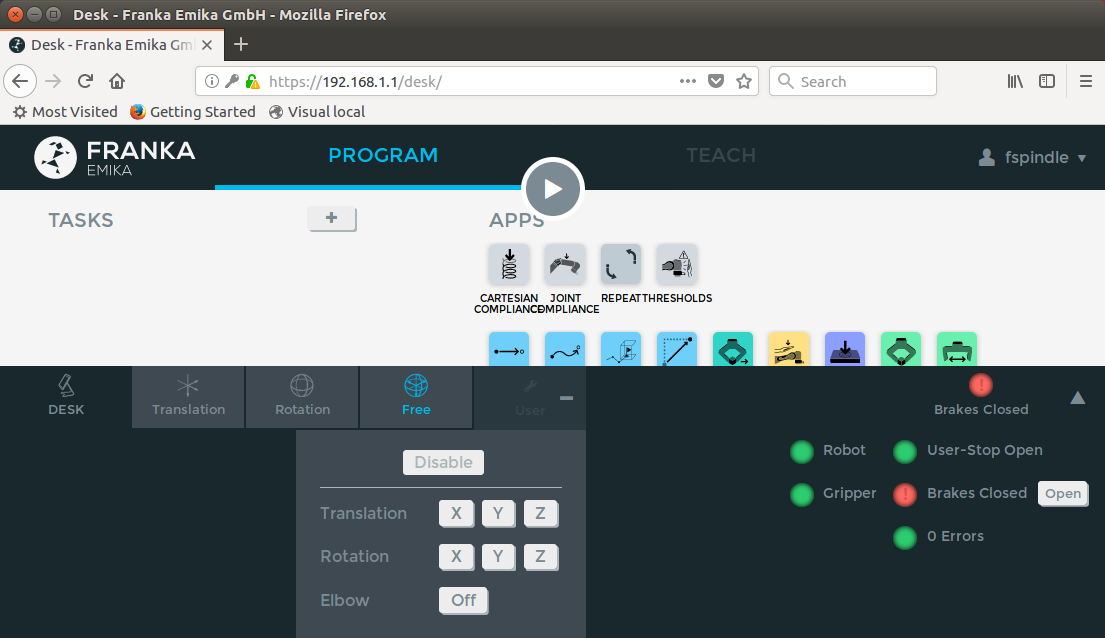

When connected, you may release the user-stop button and open brakes:

An example of position-based visual servoing using Panda robot equipped with a Realsense camera is available in servoFrankaPBVS.cpp.

This example needs the homogeneous transformation between the robot end-effector and the camera frame, estimated during the extrinsic calibration step. We suppose here that the file is located in tutorial/calibration/eMc.yaml. Now enter the example/servo-franka folder and run the servoFrankaPBVS binary, using --eMc to locate the file containing this transformation. Other options are available; use --help to list them:

$ cd example/servo-franka
$ ./servoFrankaPBVS --help
./servoFrankaPBVS [--ip <default 192.168.1.1>] [--tag_size <marker size in meter; default 0.12>] [--eMc <eMc extrinsic file>] [--quad_decimate <decimation; default 2>] [--adaptive_gain] [--plot] [--task_sequencing] [--no-convergence-threshold] [--verbose] [--help] [-h]

Run the binary activating the plot and using a constant gain:

$ ./servoFrankaPBVS --eMc ../../tutorial/calibration/eMc.yaml --plot

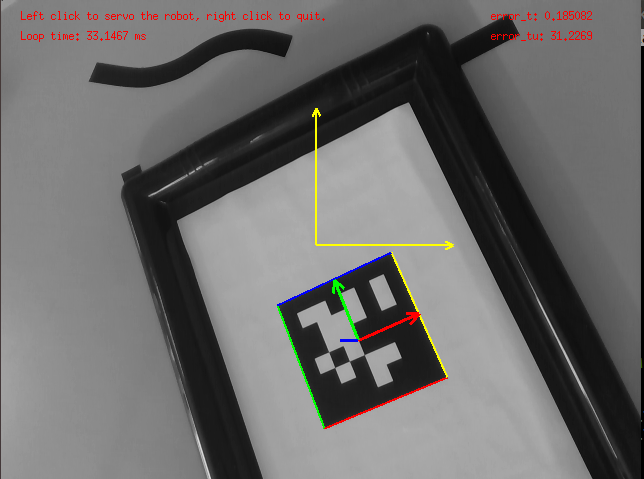

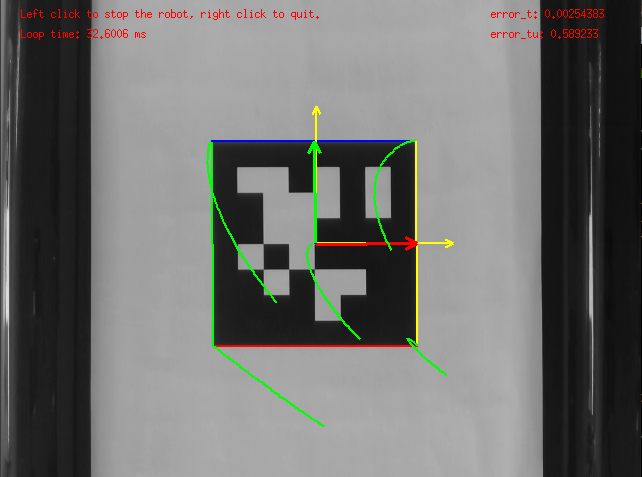

Use the left mouse click to enable the robot controller, and the right click to quit the binary.

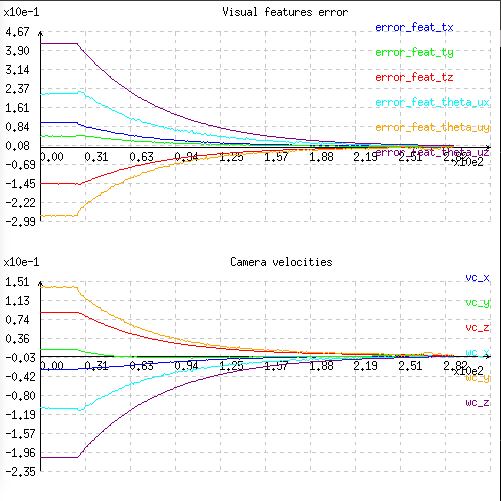

At this point the behaviour that you should observe is the following:

You can also activate an adaptive gain that will make the convergence faster:

$ ./servoFrankaPBVS --eMc ../../tutorial/calibration/eMc.yaml --plot --adaptive_gain

You can also make the robot start with zero velocity by enabling the task sequencing option:

$ ./servoFrankaPBVS --eMc ../../tutorial/calibration/eMc.yaml --plot --task_sequencing

And finally you can activate the adaptive gain and task sequencing:

$ ./servoFrankaPBVS --eMc ../../tutorial/calibration/eMc.yaml --plot --adaptive_gain --task_sequencing
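The invocations above differ only in their optional flags, and all of them fail if the extrinsic file is missing. The wrapper below is a sketch of one way to guard against that: `pbvs_command` is a hypothetical helper name, and it only prints the command line so that you can inspect it before running it next to the robot.

```shell
# pbvs_command EMC_FILE [EXTRA_FLAG...]
# Print the servoFrankaPBVS command line, refusing if the extrinsic file is missing.
# (Hypothetical helper; the flags themselves are those listed by --help.)
pbvs_command() {
  emc_file=$1
  shift
  if [ ! -f "$emc_file" ]; then
    echo "extrinsic file not found: $emc_file" >&2
    return 1
  fi
  cmd="./servoFrankaPBVS --eMc $emc_file --plot"
  for flag in "$@"; do
    cmd="$cmd $flag"
  done
  echo "$cmd"
}

# Example, run from example/servo-franka:
#   pbvs_command ../../tutorial/calibration/eMc.yaml --adaptive_gain --task_sequencing
```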

To learn more about adaptive gain and task sequencing see Tutorial: How to boost your visual servo control law.
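As a rough guide to what the two options do, the expressions below sketch the usual form of an adaptive gain and of a task-sequencing term. They are written for a generic task error e and omit the interaction matrix; the symbols λ_0 (gain at zero error), λ_∞ (gain for large errors), λ'_0 (slope parameter at zero error) and μ (decay rate) are notation chosen here for illustration, and the values used by the example are not given in this tutorial.

```latex
% Adaptive gain: with \lambda_0 > \lambda_\infty the gain grows as the error
% shrinks, which speeds up the end of the convergence.
\lambda(x) = (\lambda_0 - \lambda_\infty)\,
             \exp\!\left(-\frac{\lambda'_0}{\lambda_0 - \lambda_\infty}\,x\right)
             + \lambda_\infty, \qquad x = \lVert \mathbf{e} \rVert

% Task sequencing: the extra term cancels the command at t = 0 and then decays.
\mathbf{v}(t) = -\lambda\,\mathbf{e}(t) + \lambda\,\mathbf{e}(0)\,e^{-\mu t},
\qquad \mathbf{v}(0) = \mathbf{0}
```

The first expression gives λ(0) = λ_0 and tends to λ_∞ for large errors. The second shows why the robot starts from rest: at t = 0 the two terms cancel exactly, and the correction fades as t grows.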

You are now ready to see the Tutorial: IBVS with Panda 7-dof robot from Franka Emika that shows how to implement an image-based visual servoing scheme with the Franka robot. You may also follow Tutorial: Image-based visual servo that will give some hints on image-based visual servoing in simulation with a free flying camera.