Introduction

This tutorial shows how to detect one or more AprilTag markers with ViSP. To this end, we provide the vpDetectorAprilTag class, a wrapper over the AprilTag 3rd party library. There is no need to install this 3rd party library, since the AprilTag source code is embedded in ViSP.

The vpDetectorAprilTag class inherits from the vpDetectorBase class, a generic class dedicated to detection. For each detected tag, it gives access to the tag id and, in the image, to the polygon defined by the 4 corner coordinates of the tag, its bounding box and its center of gravity.

Moreover, the vpDetectorAprilTag class can estimate the 3D pose of each tag. To this end, the camera parameters as well as the size of the tag are required.

In the next sections you will find examples that show how to detect tags in a single image or in images acquired from a camera connected to your computer.

Note that all the material (source code and images) described in this tutorial is part of the ViSP source code and can be downloaded using the following command:

Print an AprilTag marker

We provide a ready-to-print 36h11 tag that is 9.5 cm by 9.5 cm [download].

If you prefer, you can also download pre-generated tag families directly from the AprilTag website:

In each archive you will find a PNG image of each tag, a PNG mosaic containing every tag and a ready-to-print PostScript file with one tag per page. If you want to print an individual tag, you can manually scale the corresponding PNG image in one of two ways:

- on Unix with ImageMagick, e.g.:

$ convert tag36_11_00000.png -scale 5000% tag36_11_00000_big.png

- or open the image with Gimp:

- from the pulldown menu, select Image > Scale Image

- set the unit and the size

- set the Interpolation mode to None

- click on the Scale button

- from the pulldown menu, select File > Export As

- save the image as a new PNG image, e.g., /tmp/tag36_11_00000-rescaled.png

- send the PNG file to your printer
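The scale factor passed to ImageMagick can be derived from the size you want on paper. The following is only a sketch: the helper names are hypothetical, the 10-pixel source width and the 300 dpi printer resolution used in the usage note are assumptions that you should check against your own PNG file and printer, and the factor is rounded down to a whole multiple of 100% so that every source pixel maps to the same whole number of output pixels.

```cpp
#include <cassert>
#include <cmath>

// Integer magnification (in percent) for `convert -scale <N>%`.
// The result is rounded down so the printed tag stays at or below targetCm
// (unless the source is already larger, in which case 100% is returned).
// srcPixels is the width of the source PNG in pixels (check your own file).
long scalePercentForPrint(int srcPixels, double targetCm, double dpi)
{
  assert(srcPixels > 0 && targetCm > 0.0 && dpi > 0.0);
  const double targetPixels = targetCm / 2.54 * dpi; // 1 inch = 2.54 cm
  long factor = static_cast<long>(std::floor(targetPixels / srcPixels));
  if (factor < 1) {
    factor = 1;
  }
  return factor * 100;
}

// Physical width in cm of the scaled image once printed at the given resolution.
double printedSizeCm(int srcPixels, long scalePercent, double dpi)
{
  assert(srcPixels > 0 && scalePercent > 0 && dpi > 0.0);
  return srcPixels * (scalePercent / 100.0) / dpi * 2.54;
}
```

With these assumed values, scalePercentForPrint(10, 9.5, 300.0) returns 11200, which corresponds to a printed width slightly below 9.5 cm. Whatever factor you use, measure the printed tag and give that measured side length, in meters, to the --tag_size option.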

AprilTag detection and pose estimation (single image)

The following example, also available in tutorial-apriltag-detector.cpp, detects tags in a single image.

#include <visp3/detection/vpDetectorAprilTag.h>

#include <visp3/gui/vpDisplayGDI.h>

#include <visp3/gui/vpDisplayOpenCV.h>

#include <visp3/gui/vpDisplayX.h>

#include <visp3/io/vpImageIo.h>

#ifdef VISP_HAVE_PUGIXML

#include <visp3/core/vpXmlParserCamera.h>

#endif

int main(int argc, const char **argv)

{

#if defined(VISP_HAVE_APRILTAG) && (defined(VISP_HAVE_X11) || defined(VISP_HAVE_GDI) || defined(VISP_HAVE_OPENCV))

std::string input_filename = "AprilTag.pgm";

double tagSize = 0.053;

float quad_decimate = 1.0;

int nThreads = 1;

std::string intrinsic_file = "";

std::string camera_name = "";

bool display_tag = false;

int color_id = -1;

unsigned int thickness = 2;

bool z_aligned = false;

for (int i = 1; i < argc; i++) {

if (std::string(argv[i]) == "--pose_method" && i + 1 < argc) {

} else if (std::string(argv[i]) == "--tag_size" && i + 1 < argc) {

tagSize = atof(argv[i + 1]);

} else if (std::string(argv[i]) == "--input" && i + 1 < argc) {

input_filename = std::string(argv[i + 1]);

} else if (std::string(argv[i]) == "--quad_decimate" && i + 1 < argc) {

quad_decimate = (float)atof(argv[i + 1]);

} else if (std::string(argv[i]) == "--nthreads" && i + 1 < argc) {

nThreads = atoi(argv[i + 1]);

} else if (std::string(argv[i]) == "--intrinsic" && i + 1 < argc) {

intrinsic_file = std::string(argv[i + 1]);

} else if (std::string(argv[i]) == "--camera_name" && i + 1 < argc) {

camera_name = std::string(argv[i + 1]);

} else if (std::string(argv[i]) == "--display_tag") {

display_tag = true;

} else if (std::string(argv[i]) == "--color" && i + 1 < argc) {

color_id = atoi(argv[i + 1]);

} else if (std::string(argv[i]) == "--thickness" && i + 1 < argc) {

thickness = (unsigned int)atoi(argv[i + 1]);

} else if (std::string(argv[i]) == "--tag_family" && i + 1 < argc) {

} else if (std::string(argv[i]) == "--z_aligned") {

z_aligned = true;

} else if (std::string(argv[i]) == "--help" || std::string(argv[i]) == "-h") {

std::cout << "Usage: " << argv[0]

<< " [--input <input file>] [--tag_size <tag_size in m>]"

" [--quad_decimate <quad_decimate>] [--nthreads <nb>]"

" [--intrinsic <intrinsic file>] [--camera_name <camera name>]"

" [--pose_method <method> (0: HOMOGRAPHY, 1: HOMOGRAPHY_VIRTUAL_VS, "

" 2: DEMENTHON_VIRTUAL_VS, 3: LAGRANGE_VIRTUAL_VS, "

" 4: BEST_RESIDUAL_VIRTUAL_VS, 5: HOMOGRAPHY_ORTHOGONAL_ITERATION) (default: 0)]"

" [--tag_family <family> (0: TAG_36h11, 1: TAG_36h10 (DEPRECATED), 2: TAG_36ARTOOLKIT (DEPRECATED),"

" 3: TAG_25h9, 4: TAG_25h7 (DEPRECATED), 5: TAG_16h5, 6: TAG_CIRCLE21h7, 7: TAG_CIRCLE49h12,"

" 8: TAG_CUSTOM48h12, 9: TAG_STANDARD41h12, 10: TAG_STANDARD52h13) (default: 0)]"

" [--display_tag] [--color <color_id (0, 1, ...)>]"

" [--thickness <thickness>] [--z_aligned]"

" [--help]"

<< std::endl;

return EXIT_SUCCESS;

}

}

#ifdef VISP_HAVE_PUGIXML

if (!intrinsic_file.empty() && !camera_name.empty())

#endif

std::cout << cam << std::endl;

std::cout << "poseEstimationMethod: " << poseEstimationMethod << std::endl;

std::cout << "tagFamily: " << tagFamily << std::endl;

std::cout << "nThreads : " << nThreads << std::endl;

std::cout << "Z aligned: " << z_aligned << std::endl;

try {

#ifdef VISP_HAVE_X11

#elif defined(VISP_HAVE_GDI)

#elif defined(VISP_HAVE_OPENCV)

#endif

std::vector<vpHomogeneousMatrix> cMo_vec;

detector.detect(I, tagSize, cam, cMo_vec);

std::stringstream ss;

ss << "Detection time: " << t << " ms for " << detector.getNbObjects() << " tags";

std::vector<vpImagePoint> p = detector.getPolygon(i);

std::size_t tag_id_pos = message.find("id: ");

if (tag_id_pos != std::string::npos) {

int tag_id = atoi(message.substr(tag_id_pos + 4).c_str());

ss.str("");

ss << "Tag id: " << tag_id;

}

for (size_t j = 0; j < p.size(); j++) {

std::ostringstream number;

number << j;

}

}

for (size_t i = 0; i < cMo_vec.size(); i++) {

}

std::cerr << "Catch an exception: " << e.getMessage() << std::endl;

}

return EXIT_SUCCESS;

#else

(void)argc;

(void)argv;

return 0;

#endif

}

The default behavior is to detect 36h11 markers in the AprilTag.pgm image, but the --tag_family <family> option allows considering other tag families. To list the available options, run:

$ ./tutorial-apriltag-detector --help

To detect multiple 36h11 tags in the provided AprilTag.pgm image, just run:

$ ./tutorial-apriltag-detector

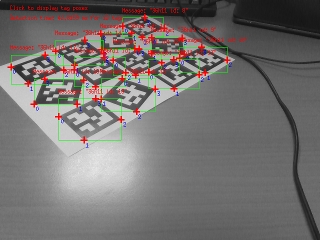

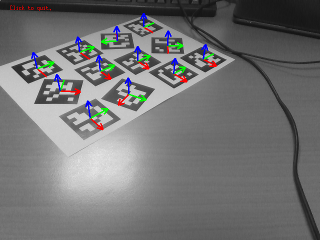

You will get the following result:

After a user click in the image, you will get the following image where the frames correspond to the 3D pose of each tag.

Now we explain the main lines of the source.

First we have to include the header corresponding to the vpDetectorAprilTag class that allows detecting one or multiple tags.

#include <visp3/detection/vpDetectorAprilTag.h>

Then in the main() function, before going further, we need to check whether ViSP was built with the AprilTag 3rd party. We also check whether ViSP is able to display images using X11, the Graphical Device Interface (GDI) under Windows, or OpenCV.

#if defined(VISP_HAVE_APRILTAG) && (defined(VISP_HAVE_X11) || defined(VISP_HAVE_GDI) || defined(VISP_HAVE_OPENCV))

After reading the input image AprilTag.pgm and creating a display device to visualize the image, a vpDetectorAprilTag detector is constructed with the requested tag family.

Then we apply some settings. In particular, the vpDetectorAprilTag::setAprilTagQuadDecimate() function can be used to decimate the input image in order to speed up the detection.
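To give an intuition of why decimation speeds up detection, here is a minimal sketch of image decimation by an integer factor: the detector then has factor x factor fewer pixels to scan. The GrayImage type and the decimate() function are hypothetical names written for this illustration, the plain subsampling shown here is an assumption, and it is not the code used inside the AprilTag library.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical minimal grayscale image, stored row by row.
struct GrayImage {
  std::size_t width = 0;
  std::size_t height = 0;
  std::vector<unsigned char> pixels; // width * height values
};

// Keep one pixel out of `factor` along each axis (plain subsampling).
GrayImage decimate(const GrayImage &in, std::size_t factor)
{
  assert(factor >= 1);
  assert(in.pixels.size() == in.width * in.height);
  GrayImage out;
  out.width = in.width / factor;
  out.height = in.height / factor;
  out.pixels.reserve(out.width * out.height);
  for (std::size_t y = 0; y < out.height; ++y) {
    for (std::size_t x = 0; x < out.width; ++x) {
      out.pixels.push_back(in.pixels[(y * factor) * in.width + (x * factor)]);
    }
  }
  return out;
}
```

A 640 x 480 image decimated by 2 becomes 320 x 240, that is 4 times fewer pixels to process. The price to pay is that small or distant tags cover fewer pixels and may no longer be detected.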

We are now ready to detect any 36h11 tags in the image. The vpDetectorAprilTag::detect(const vpImage<unsigned char> &) function detects any tags in the image, but since we also want to estimate the 3D pose of the tags, we instead call vpDetectorAprilTag::detect(const vpImage<unsigned char> &, const double, const vpCameraParameters &, std::vector<vpHomogeneousMatrix> &), which returns the pose of each tag as a vector of vpHomogeneousMatrix in the cMo_vec variable.

std::vector<vpHomogeneousMatrix> cMo_vec;

detector.detect(I, tagSize, cam, cMo_vec);

If one or more tags are detected, we can retrieve the number of detected tags in order to create a for loop over the tags.

For each tag, we can then get the location of the 4 points that define the polygon that contains the tag and the corresponding bounding box.

std::vector<vpImagePoint> p = detector.getPolygon(i);

And finally, we are also able to get the tag id by calling vpDetectorAprilTag::getMessage() and parsing the returned message.

std::size_t tag_id_pos = message.find("id: ");

if (tag_id_pos != std::string::npos) {

int tag_id = atoi(message.substr(tag_id_pos + 4).c_str());

ss.str("");

ss << "Tag id: " << tag_id;

}
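The parsing step above can be isolated in a small function that is easy to test on its own. This is a sketch: parseTagId() is a hypothetical helper, the example messages in the usage note are made up for illustration, and only the "id: " marker comes from the tutorial code.

```cpp
#include <cstddef>
#include <cstdlib>
#include <string>

// Returns the integer that follows "id: " in the message,
// or -1 when the marker is not present.
int parseTagId(const std::string &message)
{
  const std::string key = "id: ";
  const std::size_t pos = message.find(key);
  if (pos == std::string::npos) {
    return -1;
  }
  // atoi() stops at the first character that is not part of a number.
  return std::atoi(message.substr(pos + key.size()).c_str());
}
```

For instance, parseTagId("36h11 id: 7") returns 7 and parseTagId("no marker") returns -1. Returning -1 for a missing marker is a design choice of this sketch, which works because tag ids are not negative.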

Next in the code we display the 3D pose of each tag as an RGB frame.

for (size_t i = 0; i < cMo_vec.size(); i++) {

}

- Note

- To get absolute pose (not relative to a scale factor), you have to provide the real size of the marker (length of a marker side).

- To calibrate your camera, you can follow this tutorial: Tutorial: Camera intrinsic calibration

AprilTag detection and pose estimation (live camera)

This other example, also available in tutorial-apriltag-detector-live.cpp, shows how to couple the AprilTag detector to an image grabber in order to detect tags on each new image acquired by a camera connected to your computer.

#include <visp3/core/vpConfig.h>

#ifdef VISP_HAVE_MODULE_SENSOR

#include <visp3/sensor/vpV4l2Grabber.h>

#include <visp3/sensor/vp1394CMUGrabber.h>

#include <visp3/sensor/vp1394TwoGrabber.h>

#include <visp3/sensor/vpFlyCaptureGrabber.h>

#include <visp3/sensor/vpRealSense2.h>

#endif

#include <visp3/detection/vpDetectorAprilTag.h>

#include <visp3/gui/vpDisplayGDI.h>

#include <visp3/gui/vpDisplayOpenCV.h>

#include <visp3/gui/vpDisplayX.h>

#include <visp3/core/vpXmlParserCamera.h>

int main(int argc, const char **argv)

{

#if defined(VISP_HAVE_APRILTAG) && \

(defined(VISP_HAVE_V4L2) || defined(VISP_HAVE_DC1394) || defined(VISP_HAVE_CMU1394) || (VISP_HAVE_OPENCV_VERSION >= 0x020100) || \

defined(VISP_HAVE_FLYCAPTURE) || defined(VISP_HAVE_REALSENSE2) )

int opt_device = 0;

double tagSize = 0.053;

float quad_decimate = 1.0;

int nThreads = 1;

std::string intrinsic_file = "";

std::string camera_name = "";

bool display_tag = false;

int color_id = -1;

unsigned int thickness = 2;

bool align_frame = false;

#if !(defined(VISP_HAVE_X11) || defined(VISP_HAVE_GDI) || defined(VISP_HAVE_OPENCV))

bool display_off = true;

std::cout << "Warning: There is no 3rd party (X11, GDI or OpenCV) to display images..." << std::endl;

#else

bool display_off = false;

#endif

for (int i = 1; i < argc; i++) {

if (std::string(argv[i]) == "--pose_method" && i + 1 < argc) {

} else if (std::string(argv[i]) == "--tag_size" && i + 1 < argc) {

tagSize = atof(argv[i + 1]);

} else if (std::string(argv[i]) == "--camera_device" && i + 1 < argc) {

opt_device = atoi(argv[i + 1]);

} else if (std::string(argv[i]) == "--quad_decimate" && i + 1 < argc) {

quad_decimate = (float)atof(argv[i + 1]);

} else if (std::string(argv[i]) == "--nthreads" && i + 1 < argc) {

nThreads = atoi(argv[i + 1]);

} else if (std::string(argv[i]) == "--intrinsic" && i + 1 < argc) {

intrinsic_file = std::string(argv[i + 1]);

} else if (std::string(argv[i]) == "--camera_name" && i + 1 < argc) {

camera_name = std::string(argv[i + 1]);

} else if (std::string(argv[i]) == "--display_tag") {

display_tag = true;

} else if (std::string(argv[i]) == "--display_off") {

display_off = true;

} else if (std::string(argv[i]) == "--color" && i + 1 < argc) {

color_id = atoi(argv[i+1]);

} else if (std::string(argv[i]) == "--thickness" && i + 1 < argc) {

thickness = (unsigned int) atoi(argv[i+1]);

} else if (std::string(argv[i]) == "--tag_family" && i + 1 < argc) {

} else if (std::string(argv[i]) == "--z_aligned") {

align_frame = true;

}

else if (std::string(argv[i]) == "--help" || std::string(argv[i]) == "-h") {

std::cout << "Usage: " << argv[0]

<< " [--camera_device <camera device> (default: 0)]"

<< " [--tag_size <tag_size in m> (default: 0.053)]"

" [--quad_decimate <quad_decimate> (default: 1)]"

" [--nthreads <nb> (default: 1)]"

" [--intrinsic <intrinsic file> (default: empty)]"

" [--camera_name <camera name> (default: empty)]"

" [--pose_method <method> (0: HOMOGRAPHY, 1: HOMOGRAPHY_VIRTUAL_VS, "

" 2: DEMENTHON_VIRTUAL_VS, 3: LAGRANGE_VIRTUAL_VS, "

" 4: BEST_RESIDUAL_VIRTUAL_VS, 5: HOMOGRAPHY_ORTHOGONAL_ITERATION) (default: 0)]"

" [--tag_family <family> (0: TAG_36h11, 1: TAG_36h10 (DEPRECATED), 2: TAG_36ARTOOLKIT (DEPRECATED),"

" 3: TAG_25h9, 4: TAG_25h7 (DEPRECATED), 5: TAG_16h5, 6: TAG_CIRCLE21h7, 7: TAG_CIRCLE49h12,"

" 8: TAG_CUSTOM48h12, 9: TAG_STANDARD41h12, 10: TAG_STANDARD52h13) (default: 0)]"

" [--display_tag] [--z_aligned]";

#if (defined(VISP_HAVE_X11) || defined(VISP_HAVE_GDI) || defined(VISP_HAVE_OPENCV))

std::cout << " [--display_off] [--color <color id>] [--thickness <line thickness>]";

#endif

std::cout << " [--help]" << std::endl;

return EXIT_SUCCESS;

}

}

try {

#ifdef VISP_HAVE_PUGIXML

if (!intrinsic_file.empty() && !camera_name.empty())

#endif

#if defined(VISP_HAVE_V4L2)

std::ostringstream device;

device << "/dev/video" << opt_device;

std::cout << "Use Video 4 Linux grabber on device " << device.str() << std::endl;

#elif defined(VISP_HAVE_DC1394)

(void)opt_device;

std::cout << "Use DC1394 grabber" << std::endl;

#elif defined(VISP_HAVE_CMU1394)

(void)opt_device;

std::cout << "Use CMU1394 grabber" << std::endl;

#elif defined(VISP_HAVE_FLYCAPTURE)

(void)opt_device;

std::cout << "Use FlyCapture grabber" << std::endl;

#elif defined(VISP_HAVE_REALSENSE2)

(void)opt_device;

std::cout << "Use Realsense 2 grabber" << std::endl;

rs2::config config;

config.disable_stream(RS2_STREAM_DEPTH);

config.disable_stream(RS2_STREAM_INFRARED);

config.enable_stream(RS2_STREAM_COLOR, 640, 480, RS2_FORMAT_RGBA8, 30);

std::cout << "Read camera parameters from Realsense device" << std::endl;

#elif defined(VISP_HAVE_OPENCV)

std::cout << "Use OpenCV grabber on device " << opt_device << std::endl;

cv::VideoCapture g(opt_device);

if (!g.isOpened()) {

std::cout << "Failed to open the camera" << std::endl;

return -1;

}

cv::Mat frame;

g >> frame;

#endif

std::cout << cam << std::endl;

std::cout << "poseEstimationMethod: " << poseEstimationMethod << std::endl;

std::cout << "tagFamily: " << tagFamily << std::endl;

std::cout << "nThreads : " << nThreads << std::endl;

std::cout << "Z aligned: " << align_frame << std::endl;

if (! display_off) {

#ifdef VISP_HAVE_X11

#elif defined(VISP_HAVE_GDI)

#elif defined(VISP_HAVE_OPENCV)

#endif

}

std::vector<double> time_vec;

for (;;) {

#if defined(VISP_HAVE_V4L2) || defined(VISP_HAVE_DC1394) || defined(VISP_HAVE_CMU1394) || defined(VISP_HAVE_FLYCAPTURE) || defined(VISP_HAVE_REALSENSE2)

#elif defined(VISP_HAVE_OPENCV)

g >> frame;

#endif

std::vector<vpHomogeneousMatrix> cMo_vec;

detector.detect(I, tagSize, cam, cMo_vec);

time_vec.push_back(t);

std::stringstream ss;

ss << "Detection time: " << t << " ms for " << detector.getNbObjects() << " tags";

for (size_t i = 0; i < cMo_vec.size(); i++) {

}

break;

}

std::cout << "Benchmark computation time" << std::endl;

if (! display_off)

delete d;

std::cerr << "Catch an exception: " << e.getMessage() << std::endl;

}

return EXIT_SUCCESS;

#else

(void)argc;

(void)argv;

#ifndef VISP_HAVE_APRILTAG

std::cout << "Enable Apriltag support, configure and build ViSP to run this tutorial" << std::endl;

#else

std::cout << "Install a 3rd party dedicated to frame grabbing (dc1394, cmu1394, v4l2, OpenCV, FlyCapture, Realsense2), configure and build ViSP again to use this example" << std::endl;

#endif

#endif

return EXIT_SUCCESS;

}

The usage of this example is similar to the previous one:

- with option --tag_family you select the kind of tag that you want to detect.

- if more than one camera is connected to your computer, with option --camera_device you can select which camera to use. The first camera that is found has number 0.

To detect 36h11 tags on images acquired by a second camera connected to your computer, use:

$ ./tutorial-apriltag-detector-live --tag_family 0 --camera_device 1

The source code of this example is very similar to the previous one except that here we use camera framegrabber devices (see Tutorial: Image frame grabbing). Depending on how ViSP was built, different grabbers may be used, for example:

- If ViSP was built with Video For Linux (V4L2) support, available for example on Fedora or Ubuntu distributions, the VISP_HAVE_V4L2 macro is defined. In that case, images coming from a USB camera are acquired using the vpV4l2Grabber class.

- If ViSP wasn't built with V4L2 support but with OpenCV, we use the cv::VideoCapture class to grab the images. Notice that when images are acquired with OpenCV there is an additional conversion from cv::Mat to vpImage.

#if defined(VISP_HAVE_V4L2)

std::ostringstream device;

device << "/dev/video" << opt_device;

std::cout << "Use Video 4 Linux grabber on device " << device.str() << std::endl;

#elif defined(VISP_HAVE_DC1394)

(void)opt_device;

std::cout << "Use DC1394 grabber" << std::endl;

#elif defined(VISP_HAVE_CMU1394)

(void)opt_device;

std::cout << "Use CMU1394 grabber" << std::endl;

#elif defined(VISP_HAVE_FLYCAPTURE)

(void)opt_device;

std::cout << "Use FlyCapture grabber" << std::endl;

#elif defined(VISP_HAVE_REALSENSE2)

(void)opt_device;

std::cout << "Use Realsense 2 grabber" << std::endl;

rs2::config config;

config.disable_stream(RS2_STREAM_DEPTH);

config.disable_stream(RS2_STREAM_INFRARED);

config.enable_stream(RS2_STREAM_COLOR, 640, 480, RS2_FORMAT_RGBA8, 30);

std::cout << "Read camera parameters from Realsense device" << std::endl;

#elif defined(VISP_HAVE_OPENCV)

std::cout << "Use OpenCV grabber on device " << opt_device << std::endl;

cv::VideoCapture g(opt_device);

if (!g.isOpened()) {

std::cout << "Failed to open the camera" << std::endl;

return -1;

}

cv::Mat frame;

g >> frame;

#endif

Then in the acquisition loop, at each iteration we acquire a new image:

#if defined(VISP_HAVE_V4L2) || defined(VISP_HAVE_DC1394) || defined(VISP_HAVE_CMU1394) || defined(VISP_HAVE_FLYCAPTURE) || defined(VISP_HAVE_REALSENSE2)

#elif defined(VISP_HAVE_OPENCV)

g >> frame;

#endif

This new image is then given as input to the AprilTag detector.

Improve pose estimation accuracy using a RGB-D camera

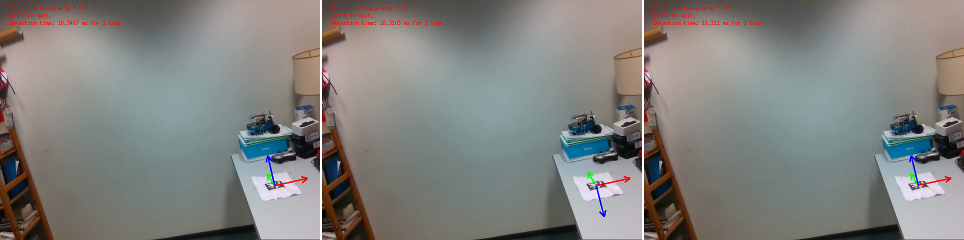

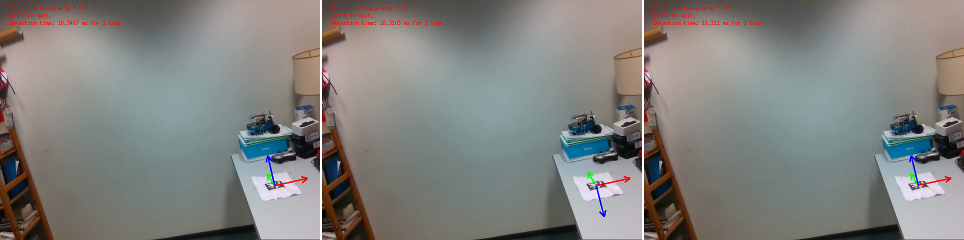

When the tag is small in the image or when the tag corner locations are poorly extracted, you may observe z-axis flipping if you carefully analyse the pose of the tag. The following images illustrate this behavior.

These are 3 successive images acquired by the Realsense D435 color camera with the tag pose in overlay. In the middle image, you can see the z-axis flipping phenomenon, where the z-axis in blue is not oriented as expected.

The pose is computed from the 4 tag corner locations, assuming a planar object, and this behavior is inherent to the planar pose estimation ambiguity, see Dementhon.
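One simple way to notice such a flip in a sequence is to compare the direction of the tag z-axis between two successive poses. The sketch below is a hypothetical heuristic written for this tutorial, not a ViSP function: the Rotation type, the function names and the angle threshold are all assumptions, and the z-axis is taken as the third column of the rotation matrix.

```cpp
#include <cassert>
#include <cmath>

struct Rotation { double m[3][3]; }; // rotation part of a pose

// Angle in radians between the z-axes (third columns) of two rotations.
double zAxisAngle(const Rotation &a, const Rotation &b)
{
  double dot = 0.0;
  for (int r = 0; r < 3; ++r) {
    dot += a.m[r][2] * b.m[r][2];
  }
  // Clamp against rounding errors before calling acos()
  if (dot > 1.0) {
    dot = 1.0;
  }
  if (dot < -1.0) {
    dot = -1.0;
  }
  return std::acos(dot);
}

// True when the z-axis moved by more than maxAngleRad between two frames.
bool looksLikeFlip(const Rotation &previous, const Rotation &current, double maxAngleRad)
{
  assert(maxAngleRad > 0.0);
  return zAxisAngle(previous, current) > maxAngleRad;
}
```

The threshold has to be tuned to the expected motion between two frames: a fast but real rotation of the tag would also trigger this test, so it only helps to flag suspicious poses and does not resolve the ambiguity.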

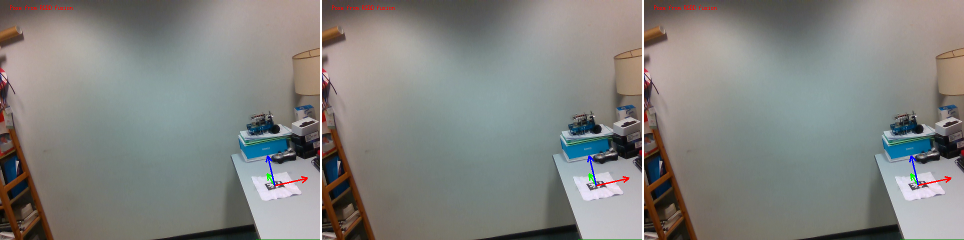

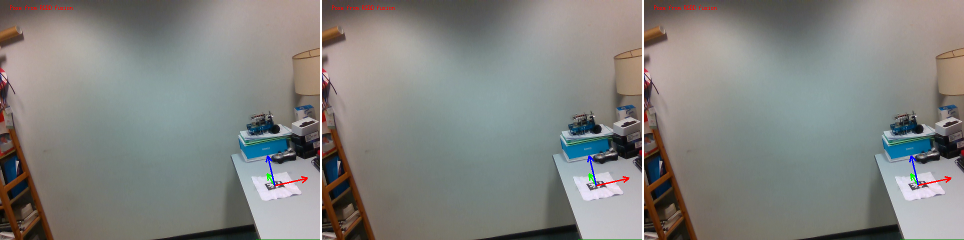

To lift this ambiguity, we propose to use the depth map of an RGB-D sensor in order to bring additional 3D information. The pose estimation can then be seen as a 3D-3D optimization process implemented in vpPose::computePlanarObjectPoseFromRGBD(). As shown in the following images, using this method with a Realsense D435 sensor overcomes this ambiguity.

These are the same 3 successive images acquired by the Realsense color camera with the resulting pose in overlay, but here we used the depth map aligned with the color image as input to vpPose::computePlanarObjectPoseFromRGBD(). As you can see, in the 3 images the z-axis flipping phenomenon no longer occurs.

An example that shows how to use this function is given in tutorial-apriltag-detector-live-rgbd-realsense.cpp. In this example we use a Realsense D435 or equivalent RGB-D sensor, but it could be adapted to any other RGB-D sensor as long as you can align the depth map and the color image.

std::vector<std::vector<vpImagePoint> > tags_corners = detector.getPolygon();

std::vector<int> tags_id = detector.getTagsId();

std::map<int, double> tags_size;

tags_size[-1] = tagSize;

std::vector<std::vector<vpPoint> > tags_points3d = detector.getTagsPoints3D(tags_id, tags_size);

for (size_t i = 0; i < tags_corners.size(); i++) {

double confidence_index;

if (confidence_index > 0.5) {

}

else if (confidence_index > 0.25) {

}

else {

}

std::stringstream ss;

ss << "Tag id " << tags_id[i] << " confidence: " << confidence_index;

}

}
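The three branches on confidence_index in the code above can be sketched as a small classification function. The thresholds 0.5 and 0.25 come from the tutorial code, while the ConfidenceLevel enumeration and the function name are hypothetical names chosen for this illustration.

```cpp
// Hypothetical labels for the three branches of the tutorial code.
enum class ConfidenceLevel { High, Medium, Low };

// Map a confidence index to one of the three levels.
ConfidenceLevel classifyConfidence(double confidenceIndex)
{
  if (confidenceIndex > 0.5) {
    return ConfidenceLevel::High;
  }
  else if (confidenceIndex > 0.25) {
    return ConfidenceLevel::Medium;
  }
  else {
    return ConfidenceLevel::Low;
  }
}
```

Since the comparisons are strict, a value of exactly 0.5 falls in the medium level and a value of exactly 0.25 in the low level. Such a level can be used, for example, to choose the color of the frame displayed for each tag.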

Next tutorial

You are now ready to see the Tutorial: Bar code detection, which illustrates how to detect QR codes in an image.