|

Visual Servoing Platform

version 3.0.1

|


This tutorial describes model-based tracking of objects using multiple camera views simultaneously. The object is tracked in the images seen by a set of cameras, and its 3D localization (i.e., the object pose expressed in a reference camera frame) is provided when calibrated cameras are used.

The software tracks a markerless object using knowledge of its CAD model. The objects considered have to be modeled by segments, circles or cylinders. The model of the object can be defined in vrml format (except for circles) or in cao format.

The next section highlights the different versions of the markerless multi-view model-based trackers that have been developed. The multi-view model-based tracker can consider moving-edges behind the lines of the model (with the vpMbEdgeMultiTracker class). It can also consider keypoints that are detected and tracked on each visible face of the model (with the vpMbKltMultiTracker class). Finally, the tracker can handle moving-edges and keypoints in a hybrid scheme (with the vpMbEdgeKltMultiTracker class).

The multi-view model-based edge tracker implemented in vpMbEdgeMultiTracker is appropriate for tracking textureless objects, whereas the multi-view model-based keypoint tracker implemented in vpMbKltMultiTracker is designed for textured objects whose edges are not clearly visible. The multi-view model-based hybrid tracker implemented in vpMbEdgeKltMultiTracker is appropriate for tracking textured objects with visible edges.

These classes track the same object as seen by two or more cameras. The main advantages of this configuration with respect to the mono-camera case (see Tutorial: Markerless model-based tracking) concern:

In order to achieve this, the cameras must be calibrated and the transformation matrix between each camera and the reference camera must be known.

In the following sections, we consider the tracking of a tea box modeled in cao format and seen by a stereo camera. To illustrate the behavior of the tracker, the following video shows the tracking obtained with vpMbEdgeMultiTracker. In this example, the images were captured by the fixed cameras located on the head of the Romeo humanoid robot.

This other video shows the behavior of the hybrid tracking performed with vpMbEdgeKltMultiTracker, where the features are the tea box edges and the keypoints on the visible faces.

The next sections show how to adapt your code to use multiple cameras with the model-based tracker. Since only the new methods dedicated to multi-view tracking are presented, we highly recommend following Tutorial: Markerless model-based tracking first, in order to become familiar with the model-based tracking concepts, the different trackers available in ViSP (the edge tracker vpMbEdgeTracker, the klt feature point tracker vpMbKltTracker and the hybrid tracker vpMbEdgeKltTracker) and the loading of configuration files.

Note that all the material (source code and video) described in this tutorial is part of the ViSP source code and can be downloaded using the following command:

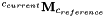

The model-based trackers available for multi-view tracking rely on the same trackers as in the monocular case:

The following class diagram offers an overview of the hierarchy between the different classes:

The vpMbEdgeMultiTracker class inherits from the vpMbEdgeTracker class, the vpMbKltMultiTracker class inherits from the vpMbKltTracker class, and the vpMbEdgeKltMultiTracker class inherits from both the vpMbEdgeMultiTracker and vpMbKltMultiTracker classes. This design makes it easy to extend the model-based tracker to multiple cameras while preserving the behavior of the monocular configuration. More precisely, only the model-based edge and model-based klt trackers should behave identically; the hybrid multi-view class has a slightly different implementation that leads to minor differences compared to vpMbEdgeKltTracker.

As you will see later, the principal methods of the parent class remain accessible and are used for single-view tracking. Many new overloaded methods have been introduced to deal with the different camera configurations (single camera, stereo cameras and multiple cameras).

Each tracker is stored in a map whose key is the name of the camera whose images the tracker processes. By default, the camera names are set to:

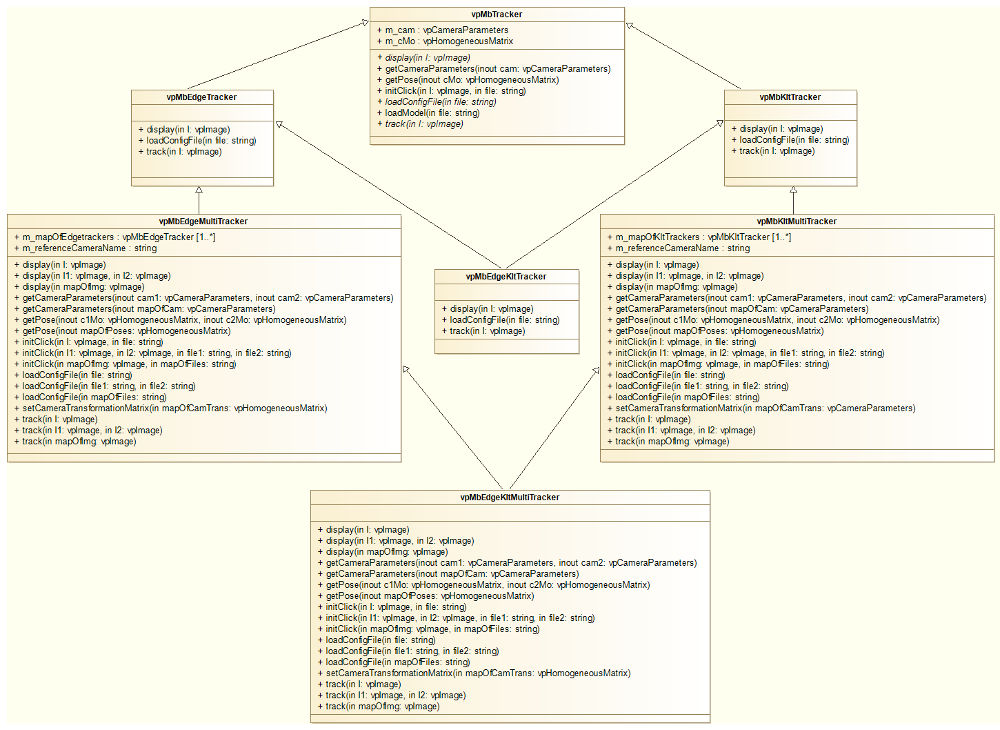

To deal with multiple cameras, the virtual visual servoing control law concatenates all the interaction matrices and residual vectors and expresses them in a single reference camera frame in order to compute the reference camera velocity. We therefore have to know the transformation matrix between each camera and the reference camera.
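The concatenation described above can be written out as follows. This is a sketch under stated assumptions rather than the exact formulation used in ViSP: $\mathbf{L}_i$ and $\mathbf{e}_i$ denote the interaction matrix and residual vector of camera $i$, $\lambda$ is a positive gain, ${}^{c_i}\mathbf{V}_{c_1}$ is the velocity twist matrix built from the rotation $\mathbf{R}_i$ and translation $\mathbf{t}_i$ of ${}^{c_i}\mathbf{M}_{c_1}$, and any robust weighting of the residuals is omitted. None of these symbols appear in the original text.

```latex
\mathbf{v}_{c_1} = -\lambda
\begin{bmatrix}
  \mathbf{L}_1 \, {}^{c_1}\mathbf{V}_{c_1} \\
  \vdots \\
  \mathbf{L}_n \, {}^{c_n}\mathbf{V}_{c_1}
\end{bmatrix}^{+}
\begin{bmatrix}
  \mathbf{e}_1 \\ \vdots \\ \mathbf{e}_n
\end{bmatrix},
\qquad
{}^{c_i}\mathbf{V}_{c_1} =
\begin{bmatrix}
  \mathbf{R}_i & [\mathbf{t}_i]_\times \mathbf{R}_i \\
  \mathbf{0}_{3 \times 3} & \mathbf{R}_i
\end{bmatrix},
\qquad
{}^{c_1}\mathbf{V}_{c_1} = \mathbf{I}_6
```

Each block row uses $\mathbf{v}_{c_i} = {}^{c_i}\mathbf{V}_{c_1} \mathbf{v}_{c_1}$ to express the velocity of camera $i$ as a function of the reference camera velocity, which is why every camera-to-reference transformation must be known.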

For example, if the reference camera is "Camera1" ($c_1$), we need the following information: ${}^{c_2}\mathbf{M}_{c_1}, {}^{c_3}\mathbf{M}_{c_1}, \ldots, {}^{c_n}\mathbf{M}_{c_1}$.
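To make the role of these transformations concrete, the sketch below derives the object pose in every camera frame from the pose in the reference camera frame, using ${}^{c_i}\mathbf{M}_o = {}^{c_i}\mathbf{M}_{c_1} \, {}^{c_1}\mathbf{M}_o$. It relies only on the C++ standard library: `Mat4`, `compose`, `fromTranslation` and `posesFromReference` are hypothetical helpers written for this illustration (a real application would use vpHomogeneousMatrix), and the map layout keyed by camera name simply mirrors the convention described above.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <map>
#include <string>

// Minimal 4x4 homogeneous matrix; a hypothetical stand-in for vpHomogeneousMatrix.
using Mat4 = std::array<std::array<double, 4>, 4>;

// Matrix product a * b.
Mat4 compose(const Mat4 &a, const Mat4 &b)
{
  Mat4 r{}; // value-initialized: all entries are zero
  for (std::size_t i = 0; i < 4; ++i)
    for (std::size_t j = 0; j < 4; ++j)
      for (std::size_t k = 0; k < 4; ++k)
        r[i][j] += a[i][k] * b[k][j];
  return r;
}

// Pure translation, used here only to build illustrative inputs.
Mat4 fromTranslation(double tx, double ty, double tz)
{
  Mat4 m{};
  for (std::size_t i = 0; i < 4; ++i)
    m[i][i] = 1.0;
  m[0][3] = tx;
  m[1][3] = ty;
  m[2][3] = tz;
  return m;
}

// Given the reference pose c1Mo and the map {camera name -> ciMc1},
// return the map {camera name -> ciMo} with ciMo = ciMc1 * c1Mo.
std::map<std::string, Mat4> posesFromReference(const Mat4 &c1Mo,
                                               const std::map<std::string, Mat4> &mapOfCamTrans)
{
  assert(!mapOfCamTrans.empty());
  std::map<std::string, Mat4> mapOfPoses;
  for (const auto &entry : mapOfCamTrans)
    mapOfPoses[entry.first] = compose(entry.second, c1Mo);
  return mapOfPoses;
}
```

With the identity stored for "Camera1" and ${}^{c_2}\mathbf{M}_{c_1}$ stored for "Camera2", the returned map contains the reference pose unchanged and the pose seen from the second camera.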

Each essential method used to initialize the tracker and to run the tracking has three signatures, to ease the call according to the three working modes: monocular, stereo and multiple cameras.

The following table sums up how to call the different methods based on the camera configuration for the main functions.

| Method calling example: | Monocular case | Stereo case | Multiple cameras case | Remarks |
|---|---|---|---|---|
| Construct a model-based edge tracker: | vpMbEdgeMultiTracker tracker | vpMbEdgeMultiTracker tracker(2) | vpMbEdgeMultiTracker tracker(5) | The default constructor corresponds to the monocular configuration. |
| Load a configuration file: | tracker.loadConfigFile("config.xml") | tracker.loadConfigFile("config1.xml", "config2.xml") | tracker.loadConfigFile(mapOfConfigFiles) | Each tracker can have different parameters (intrinsic parameters, visibility angles, etc.). |
| Load a model file: | tracker.loadModel("model.cao") | tracker.loadModel("model.cao") | tracker.loadModel("model.cao") | All the trackers must use the same 3D model. |
| Get the intrinsic camera parameters: | tracker.getCameraParameters(cam) | tracker.getCameraParameters(cam1, cam2) | tracker.getCameraParameters(mapOfCam) | |
| Set the transformation matrix between each camera and the reference one: | | tracker.setCameraTransformationMatrix(mapOfCamTrans) | tracker.setCameraTransformationMatrix(mapOfCamTrans) | For the reference camera, the identity homogeneous matrix must be set. |
| Enable the display of the features: | tracker.setDisplayFeatures(true) | tracker.setDisplayFeatures(true) | tracker.setDisplayFeatures(true) | This is a general parameter. |
| Initialize the pose by click: | tracker.initClick(I, "f_init.init") | tracker.initClick(I1, I2, "f_init1.init", "f_init2.init") | tracker.initClick(mapOfImg, mapOfInitFiles) | If the transformation matrices between the cameras have been set, some init files can be omitted as long as the reference camera has an init file. |
| Track the object: | tracker.track(I) | tracker.track(I1, I2) | tracker.track(mapOfImg) | |
| Get the pose: | tracker.getPose(cMo) | tracker.getPose(c1Mo, c2Mo) | tracker.getPose(mapOfPoses) | tracker.getPose(cMo) will return the pose for the reference camera in the multiple cameras configurations. |
| Display the model: | tracker.display(I, cMo, cam, ...) | tracker.display(I1, I2, c1Mo, c2Mo, cam1, cam2, ...) | tracker.display(mapOfImg, mapOfPoses, mapOfCam) | |
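The three-signature pattern summarized in the table can be illustrated with a small standalone sketch in which the monocular and stereo overloads simply forward to the map-based one. This is not ViSP's implementation: `Image` and `MultiViewTrackerSketch` are hypothetical names, `track` only counts the images it would process, and the "Camera<i>" naming is an assumption generalized from the names "Camera1" and "Camera2" used in this tutorial.

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <string>
#include <vector>

// Hypothetical stand-in for an image type such as vpImage<unsigned char>.
struct Image {
  int id;
};

// Hypothetical skeleton showing one method exposed with three signatures.
class MultiViewTrackerSketch
{
public:
  explicit MultiViewTrackerSketch(std::size_t nbCameras = 1)
  {
    assert(nbCameras >= 1);
    // Assumed naming pattern: "Camera1", "Camera2", ...
    for (std::size_t i = 1; i <= nbCameras; ++i)
      m_names.push_back("Camera" + std::to_string(i));
  }

  // Monocular signature: forwards the image under the first camera name.
  std::size_t track(const Image &I)
  {
    std::map<std::string, const Image *> mapOfImg;
    mapOfImg[m_names[0]] = &I;
    return track(mapOfImg);
  }

  // Stereo signature: forwards both images under the first two camera names.
  std::size_t track(const Image &I1, const Image &I2)
  {
    assert(m_names.size() >= 2);
    std::map<std::string, const Image *> mapOfImg;
    mapOfImg[m_names[0]] = &I1;
    mapOfImg[m_names[1]] = &I2;
    return track(mapOfImg);
  }

  // Generic signature: one image per camera name.
  // Returns the number of known cameras for which an image was supplied.
  std::size_t track(const std::map<std::string, const Image *> &mapOfImg)
  {
    std::size_t processed = 0;
    for (const std::string &name : m_names) {
      auto it = mapOfImg.find(name);
      if (it != mapOfImg.end() && it->second != nullptr)
        ++processed; // a real tracker would process the image here
    }
    return processed;
  }

  const std::vector<std::string> &cameraNames() const { return m_names; }

private:
  std::vector<std::string> m_names;
};
```

Routing the convenience overloads through the map-based version keeps a single code path for the actual work, which is one plausible way to make the behavior independent of the calling style.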

The following example comes from tutorial-mb-tracker-stereo.cpp and tracks a tea box modeled in cao format using one of the three multi-view markerless trackers implemented in ViSP. In this example we consider a stereo configuration.

Once built, to choose which tracker to use, run the binary with the following argument:

The source code is the following:

The previous source code shows how to implement model-based tracking on stereo images using the standard procedure to configure the tracker:

Please refer to Tutorial: Markerless model-based tracking for explanations of the configuration parameters and for information on how to model an object in a ViSP-compatible format.

To test the three kinds of trackers, only the vpMbEdgeKltMultiTracker.h header is required, as the others (vpMbEdgeMultiTracker.h and vpMbKltMultiTracker.h) are already included by the hybrid tracker header.

We declare two images for the left and right camera views.

To construct a stereo tracker, we have to specify the desired number of cameras (2 in our case) as the argument of the tracker constructor:

All the configuration parameters for the tracker are stored in xml configuration files. To load the different files, we use:

The following code is used in order to retrieve the intrinsic camera parameters:

To load the 3D object model, we use:

We can also use the following setting that enables the display of the features used during the tracking:

We have to set the transformation matrices between the cameras and the reference camera to be able to compute the control law in the reference camera frame. In the code, the left camera, named "Camera1", is the reference camera. For the right camera, named "Camera2", we have to set the transformation ${}^{c_{right}}\mathbf{M}_{c_{left}}$. This transformation is read from the cRightMcLeft.txt file. Since the left and right cameras do not move, this transformation is constant and does not need to be updated in the tracking loop:
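The following standalone sketch illustrates this step without depending on ViSP: it parses a 4x4 homogeneous matrix from a text stream and builds the map passed to the tracker, with the identity for the reference camera as required. It rests on several assumptions: the text layout (16 whitespace-separated values in row-major order) is a guess, since the content of cRightMcLeft.txt is not shown here, and `Mat4`, `readHomogeneous` and `makeStereoTransformations` are hypothetical helpers standing in for vpHomogeneousMatrix and the real loading code.

```cpp
#include <array>
#include <cmath>
#include <cstddef>
#include <istream>
#include <map>
#include <sstream>
#include <string>

// Minimal 4x4 homogeneous matrix; a hypothetical stand-in for vpHomogeneousMatrix.
using Mat4 = std::array<std::array<double, 4>, 4>;

Mat4 identity()
{
  Mat4 m{}; // value-initialized: all entries are zero
  for (std::size_t i = 0; i < 4; ++i)
    m[i][i] = 1.0;
  return m;
}

// Reads 16 whitespace-separated values in row-major order (assumed layout).
// Returns false if the input is too short, not numeric, or if the last row
// is not (0, 0, 0, 1).
bool readHomogeneous(std::istream &in, Mat4 &M)
{
  for (auto &row : M)
    for (double &value : row)
      if (!(in >> value))
        return false;
  const double tol = 1e-9;
  return std::fabs(M[3][0]) < tol && std::fabs(M[3][1]) < tol &&
         std::fabs(M[3][2]) < tol && std::fabs(M[3][3] - 1.0) < tol;
}

// Builds the camera-name -> transformation map for a stereo rig whose
// reference camera is "Camera1": identity for the reference, c2Mc1 for the other.
std::map<std::string, Mat4> makeStereoTransformations(const Mat4 &c2Mc1)
{
  std::map<std::string, Mat4> mapOfCamTrans;
  mapOfCamTrans["Camera1"] = identity();
  mapOfCamTrans["Camera2"] = c2Mc1;
  return mapOfCamTrans;
}
```

In an application, the stream would typically be a `std::ifstream` opened on the transformation file, and the resulting map would be given to tracker.setCameraTransformationMatrix(mapOfCamTrans) once, before the tracking loop.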

The initial pose is set by clicking on specific points in the image:

The poses for the left and right views have to be declared:

The tracking is done by:

The poses for each camera are retrieved with:

To display the model with the estimated pose, we use:

Finally, do not forget to delete the pointers:

The principle remains the same as with static cameras. You have to supply the camera transformation matrices to the tracker each time the cameras move, before calling the track method:

This information can be obtained, for example, from the robot kinematics or from different kinds of sensors.
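As an illustration of the kinematics case, the sketch below recomputes the camera-to-reference transformation from the poses of both cameras expressed in a common frame, using ${}^{c_2}\mathbf{M}_{c_1} = ({}^{h}\mathbf{M}_{c_2})^{-1} \, {}^{h}\mathbf{M}_{c_1}$. The common frame $h$ (for instance a robot head frame) and the helpers `Mat4`, `identity`, `compose`, `inverseRigid` and `cameraToReference` are assumptions introduced for this example and are not part of the tutorial code. The result would be stored in the transformation map and passed to the tracker at each iteration, before calling the track method.

```cpp
#include <array>
#include <cassert>
#include <cstddef>

// Minimal 4x4 homogeneous matrix; a hypothetical stand-in for vpHomogeneousMatrix.
using Mat4 = std::array<std::array<double, 4>, 4>;

Mat4 identity()
{
  Mat4 m{}; // value-initialized: all entries are zero
  for (std::size_t i = 0; i < 4; ++i)
    m[i][i] = 1.0;
  return m;
}

// Matrix product a * b.
Mat4 compose(const Mat4 &a, const Mat4 &b)
{
  Mat4 r{};
  for (std::size_t i = 0; i < 4; ++i)
    for (std::size_t j = 0; j < 4; ++j)
      for (std::size_t k = 0; k < 4; ++k)
        r[i][j] += a[i][k] * b[k][j];
  return r;
}

// Inverse of a rigid transformation [R t; 0 1], computed as [R^T  -R^T t; 0 1].
Mat4 inverseRigid(const Mat4 &M)
{
  assert(M[3][3] == 1.0);
  Mat4 inv = identity();
  for (std::size_t i = 0; i < 3; ++i)
    for (std::size_t j = 0; j < 3; ++j)
      inv[i][j] = M[j][i]; // transpose of the rotation part
  for (std::size_t i = 0; i < 3; ++i)
    inv[i][3] = -(inv[i][0] * M[0][3] + inv[i][1] * M[1][3] + inv[i][2] * M[2][3]);
  return inv;
}

// hMc1 and hMc2 are the poses of the two camera frames in a common frame h
// (hypothetical, e.g. obtained from the robot kinematics).
// Returns c2Mc1 = inverse(hMc2) * hMc1.
Mat4 cameraToReference(const Mat4 &hMc2, const Mat4 &hMc1)
{
  return compose(inverseRigid(hMc2), hMc1);
}
```

Because the closed-form inverse is only valid for rigid transformations, the input matrices are expected to have an orthonormal rotation part.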

The following video shows how the result of stereo hybrid model-based tracking, based on object edges and keypoints located on the visible faces, can be used to servo the eyes of the Romeo humanoid robot so that they gaze at the object. Here the images were captured by Romeo's eyes, which are moving.

You are now ready to see the next Tutorial: Template tracking.