Visual Servoing Platform

version 3.6.1 under development (2025-02-28)

Note that all the material (source code and data) described in this tutorial is part of the ViSP source code (in the tutorial/computer-vision folder) and can be found at https://github.com/lagadic/visp/tree/master/tutorial/computer-vision.

This tutorial, whose source code is available in tutorial-pose-from-planar-object.cpp, shows a way to estimate the pose (cMo) of a planar object by exploiting the color and depth images of an RGB-D camera together with the 3D model of the object.

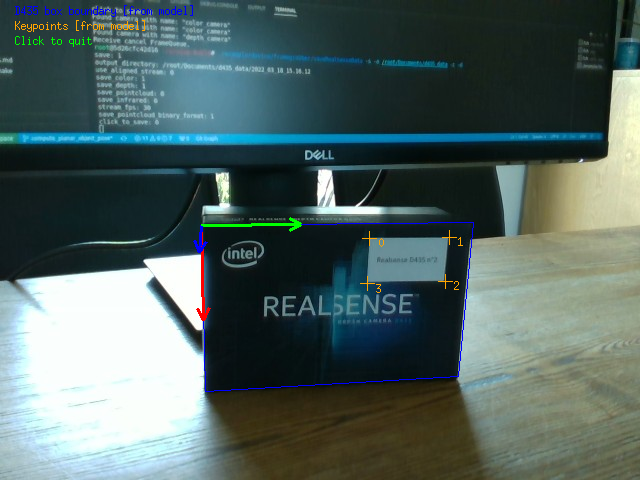

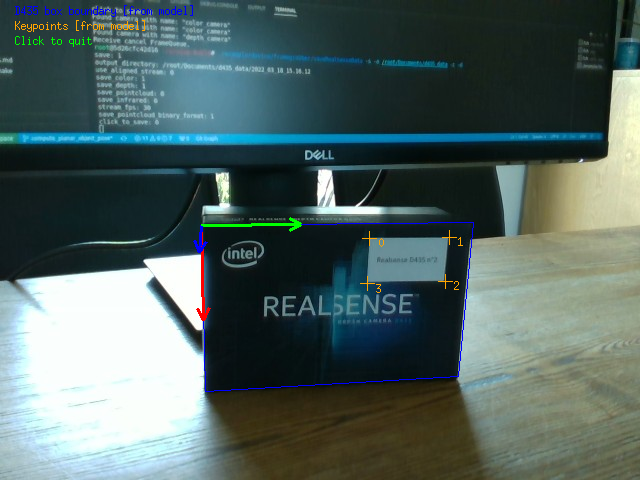

To illustrate this capability, we use the following image to estimate the pose of the white object delimited by the orange crosses numbered 1 to 4. The coordinates of these four crosses define the CAD model of our object. They are expressed in the frame shown in the image. The object lies on a larger plane, delimited by the blue rectangle, that corresponds to the RealSense cover box. The coordinates of the plane bounds are expressed in the same frame.

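To summarize: the orange crosses are the object corners that make up the CAD model, the blue rectangle delimits the supporting plane, and both are expressed in the same object frame.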

In this tutorial, an Intel RealSense D435 was used to capture the data. We also created a 3D CAD model file, available in data/d435_box.model, that contains the coordinates of the plane bounds and of the object corners.

    # Bounds
    # - Contains a list of 3D points (X Y Z) corresponding to the bounds of the plane to consider
    Bounds
    data:
      - [0, 0, 0] # pt 0: top left point
      - [0, 0.143, 0] # pt 1: top right point
      - [0.091, 0.143, 0]
      - [0.091, 0, 0]

    # Keypoints
    # - Contains a list of 3D points (X Y Z) corresponding to the keypoints
    # - These points are defined in origin frame and represent the white sticker corners
    Keypoints
    data:
      - [0.008, 0.086, 0]
      - [0.008, 0.130, 0]
      - [0.033, 0.130, 0]
      - [0.033, 0.086, 0]

The tutorial program loads these data from the model file.
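To make the file layout concrete, here is a minimal sketch of a reader for this kind of model file, written with the C++ standard library only. It is not the loader used by the tutorial: `parseModel` and `Point3` are hypothetical names, and the sketch assumes that each section starts with its name (`Bounds`, `Keypoints`), followed by `data:` and one `- [X, Y, Z]` entry per line.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <map>
#include <sstream>
#include <string>
#include <vector>

using Point3 = std::array<double, 3>;

// Hypothetical helper (not a ViSP function): returns, for each section name
// found in the stream, the list of 3D points listed under it.
std::map<std::string, std::vector<Point3>> parseModel(std::istream &in)
{
  std::map<std::string, std::vector<Point3>> sections;
  std::string line, current;
  while (std::getline(in, line)) {
    // Drop the trailing comment, then the surrounding whitespace.
    line = line.substr(0, line.find('#'));
    const auto first = line.find_first_not_of(" \t\r");
    if (first == std::string::npos) continue; // blank or comment-only line
    const auto last = line.find_last_not_of(" \t\r");
    line = line.substr(first, last - first + 1);

    const auto open = line.find('['), close = line.find(']');
    if (line[0] == '-' && open != std::string::npos && close != std::string::npos && close > open) {
      // Point entry of the form "- [X, Y, Z]".
      std::string body = line.substr(open + 1, close - open - 1);
      for (char &c : body) if (c == ',') c = ' ';
      std::istringstream iss(body);
      Point3 p{};
      if (iss >> p[0] >> p[1] >> p[2]) sections[current].push_back(p);
    }
    else {
      // Any other line: its first token is a section name, unless it is "data:".
      std::istringstream iss(line);
      std::string token;
      iss >> token;
      if (token != "data:") current = token;
    }
  }
  return sections;
}
```

Calling `parseModel` on a stream opened on the model file would give two entries, one per section, each holding four points for the file shown above.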

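Finally, computing the object pose requires the object plane expressed in the color frame and matches between the detected image corners and the model keypoints. The steps below show how each is obtained.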

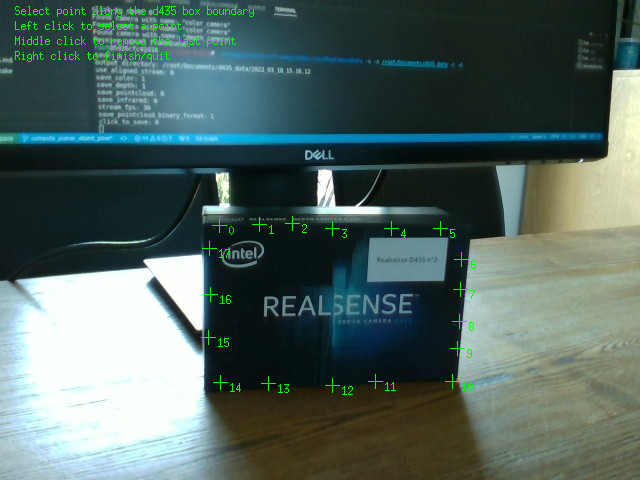

The first step is to estimate the object plane from the depth data. In this tutorial, the user has to delimit this area by selecting points in the image.

Then, based on the user selected points, a convex hull is created and projected to the depth frame.
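As an illustration of the convex hull step, the sketch below computes the hull of a set of 2D image points with Andrew's monotone chain algorithm. This is a generic textbook method chosen for the example, not necessarily the one ViSP uses internally; `Pixel`, `cross2d` and `convexHull` are hypothetical names.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

using Pixel = std::pair<double, double>; // (u, v) image coordinates

// 2D cross product of (a - o) and (b - o); its sign gives the turn direction o -> a -> b.
static double cross2d(const Pixel &o, const Pixel &a, const Pixel &b)
{
  return (a.first - o.first) * (b.second - o.second) - (a.second - o.second) * (b.first - o.first);
}

// Andrew's monotone chain. Returns the hull vertices in boundary order,
// without repeating the first vertex; collinear and interior points are dropped.
std::vector<Pixel> convexHull(std::vector<Pixel> pts)
{
  std::sort(pts.begin(), pts.end());
  pts.erase(std::unique(pts.begin(), pts.end()), pts.end());
  if (pts.size() < 3) return pts;

  std::vector<Pixel> hull;
  // Lower chain, scanning the sorted points from left to right.
  for (const Pixel &p : pts) {
    while (hull.size() >= 2 && cross2d(hull[hull.size() - 2], hull.back(), p) <= 0) hull.pop_back();
    hull.push_back(p);
  }
  // Upper chain, scanning from right to left; the lower chain is kept untouched.
  const std::size_t lowerSize = hull.size() + 1;
  for (auto it = pts.rbegin() + 1; it != pts.rend(); ++it) {
    while (hull.size() >= lowerSize && cross2d(hull[hull.size() - 2], hull.back(), *it) <= 0) hull.pop_back();
    hull.push_back(*it);
  }
  hull.pop_back(); // the last point is the starting point again
  return hull;
}
```

Points clicked inside the polygon, or in an arbitrary order, do not change the result, which is why a hull is a convenient way to turn a handful of user selections into a region of interest.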

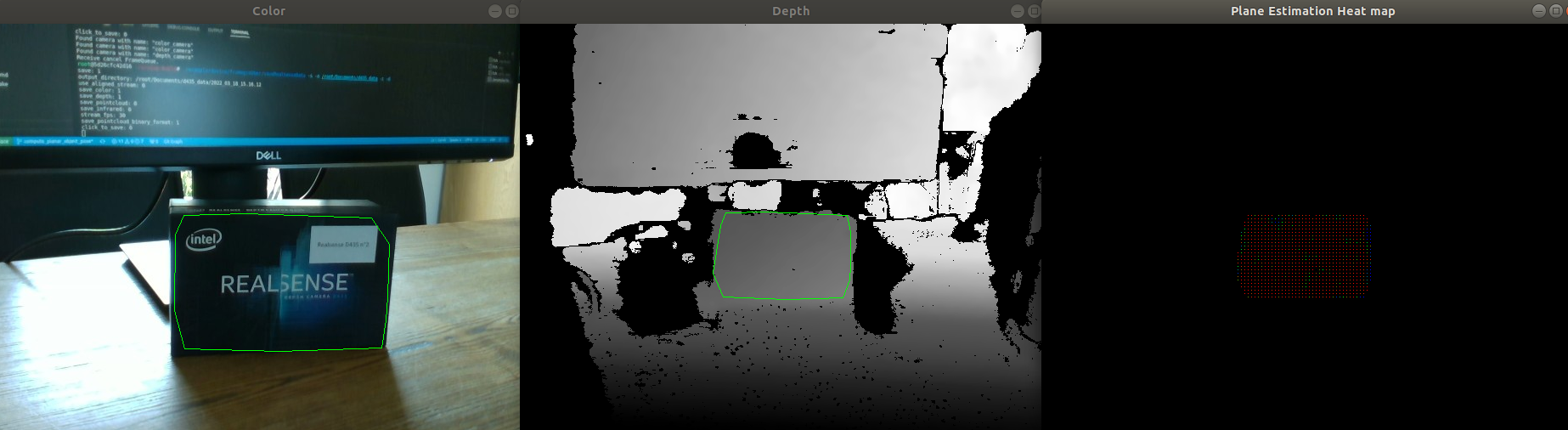

Once the plane ROI is defined in the depth frame, the plane is estimated, first in the depth frame. The resulting plane is then expressed in the color frame.
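The two operations described above can be sketched with plain C++ as follows. This is an illustration under stated assumptions, not the ViSP implementation: the plane is written as n . X + d = 0, the fit is an ordinary least-squares fit of Z = aX + bY + c (which assumes the plane is not parallel to the camera Z axis and does no outlier rejection), and `R` and `t` stand for the depth-to-color extrinsics. `Plane`, `fitPlane` and `transformPlane` are hypothetical names.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <vector>

using Vec3 = std::array<double, 3>;
using Mat3 = std::array<Vec3, 3>;

struct Plane { Vec3 n; double d; }; // points X on the plane satisfy n . X + d = 0, with |n| = 1

static double det3(const Mat3 &m)
{
  return m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
       - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
       + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]);
}

// Least-squares fit of Z = a X + b Y + c to 3D points expressed in the depth frame.
Plane fitPlane(const std::vector<Vec3> &pts)
{
  assert(pts.size() >= 3);
  Mat3 M{}; // normal-equation matrix, sum of row * row^T with row = (X, Y, 1)
  Vec3 rhs{}; // right-hand side, sum of row * Z
  for (const Vec3 &p : pts) {
    const Vec3 row = {p[0], p[1], 1.0};
    for (int i = 0; i < 3; ++i) {
      for (int j = 0; j < 3; ++j) M[i][j] += row[i] * row[j];
      rhs[i] += row[i] * p[2];
    }
  }
  const double det = det3(M);
  assert(std::abs(det) > 1e-12); // crude degeneracy check (e.g. collinear points)
  Vec3 sol{}; // (a, b, c), solved with Cramer's rule
  for (int k = 0; k < 3; ++k) {
    Mat3 Mk = M;
    for (int i = 0; i < 3; ++i) Mk[i][k] = rhs[i];
    sol[k] = det3(Mk) / det;
  }
  // a X + b Y - Z + c = 0, rescaled so that the normal has unit length.
  const double norm = std::sqrt(sol[0] * sol[0] + sol[1] * sol[1] + 1.0);
  return {{sol[0] / norm, sol[1] / norm, -1.0 / norm}, sol[2] / norm};
}

// Expresses in the color frame a plane known in the depth frame, given the
// rigid transform X_color = R * X_depth + t.
Plane transformPlane(const Plane &depthPlane, const Mat3 &R, const Vec3 &t)
{
  Plane colorPlane{};
  for (int i = 0; i < 3; ++i) {
    colorPlane.n[i] = R[i][0] * depthPlane.n[0] + R[i][1] * depthPlane.n[1] + R[i][2] * depthPlane.n[2];
  }
  // Substituting X_depth = R^T (X_color - t) into the plane equation gives the new offset.
  colorPlane.d = depthPlane.d - (colorPlane.n[0] * t[0] + colorPlane.n[1] * t[1] + colorPlane.n[2] * t[2]);
  return colorPlane;
}
```

In practice the points passed to `fitPlane` would be the 3D points obtained from the depth pixels that fall inside the ROI. Real depth data is noisy, so a robust estimator is usually preferable to this plain least-squares fit.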

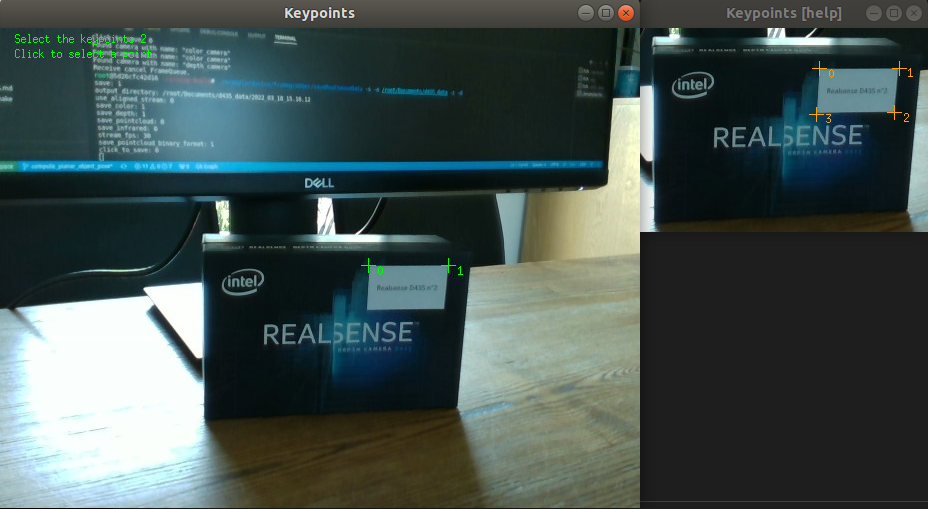

Here, the user's manual selection stands in for an automatic corner detection and for the matching between the detected corners and the model points.

Finally, the object pose (i.e., the relationship between the object and the color optical frame) is computed from the plane expressed in the color frame and the detection/model matches.
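To show why a plane plus a few 2D/3D matches is enough to recover a pose, here is a simplified sketch. It is not the ViSP routine used by the tutorial. It assumes a distortion-free pinhole camera, lifts each pixel to 3D by intersecting its viewing ray with the plane, and then builds the rigid transform from exactly three non-collinear correspondences, with no noise handling. `Intrinsics`, `Pose`, `backProject` and `poseFromThreePoints` are hypothetical names.

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Vec3 = std::array<double, 3>;
using Mat3 = std::array<Vec3, 3>;

// Hypothetical distortion-free pinhole intrinsics (focal lengths and principal point, in pixels).
struct Intrinsics { double px, py, u0, v0; };

// Pose of the object in the camera frame: X_cam = R * X_obj + t.
struct Pose { Mat3 R; Vec3 t; };

static Vec3 sub3(const Vec3 &a, const Vec3 &b) { return {a[0] - b[0], a[1] - b[1], a[2] - b[2]}; }
static Vec3 cross3(const Vec3 &a, const Vec3 &b)
{
  return {a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0]};
}
static Vec3 normalize3(const Vec3 &a)
{
  const double n = std::sqrt(a[0] * a[0] + a[1] * a[1] + a[2] * a[2]);
  assert(n > 0.0);
  return {a[0] / n, a[1] / n, a[2] / n};
}

// Intersects the viewing ray of pixel (u, v) with the plane n . X + d = 0 (camera frame).
Vec3 backProject(double u, double v, const Intrinsics &cam, const Vec3 &n, double d)
{
  const double x = (u - cam.u0) / cam.px; // normalized image coordinates
  const double y = (v - cam.v0) / cam.py;
  const double denom = n[0] * x + n[1] * y + n[2];
  assert(std::abs(denom) > 1e-12); // the ray must not be parallel to the plane
  const double Z = -d / denom;
  return {x * Z, y * Z, Z};
}

// Orthonormal frame attached to three non-collinear points; columns are the x, y, z axes.
static Mat3 frameFrom(const Vec3 &p0, const Vec3 &p1, const Vec3 &p2)
{
  const Vec3 x = normalize3(sub3(p1, p0));
  const Vec3 z = normalize3(cross3(x, sub3(p2, p0)));
  const Vec3 y = cross3(z, x);
  Mat3 F{};
  for (int i = 0; i < 3; ++i) { F[i][0] = x[i]; F[i][1] = y[i]; F[i][2] = z[i]; }
  return F;
}

// Exact rigid transform mapping three object points onto their camera-frame counterparts.
Pose poseFromThreePoints(const std::array<Vec3, 3> &obj, const std::array<Vec3, 3> &cam)
{
  const Mat3 Fo = frameFrom(obj[0], obj[1], obj[2]);
  const Mat3 Fc = frameFrom(cam[0], cam[1], cam[2]);
  Pose T{};
  for (int i = 0; i < 3; ++i) {
    for (int j = 0; j < 3; ++j) {
      for (int k = 0; k < 3; ++k) T.R[i][j] += Fc[i][k] * Fo[j][k]; // R = Fc * Fo^T
    }
  }
  for (int i = 0; i < 3; ++i) {
    T.t[i] = cam[0][i] - (T.R[i][0] * obj[0][0] + T.R[i][1] * obj[0][1] + T.R[i][2] * obj[0][2]);
  }
  return T;
}
```

With the four keypoints of the model file, a practical estimator would use all the matches and minimize a reprojection or 3D alignment error instead of relying on three points only.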

You can continue with the Tutorial: Pose estimation from points or with the Tutorial: Homography estimation from points.