Visual Servoing Platform

version 3.6.1 under development (2024-11-16)

This tutorial follows Tutorial: AprilTag marker detection and shows how AprilTag marker detection can be achieved with ViSP on iOS devices.

In the next section you will find an example that shows how to detect tags in a single image. To learn how to print an AprilTag marker, see Print an AprilTag marker.

Note that all the material (Xcode project and image) described in this tutorial is part of the ViSP source code (in the tutorial/ios/StartedAprilTag folder) and can be found at https://github.com/lagadic/visp/tree/master/tutorial/ios/StartedAprilTag.

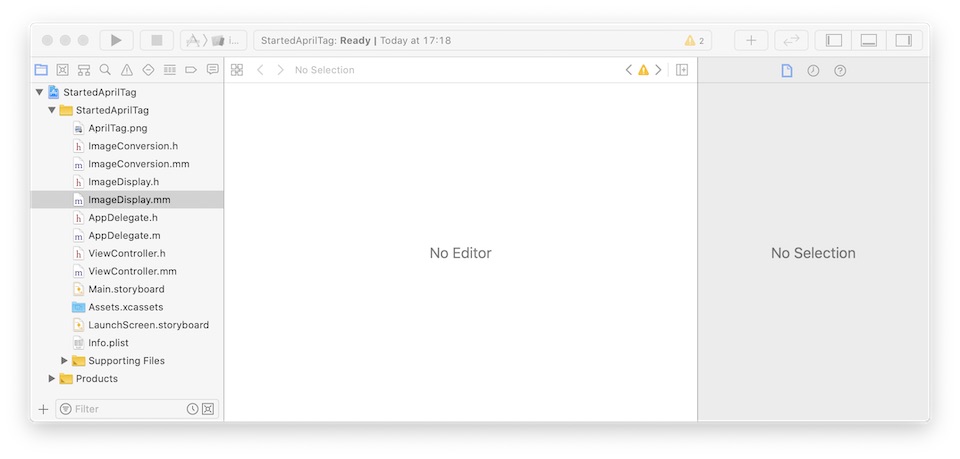

Let us consider the Xcode project named StartedAprilTag that is part of the ViSP source code and located in $VISP_WS/tutorial/ios/StartedAprilTag. This project is an Xcode "Single view application" that contains ImageConversion.h and ImageConversion.mm to convert between UIImage and ViSP images (see Image conversion functions). It also contains the ImageDisplay.h and ImageDisplay.mm files used to display lines and frames in an image overlay, ViewController.mm that handles the tag detection, and an image AprilTag.png used as input.

To open this application, if you followed Tutorial: Installation from prebuilt packages for iOS devices, simply run:

$ cd $HOME/framework

then download the content of https://github.com/lagadic/visp/tree/master/tutorial/ios/StartedAprilTag into this folder and run:

$ open StartedAprilTag -a Xcode

or, if you already downloaded ViSP following Tutorial: Installation from source for iOS devices, run:

$ open $HOME/framework/visp/tutorial/ios/StartedAprilTag -a Xcode

Here you should see something similar to:

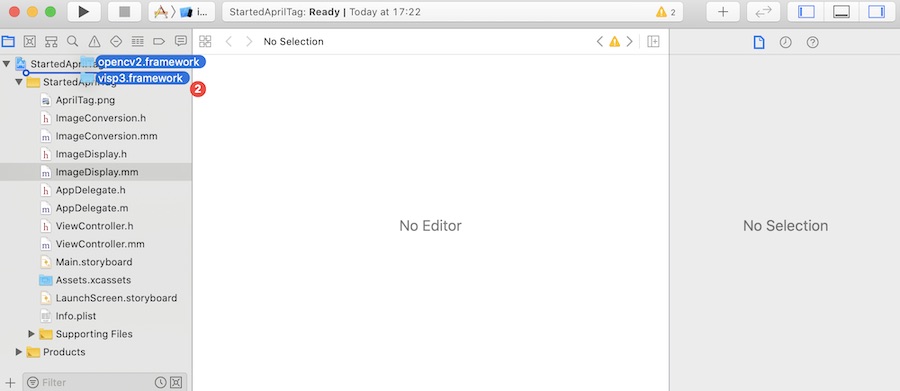

Once the project is open, you just have to drag and drop the ViSP and OpenCV frameworks, available in $HOME/framework/ios if you followed Tutorial: Installation from prebuilt packages for iOS devices.

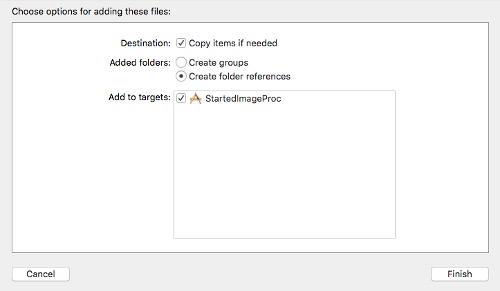

In the dialog box, enable the "Copy items if needed" check box to add visp3.framework and opencv2.framework to the project.

Now you should be able to build and run your application.

The Xcode project StartedAprilTag contains the ImageDisplay.h and ImageDisplay.mm files that implement the functions to display a line or a frame as an overlay on a UIImage.

One function implemented in ImageDisplay.mm shows how to display a line.

Another function implemented in ImageDisplay.mm shows how to display a 3D frame, with a red line for the x axis, a green line for the y axis and a blue line for the z axis.
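To illustrate the geometry behind displaying a 3D frame, here is a minimal C++ sketch. It is not the code from ImageDisplay.mm. It assumes a pinhole camera model without distortion, and the names `Camera`, `Pose`, `Pixel`, `FrameEndpoints`, `project` and `frameEndpoints` are hypothetical stand-ins, not ViSP types or functions. The idea is to project the frame origin and the tip of each axis into the image; the overlay is then three line segments from the origin pixel to each tip (red for x, green for y, blue for z).

```cpp
#include <cassert>

// Hypothetical stand-ins for illustration only; these are not ViSP types.
struct Camera {
  double px, py;  // focal length expressed in pixels along u and v
  double u0, v0;  // principal point, in pixels
};

struct Pose {
  double R[3][3];  // rotation from the tag (object) frame to the camera frame
  double t[3];     // translation from the tag frame to the camera frame, in meters
};

struct Pixel {
  double u, v;
};

struct FrameEndpoints {
  Pixel origin, x, y, z;
};

// Express the 3D point (X, Y, Z), given in the object frame, in the camera
// frame, then apply the pinhole model without distortion:
//   u = u0 + px * Xc / Zc,   v = v0 + py * Yc / Zc
Pixel project(const Camera &cam, const Pose &cMo, double X, double Y, double Z)
{
  double c[3];
  for (int i = 0; i < 3; ++i) {
    c[i] = cMo.R[i][0] * X + cMo.R[i][1] * Y + cMo.R[i][2] * Z + cMo.t[i];
  }
  assert(c[2] > 0.0);  // the point must lie in front of the camera
  Pixel p;
  p.u = cam.u0 + cam.px * c[0] / c[2];
  p.v = cam.v0 + cam.py * c[1] / c[2];
  return p;
}

// Compute the pixel positions of the frame origin and of the tips of the
// x, y and z axes, each axis having length `size` (in meters).
FrameEndpoints frameEndpoints(const Camera &cam, const Pose &cMo, double size)
{
  FrameEndpoints f;
  f.origin = project(cam, cMo, 0.0, 0.0, 0.0);
  f.x = project(cam, cMo, size, 0.0, 0.0);  // tip of the red segment
  f.y = project(cam, cMo, 0.0, size, 0.0);  // tip of the green segment
  f.z = project(cam, cMo, 0.0, 0.0, size);  // tip of the blue segment
  return f;
}
```

As a usage example with made-up values: for a camera with px = py = 600, u0 = 320, v0 = 240 and a tag facing the camera at 0.5 m (identity rotation, translation (0, 0, 0.5)), calling `frameEndpoints(cam, cMo, 0.1)` places the origin at about (320, 240), the x tip at about (440, 240) and the y tip at about (320, 360). The z tip projects onto the origin because in this configuration the z axis lies along the optical axis.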

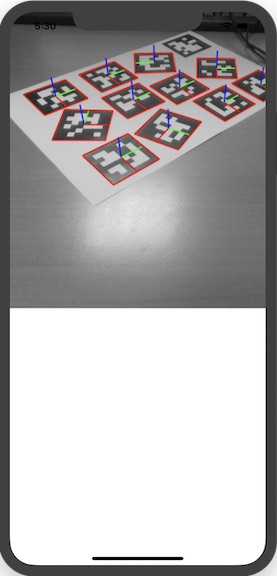

StartedAprilTag application using Xcode "Product > Build" menu.StartedAprilTag application on your device, you should be able to see the following screen shot:

If you get this issue, follow the "iOS error: libxml/parser.h not found" link.

You are now ready to follow Tutorial: AprilTag marker real-time detection on iOS, which shows how to detect AprilTags in real time from your iOS device camera.