This tutorial assumes that you have followed Tutorial: How to create a basic iOS application that uses ViSP.

Introduction

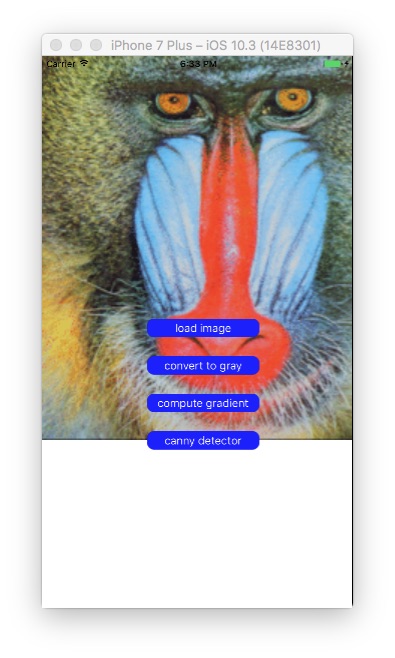

In this tutorial you will learn how to do simple image processing on iOS devices with ViSP. The application loads a color image (monkey.png) and lets the user display this image in grey levels, its gradients, or its Canny edges, on the iOS simulator or on a device.

In ViSP, images are handled using the vpImage class. On iOS, however, image rendering has to be done using the UIImage class, which is part of the UIKit framework; the conversions shown here rely on Core Graphics. In this tutorial we provide the functions that convert a vpImage to a UIImage and vice versa.

Note that all the material (source code and image) used in this tutorial is part of the ViSP source code and can be downloaded using the following command:

StartedImageProc application

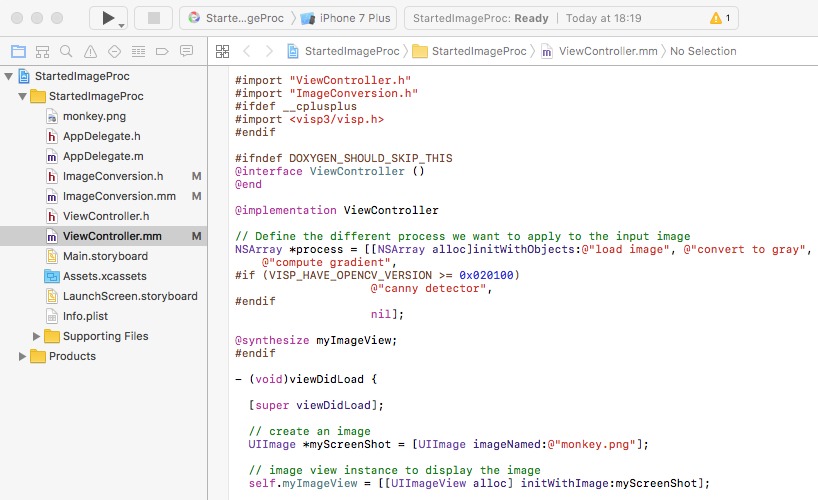

Let us consider the Xcode project named StartedImageProc that is part of the ViSP source code. This project is an Xcode "Single view application" in which we renamed ViewController.m to ViewController.mm, made minor modifications to ViewController.h, and added the monkey.png image.

To download the complete StartedImageProc project, run the following command:

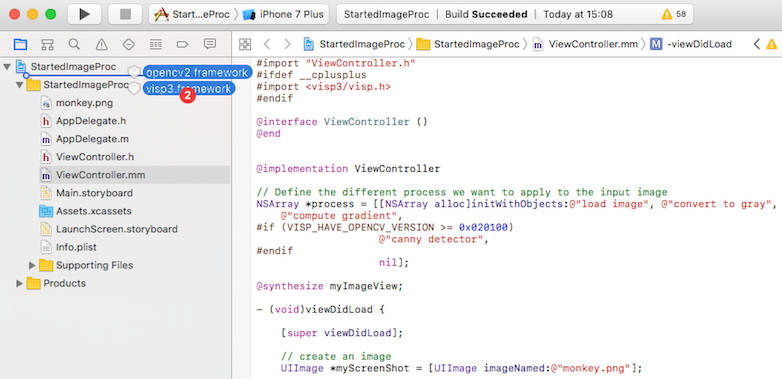

Once downloaded, you just have to drag & drop the ViSP and OpenCV frameworks obtained by following Tutorial: Installation from prebuilt packages for iOS devices.

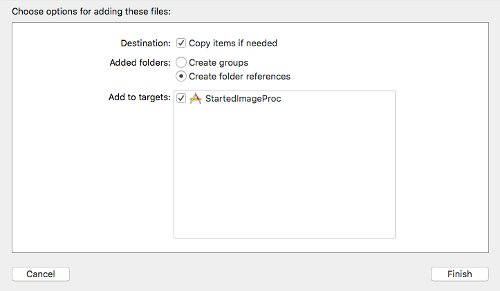

In the dialog box, enable the check box "Copy items if needed" to add visp3.framework and opencv.framework to the project.

Now you should be able to build and run your application.

Image conversion functions

The Xcode project StartedImageProc contains ImageConversion.h and ImageConversion.mm files that implement the functions to convert UIImage to ViSP vpImage and vice versa.

UIImage to color vpImage

The following function, implemented in ImageConversion.mm, shows how to convert a UIImage into a vpImage<vpRGBa> instantiated as a color image.

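    + (vpImage<vpRGBa>)vpImageColorFromUIImage:(UIImage *)image
    {
      CGColorSpaceRef colorSpace = CGImageGetColorSpace(image.CGImage);

      if (CGColorSpaceGetModel(colorSpace) == kCGColorSpaceModelMonochrome) {
        NSLog(@"Input UIImage is grayscale");
        vpImage<unsigned char> gray(image.size.height, image.size.width); // 8 bits per component, 1 channel

        CGContextRef contextRef = CGBitmapContextCreate(gray.bitmap,                // pointer to the pixel data
                                                        image.size.width,           // width of the bitmap
                                                        image.size.height,          // height of the bitmap
                                                        8,                          // bits per component
                                                        image.size.width,           // bytes per row
                                                        colorSpace,                 // color space
                                                        kCGImageAlphaNone |
                                                        kCGBitmapByteOrderDefault); // bitmap info flags
        CGContextDrawImage(contextRef, CGRectMake(0, 0, image.size.width, image.size.height), image.CGImage);
        CGContextRelease(contextRef);

        // Expand the single gray channel into a color image
        vpImage<vpRGBa> color;
        vpImageConvert::convert(gray, color);

        return color;
      }
      else {
        NSLog(@"Input UIImage is color");
        vpImage<vpRGBa> color(image.size.height, image.size.width); // 8 bits per component, 4 channels

        colorSpace = CGColorSpaceCreateDeviceRGB();
        CGContextRef contextRef = CGBitmapContextCreate(color.bitmap,               // pointer to the pixel data
                                                        image.size.width,           // width of the bitmap
                                                        image.size.height,          // height of the bitmap
                                                        8,                          // bits per component
                                                        4 * image.size.width,       // bytes per row
                                                        colorSpace,                 // color space
                                                        kCGImageAlphaNoneSkipLast |
                                                        kCGBitmapByteOrderDefault); // bitmap info flags
        CGContextDrawImage(contextRef, CGRectMake(0, 0, image.size.width, image.size.height), image.CGImage);
        CGContextRelease(contextRef);
        CGColorSpaceRelease(colorSpace); // created above, so it must be released

        return color;
      }
    }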
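
When the input UIImage is grayscale, its one-channel pixels have to be expanded to four channels to obtain a color image. The following is a minimal sketch in plain C++ of what such an expansion amounts to, assuming a tightly packed, row-major buffer. The names `Rgba` and `grayToRgba` are hypothetical and only serve this illustration; they are not part of ViSP.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical 4-channel pixel used only in this sketch (not a ViSP type).
struct Rgba {
  std::uint8_t r, g, b, a;
};

// Expand a tightly packed, row-major 8-bit gray buffer into RGBA pixels.
// Each gray value is copied to R, G and B; the fourth channel is set to `alpha`.
std::vector<Rgba> grayToRgba(const std::vector<std::uint8_t> &gray,
                             std::size_t width, std::size_t height,
                             std::uint8_t alpha = 255)
{
  assert(gray.size() == width * height); // one byte per pixel is expected
  std::vector<Rgba> color(gray.size());
  for (std::size_t i = 0; i < gray.size(); ++i) {
    color[i] = Rgba{gray[i], gray[i], gray[i], alpha};
  }
  return color;
}
```

The output holds four bytes per pixel, which is why the color branch uses a row stride of 4 * width while the grayscale branch uses width.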

UIImage to gray vpImage

The following function, implemented in ImageConversion.mm, shows how to convert a UIImage into a vpImage<unsigned char> instantiated as a grey level image.

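    + (vpImage<unsigned char>)vpImageGrayFromUIImage:(UIImage *)image
    {
      CGColorSpaceRef colorSpace = CGImageGetColorSpace(image.CGImage);

      if (CGColorSpaceGetModel(colorSpace) == kCGColorSpaceModelMonochrome) {
        NSLog(@"Input UIImage is grayscale");
        vpImage<unsigned char> gray(image.size.height, image.size.width); // 8 bits per component, 1 channel

        CGContextRef contextRef = CGBitmapContextCreate(gray.bitmap,                // pointer to the pixel data
                                                        image.size.width,           // width of the bitmap
                                                        image.size.height,          // height of the bitmap
                                                        8,                          // bits per component
                                                        image.size.width,           // bytes per row
                                                        colorSpace,                 // color space
                                                        kCGImageAlphaNone |
                                                        kCGBitmapByteOrderDefault); // bitmap info flags
        CGContextDrawImage(contextRef, CGRectMake(0, 0, image.size.width, image.size.height), image.CGImage);
        CGContextRelease(contextRef);

        return gray;
      } else {
        NSLog(@"Input UIImage is color");
        vpImage<vpRGBa> color(image.size.height, image.size.width); // 8 bits per component, 4 channels

        colorSpace = CGColorSpaceCreateDeviceRGB();
        CGContextRef contextRef = CGBitmapContextCreate(color.bitmap,               // pointer to the pixel data
                                                        image.size.width,           // width of the bitmap
                                                        image.size.height,          // height of the bitmap
                                                        8,                          // bits per component
                                                        4 * image.size.width,       // bytes per row
                                                        colorSpace,                 // color space
                                                        kCGImageAlphaNoneSkipLast |
                                                        kCGBitmapByteOrderDefault); // bitmap info flags
        CGContextDrawImage(contextRef, CGRectMake(0, 0, image.size.width, image.size.height), image.CGImage);
        CGContextRelease(contextRef);
        CGColorSpaceRelease(colorSpace); // created above, so it must be released

        // Reduce the color image to a single gray channel
        vpImage<unsigned char> gray;
        vpImageConvert::convert(color, gray);

        return gray;
      }
    }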
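
When the input UIImage is a color image, the four-channel pixels have to be reduced to a single grey level. The sketch below shows one common way to do this in plain C++, as a weighted sum of the R, G and B channels. The Rec. 709 luma weights and the name `rgbaToGray` are assumptions made for this illustration; the conversion performed by ViSP may use different weights or rounding.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Convert tightly packed RGBA bytes (4 bytes per pixel, row-major) to 8-bit gray.
// The weights are the Rec. 709 luma coefficients, chosen here as an assumption.
std::vector<std::uint8_t> rgbaToGray(const std::vector<std::uint8_t> &rgba,
                                     std::size_t width, std::size_t height)
{
  assert(rgba.size() == 4 * width * height); // four bytes per pixel are expected
  std::vector<std::uint8_t> gray(width * height);
  for (std::size_t i = 0; i < gray.size(); ++i) {
    const double r = rgba[4 * i + 0];
    const double g = rgba[4 * i + 1];
    const double b = rgba[4 * i + 2];
    // The fourth byte of each pixel is ignored; + 0.5 rounds to nearest.
    gray[i] = static_cast<std::uint8_t>(0.2126 * r + 0.7152 * g + 0.0722 * b + 0.5);
  }
  return gray;
}
```

Because the three weights sum to 1, a pure white pixel maps to 255 and a pure black pixel maps to 0.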

Color vpImage to UIImage

The following function, implemented in ImageConversion.mm, shows how to convert a color vpImage<vpRGBa> into a UIImage.

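    + (UIImage *)UIImageFromVpImageColor:(const vpImage<vpRGBa> &)I
    {
      // Copy the pixel data: 4 bytes per pixel
      NSData *data = [NSData dataWithBytes:I.bitmap length:I.getSize() * 4];
      CGColorSpaceRef colorSpace = CGColorSpaceCreateDeviceRGB();
      CGDataProviderRef provider = CGDataProviderCreateWithCFData((__bridge CFDataRef)data);

      // Create a CGImage from the vpImage data
      CGImageRef imageRef = CGImageCreate(I.getWidth(),                                  // width
                                          I.getHeight(),                                 // height
                                          8,                                             // bits per component
                                          8 * 4,                                         // bits per pixel
                                          4 * I.getWidth(),                              // bytes per row
                                          colorSpace,                                    // color space
                                          kCGImageAlphaNone | kCGBitmapByteOrderDefault, // bitmap info
                                          provider,                                      // data provider
                                          NULL,                                          // decode
                                          false,                                         // should interpolate
                                          kCGRenderingIntentDefault                      // intent
                                          );

      // Get the UIImage from the CGImage
      UIImage *finalImage = [UIImage imageWithCGImage:imageRef];

      CGImageRelease(imageRef);
      CGDataProviderRelease(provider);
      CGColorSpaceRelease(colorSpace);

      return finalImage;
    }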
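
The bits per component, bits per pixel, row stride and data length passed to Core Graphics must agree with one another and with the layout of the pixel buffer. As a sanity check, the following plain C++ sketch computes these values for a tightly packed buffer with 8 bits per channel. `BitmapLayout` and `packedLayout` are hypothetical names used only here, and the sketch assumes no padding at the end of each row.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical description of a tightly packed bitmap (illustration only).
struct BitmapLayout {
  std::size_t bitsPerComponent; // bits used by one channel
  std::size_t bitsPerPixel;     // bits used by one pixel (all channels)
  std::size_t bytesPerRow;      // row stride in bytes, without padding
  std::size_t totalBytes;       // size of the whole pixel buffer
};

// Compute the layout of a width x height image with 1 (gray) or 4 (color)
// channels of 8 bits each.
BitmapLayout packedLayout(std::size_t width, std::size_t height, std::size_t channels)
{
  assert(channels == 1 || channels == 4);
  BitmapLayout layout;
  layout.bitsPerComponent = 8;
  layout.bitsPerPixel = 8 * channels;
  layout.bytesPerRow = channels * width;
  layout.totalBytes = layout.bytesPerRow * height;
  return layout;
}
```

For a 640 x 480 color image this gives 32 bits per pixel, 2560 bytes per row and 1228800 bytes in total; for a grey level image of the same size it gives 8, 640 and 307200.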

Gray vpImage to UIImage

The following function, implemented in ImageConversion.mm, shows how to convert a grey level vpImage<unsigned char> into a UIImage.

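    + (UIImage *)UIImageFromVpImageGray:(const vpImage<unsigned char> &)I
    {
      // Copy the pixel data: 1 byte per pixel
      NSData *data = [NSData dataWithBytes:I.bitmap length:I.getSize()];
      CGColorSpaceRef colorSpace = CGColorSpaceCreateDeviceGray();
      CGDataProviderRef provider = CGDataProviderCreateWithCFData((__bridge CFDataRef)data);

      // Create a CGImage from the vpImage data
      CGImageRef imageRef = CGImageCreate(I.getWidth(),                                  // width
                                          I.getHeight(),                                 // height
                                          8,                                             // bits per component
                                          8,                                             // bits per pixel
                                          I.getWidth(),                                  // bytes per row
                                          colorSpace,                                    // color space
                                          kCGImageAlphaNone | kCGBitmapByteOrderDefault, // bitmap info
                                          provider,                                      // data provider
                                          NULL,                                          // decode
                                          false,                                         // should interpolate
                                          kCGRenderingIntentDefault                      // intent
                                          );

      // Get the UIImage from the CGImage
      UIImage *finalImage = [UIImage imageWithCGImage:imageRef];

      CGImageRelease(imageRef);
      CGDataProviderRelease(provider);
      CGColorSpaceRelease(colorSpace);

      return finalImage;
    }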

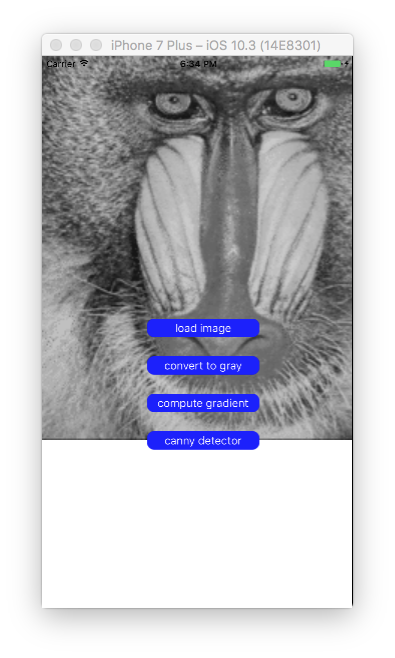

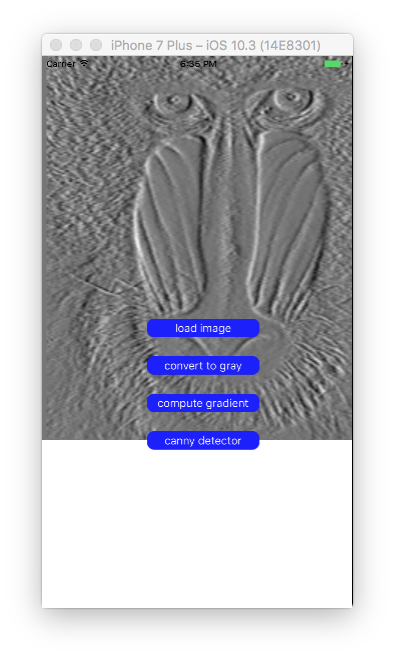

Application output

Once built, if you run the StartedImageProc application on your device, you should see the following screenshots.

- Selecting "load image" gives the following result:

- Selecting "convert to gray" gives the following result:

- Selecting "compute gradient" gives the following result:

- Selecting "canny detector" gives the following result:

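To give an idea of the kind of computation behind the "compute gradient" option, here is a minimal sketch in plain C++ of a horizontal gradient obtained by central differences on a grey level buffer. This is not the filter used by the application, which relies on ViSP for the processing; `gradientX` is a hypothetical name and the border handling is a simplifying assumption.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Horizontal central-difference gradient of a row-major 8-bit gray image.
// The first and last columns are left at 0. The output is signed because
// the difference between two neighbours can be negative.
std::vector<int> gradientX(const std::vector<std::uint8_t> &gray,
                           std::size_t width, std::size_t height)
{
  assert(gray.size() == width * height); // one byte per pixel is expected
  std::vector<int> gx(gray.size(), 0);
  for (std::size_t row = 0; row < height; ++row) {
    for (std::size_t col = 1; col + 1 < width; ++col) {
      const std::size_t i = row * width + col;
      gx[i] = (static_cast<int>(gray[i + 1]) - static_cast<int>(gray[i - 1])) / 2;
    }
  }
  return gx;
}
```

To display such a result as an image, the signed values would still have to be mapped back to the 0 to 255 range, for example by taking the absolute value and clamping.
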
Next tutorial

You are now ready to follow Tutorial: AprilTag marker detection on iOS.