ViSP 2.10.0


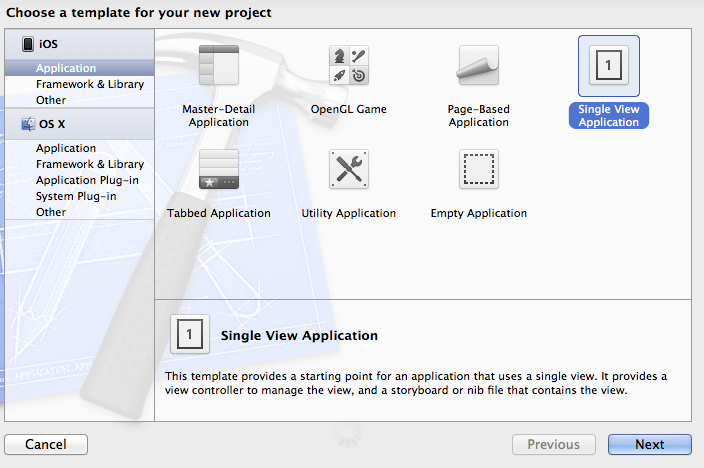

Create a new Single View Application project in Xcode.

Add a lib folder, referred to below as <lib_folder>, to your project.

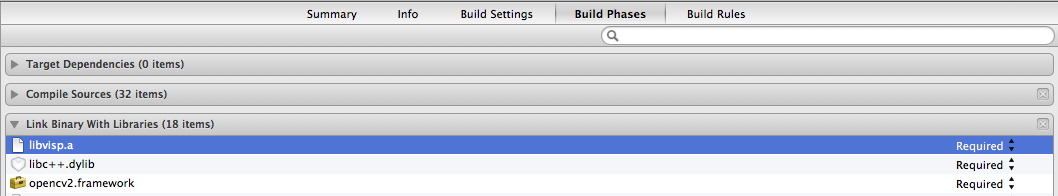

Copy the libvisp.a file into <lib_folder>. You will find libvisp.a in the folder that contains VISP.xcodeproj, under lib → Debug.

In your Xcode project settings, open Build Phases and add libvisp.a to "Link Binary With Libraries".

Add a header folder, referred to below as <header_folder>, to your project.

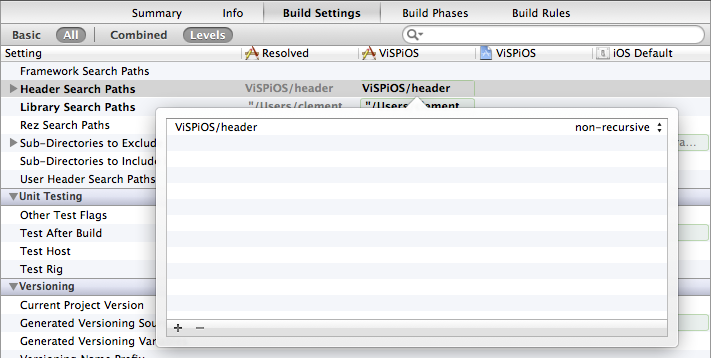

In the ViSP source code, go to the "include" folder and copy the "visp" folder into your project's <header_folder>.

In your Xcode project settings, open Build Settings and add the path of your <header_folder> to "Header Search Paths".

Because we will mix Objective-C and C++ code, rename ViewController.m to ViewController.mm. The .mm extension tells Xcode to compile the file as Objective-C++.

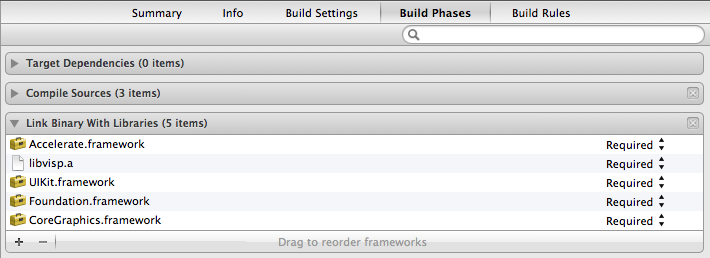

You also have to add Accelerate.framework to your project so that the ViSP code used in this tutorial can be built and run. In your Xcode project settings, open Build Phases and add Accelerate.framework to "Link Binary With Libraries".

Here is a detailed, line-by-line explanation of the source:

In ViewController.mm, include all the ViSP headers needed for the homography computation.
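Because the ViSP headers are C++, a forgotten rename (the file still named ViewController.m) typically surfaces as a flood of confusing syntax errors inside those headers. A minimal sketch of a guard that fails fast with a readable message instead is shown below in plain C++, so it compiles without ViSP. The header path `<visp/vpHomography.h>` is only an assumption about what you would include and is left commented out; `cpp_standard_version()` is a hypothetical helper for illustration.

```cpp
// Stop compilation with a clear message if this file is not compiled as C++
// (for an Objective-C source, that usually means it still has the .m extension).
#ifndef __cplusplus
#error "Compile this file as C++/Objective-C++: rename ViewController.m to ViewController.mm"
#endif

// The ViSP includes would follow the guard, for example
// (assumed ViSP 2.x header layout):
// #include <visp/vpHomography.h>

// Hypothetical helper: returns the C++ language level reported by the compiler
// through the standard __cplusplus macro (e.g. 201103L for C++11).
long cpp_standard_version() {
  return __cplusplus;
}
```

In an Objective-C++ file you would normally write `#import` rather than `#include`; the guard itself works the same way.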

Create a new method that performs the homography computation with ViSP.
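To show what such a method computes without needing libvisp.a, here is a minimal standalone C++ sketch of a direct linear transform (DLT) for exactly four point correspondences. It is not ViSP's implementation (in the tutorial the work is done by ViSP's `vpHomography` class): this sketch fixes h33 = 1 and solves the resulting 8×8 linear system by Gaussian elimination, while a least-squares DLT with coordinate normalization is the usual choice when more than four points are available. The names `homography_from_4_points`, `Point2` and `Mat3` are hypothetical.

```cpp
#include <array>
#include <cmath>
#include <stdexcept>
#include <utility>

// 3x3 homography stored row-major; this sketch fixes H[2][2] to 1.
using Mat3 = std::array<std::array<double, 3>, 3>;

struct Point2 {
  double x;
  double y;
};

// Estimates the homography aHb that maps each pb[i] onto pa[i]
// (minimal 4-point DLT, exact solve with h33 = 1).
Mat3 homography_from_4_points(const std::array<Point2, 4>& pb,
                              const std::array<Point2, 4>& pa) {
  // Augmented 8x9 system [A | b] for the unknowns h11..h32.
  double M[8][9] = {};
  for (int i = 0; i < 4; ++i) {
    const double x = pb[i].x, y = pb[i].y;
    const double u = pa[i].x, v = pa[i].y;
    const double rowU[9] = {x, y, 1, 0, 0, 0, -x * u, -y * u, u};
    const double rowV[9] = {0, 0, 0, x, y, 1, -x * v, -y * v, v};
    for (int j = 0; j < 9; ++j) {
      M[2 * i][j] = rowU[j];
      M[2 * i + 1][j] = rowV[j];
    }
  }
  // Forward elimination with partial pivoting.
  for (int col = 0; col < 8; ++col) {
    int pivot = col;
    for (int r = col + 1; r < 8; ++r)
      if (std::fabs(M[r][col]) > std::fabs(M[pivot][col])) pivot = r;
    if (std::fabs(M[pivot][col]) < 1e-12)
      throw std::runtime_error("degenerate configuration (e.g. 3 collinear points)");
    if (pivot != col)
      for (int j = 0; j < 9; ++j) std::swap(M[pivot][j], M[col][j]);
    for (int r = col + 1; r < 8; ++r) {
      const double f = M[r][col] / M[col][col];
      for (int j = col; j < 9; ++j) M[r][j] -= f * M[col][j];
    }
  }
  // Back substitution.
  double h[8];
  for (int r = 7; r >= 0; --r) {
    double s = M[r][8];
    for (int j = r + 1; j < 8; ++j) s -= M[r][j] * h[j];
    h[r] = s / M[r][r];
  }
  return Mat3{{{h[0], h[1], h[2]}, {h[3], h[4], h[5]}, {h[6], h[7], 1.0}}};
}
```

Each correspondence contributes two linear equations, so four points in general position (no three collinear) determine the eight unknowns.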

Call this method from the viewDidLoad method of your ViewController.

You can now build and run your code. It does not matter whether you use the Simulator or a device, because the example only runs computations and prints the results.

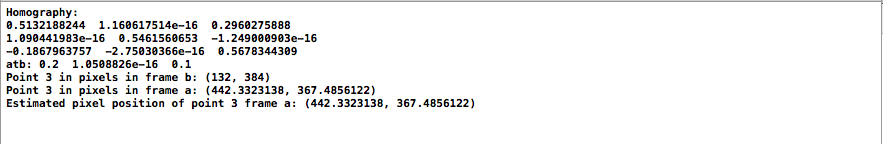

You should obtain these logs, showing that the ViSP code was correctly executed by your iOS project.
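If you also log the point correspondences, one way to check the estimated homography is to map the source points through it and measure the distance to their counterparts; for exact correspondences the error should be close to zero. A minimal standalone C++ sketch, with the hypothetical helper names `apply_homography` and `max_reprojection_error`:

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <vector>

struct Pt {
  double x;
  double y;
};

// Maps p through the 3x3 homography H (row-major) and dehomogenizes.
Pt apply_homography(const double H[3][3], const Pt& p) {
  const double w = H[2][0] * p.x + H[2][1] * p.y + H[2][2];
  return {(H[0][0] * p.x + H[0][1] * p.y + H[0][2]) / w,
          (H[1][0] * p.x + H[1][1] * p.y + H[1][2]) / w};
}

// Largest Euclidean distance between H * src[i] and dst[i].
double max_reprojection_error(const double H[3][3],
                              const std::vector<Pt>& src,
                              const std::vector<Pt>& dst) {
  double worst = 0.0;
  for (std::size_t i = 0; i < src.size() && i < dst.size(); ++i) {
    const Pt q = apply_homography(H, src[i]);
    worst = std::max(worst, std::hypot(q.x - dst[i].x, q.y - dst[i].y));
  }
  return worst;
}
```

Using the maximum rather than the mean makes a single bad correspondence stand out immediately.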